Bend to: Explore the Proprioceptive Interaction Between Plants and Post-humans in an Immersive Experience

DOI: https://doi.org/10.1145/3698061.3726945

C&C '25: Creativity and Cognition, Virtual, United Kingdom, June 2025

Bend to is an embodied experience based on plants’ phototropic sense in a digital sympathetic environment. Using 3D scanning and time-lapse photography, we gather plant phototropic data, process it with a Support Vector Regression (SVR) model for prediction, and visualize the result in a virtual reality experience. The work immerses individuals in the sensory experience of plants’ responses to their surroundings through proprioceptive interaction. With the embodied engagement of hand-tracking and virtual touching, this artwork aims to enhance participants’ awareness of their bodily position and strengthen connections with the spatial senses of plants. More importantly, it encourages individuals to explore the intricate relationship between nature and post-humans in a future light-irradiated environment.

ACM Reference Format:

Yuting Xue, Yuxuan Bai, and Elke Reinhuber. 2025. Bend to: Explore the Proprioceptive Interaction Between Plants and Post-humans in an Immersive Experience. In Creativity and Cognition (C&C '25), June 23–25, 2025, Virtual, United Kingdom. ACM, New York, NY, USA, 5 pages. https://doi.org/10.1145/3698061.3726945

1 INTRODUCTION

Bend to is an immersive artwork that allows individuals to anticipate and interact with plants’ phototropic senses in a futuristic environment where ultraviolet (UV) radiation has increased. It is created against the backdrop of the changing climate, which is expected to alter, in uncertain ways, the amount of harmful UV radiation reaching the Earth's surface in the future [1], and it offers a virtual experience in which post-humans perceive environmental changes alongside plants. This work integrates 3D scanning and time-lapse photography to collect real-world data on how plants respond to light and presents this subtle process, which is otherwise hard for humans to observe, through a virtual reality (VR) experience. With hand position tracking, users are encouraged to actively sense and explore the position of their own bodies and of plants in the dynamic natural space.

This project builds on phototropism: plants have robust photosensory mechanisms that allow them to bend directionally in response to light [7]. By sensing fluctuations from internal cues, including stochastic tissue growth, and external cues, such as wind and gravity, plants can monitor their growth and regulate their own shapes, allowing shoots to grow against gravity and towards the light [9]. Plants perceive their surroundings with their whole bodies and react to environmental stimuli with physical movements, a capacity that, from a human perspective, the term proprioception describes. In humans, proprioception incorporates kinesthesia, the sense of the body's position and movement conveyed by receptors located in the joints, muscles, tendons, and skin [10]. Proprioceptive signals contribute to the sense of body ownership [14] and imply a delicate balance between individual bodies and the immediate environment in which we live [5]. Significantly, some researchers have extended the concept of proprioception to include the sense of relative position within a living body [8]. The phototropic senses of plants can thus be regarded as a kind of proprioception: plants recognize the position of their bodies and engage their whole bodies to sense changes in their environment and react in order to survive. To some extent, proprioception is a sensory ability that humans share with plants.

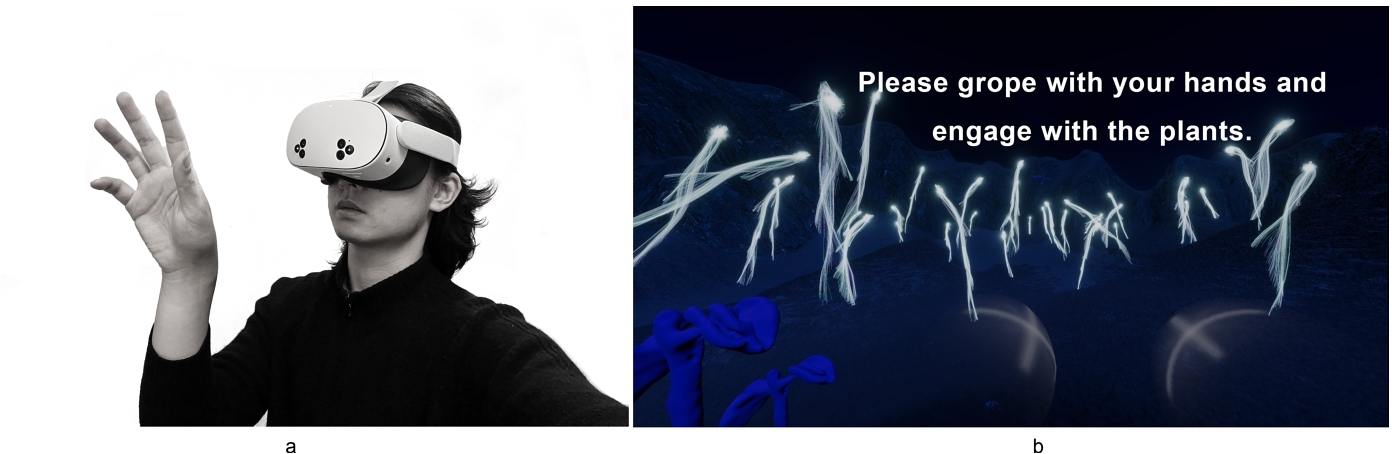

According to Dourish's theory of embodied interaction, “embodied interaction is the creation, manipulation, and sharing of meaning through engaged interaction with artefacts” [4], and a VR environment can support embodied interaction through its social and physical context [3]. Hand-tracking and the natural interaction of directed bodily actions make it possible to explore abstract concepts and scenes that are hard to achieve in the real world [2, 6, 12]. During the experience, individuals twist their upper limbs to sense how plants bend their bodies toward the light in response to environmental stimulation; in other words, they experience the plants’ proprioception through their own proprioception from non-conventional viewpoints (Figure 2). This work aims to help humans become aware of their proprioception and develop their perception of body position, and subsequently to employ it to sense the impacts of future environmental changes. Furthermore, the universe in Bend to is non-anthropocentric, without a hierarchy between nature and post-humans; plants are no longer subject to manipulation by humans and are free to grow and orient themselves in accordance with natural light. Plants belong to nature, as do individuals. When shared proprioception serves as a bridge, the connection between nature and post-humans is strengthened.

2 DESCRIPTION OF SYSTEM

The interactive system of Bend to consists of three components: data collection, data processing, and data visualization (Figure 3). We extracted the data from the phototropism experiment, predicted the phototropic response rate of seedlings, and ultimately visualized the results and enabled interaction with them within the VR experience.

2.1 Data Collection

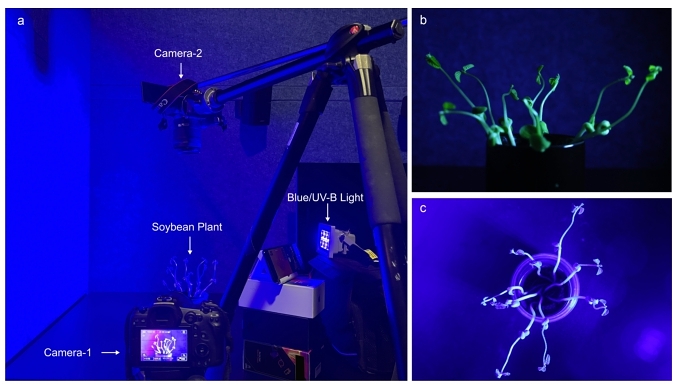

To collect empirical data on the response of plants to light, time-lapse cameras were used to document ongoing changes in unilateral light experiments on 2-week-old soybean seedlings (Figure 4). For the choice of light, the 302 nm UV-B and 450 nm blue light spectra have been shown to induce the strongest and fastest phototropic action [11, 13]. All experiments were carried out in a darkened room at a temperature of 22 °C. A 6 W blue light (450–460 nm) and an 8 W UV-B light (302–312 nm) were placed separately on the right side of the soybean at a distance of 30 cm for 3 hours of irradiation. Two Canon EOS R5 cameras with 50 mm focal length macro lenses were positioned 30 cm in front of and above the plant, taking an image every 5 seconds to collect data.
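
As a rough check on the data volume this capture schedule implies, the arithmetic can be sketched as follows. The sketch assumes that both cameras shoot at the stated 5-second interval for the full 3 hours and that the frame at t = 0 is counted; neither detail is stated in the text, and the function name is purely illustrative.

```python
# Capture schedule taken from the text: 3 hours of irradiation, one image every 5 seconds.
IRRADIATION_HOURS = 3
INTERVAL_SECONDS = 5
CAMERAS = 2  # front view and top view


def frames_per_camera(hours: float, interval_s: float) -> int:
    """Frames recorded by one camera, counting the frame at t = 0 (an assumption)."""
    return int(hours * 3600 // interval_s) + 1


per_camera = frames_per_camera(IRRADIATION_HOURS, INTERVAL_SECONDS)
per_run = per_camera * CAMERAS  # one run = one light source on one seedling

print(per_camera, per_run)
```

Under these assumptions, each irradiation run yields a little over two thousand frames per view, which is the sequence length the tracking step has to handle.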

2.2 Data Processing

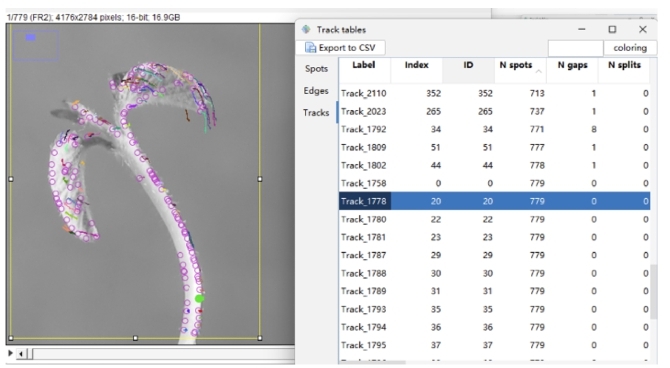

The TrackMate plugin of ImageJ was employed for keypoint detection and tracking in the time-lapse images to produce changes in positional data (Figure 5). Front-view images were used as the primary data source, with top-view images used for auxiliary validation. A high-quality dataset was obtained by optimizing parameters such as the point quality and size thresholds and by cleaning the data according to predefined data integrity criteria, ensuring that the detected points accurately covered the key areas of the plant. The spatial position data of phototropism was acquired by calculating the difference between the x-, y-, and z-axis coordinates at the start and end of light irradiation. During the modeling phase, a nonlinear Support Vector Regression (SVR) model and time series analysis were applied to predict the phototropic response rate of seedlings. By capturing temporal dependencies, time series features improved the SVR model's accuracy in predicting curvature dynamics under unilateral light stimulation. The obtained rates were classified into three categories (fast, medium, and slow) to support the subsequent data visualization.
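
The modeling step described above can be illustrated with a minimal sketch using scikit-learn's `SVR` with an RBF kernel on lagged (time series) features. This is not the authors' pipeline: the displacement series is synthetic, and the one-minute averaging, the five-lag window, the hyperparameters `C` and `epsilon`, and the tertile-based split into slow, medium, and fast are all assumptions made for illustration.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR


def make_lag_features(series, n_lags):
    """Return rows [s[t], ..., s[t+n_lags-1]] and targets s[t+n_lags]."""
    series = np.asarray(series, dtype=float)
    n_rows = len(series) - n_lags
    X = np.column_stack([series[i:i + n_rows] for i in range(n_lags)])
    y = series[n_lags:]
    return X, y


def classify_rates(rates):
    """Label each rate slow/medium/fast using tertile cut points (an assumption)."""
    rates = np.asarray(rates, dtype=float)
    low, high = np.quantile(rates, [1 / 3, 2 / 3])
    names = np.array(["slow", "medium", "fast"])
    return names[np.digitize(rates, [low, high])].tolist()


# SYNTHETIC stand-in for one tracked keypoint: 3 h sampled every 5 s.
rng = np.random.default_rng(0)
t = np.arange(0.0, 3 * 3600, 5.0)
displacement = 40.0 * (1.0 - np.exp(-t / 3000.0)) + rng.normal(0.0, 0.05, t.size)

# Average 12 frames (one minute) to suppress tracking noise, then difference
# to get a bending rate in displacement units per minute.
per_minute = displacement.reshape(-1, 12).mean(axis=1)
rate = np.diff(per_minute)

X, y = make_lag_features(rate, n_lags=5)
split = int(0.8 * len(y))  # chronological split, no shuffling

# Nonlinear SVR on standardized lag features (hyperparameters are illustrative).
model = make_pipeline(StandardScaler(), SVR(kernel="rbf", C=10.0, epsilon=0.01))
model.fit(X[:split], y[:split])
pred = model.predict(X[split:])
print("held-out mean absolute error:", float(np.mean(np.abs(pred - y[split:]))))

# HYPOTHETICAL mean rates for six seedlings, grouped into three classes.
labels = classify_rates([0.10, 0.25, 0.40, 0.55, 0.70, 0.85])
print(labels)
```

The chronological split keeps later frames out of the training set, which matters for time series; a shuffled split would leak future information into the lag features.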

2.3 Data Visualization

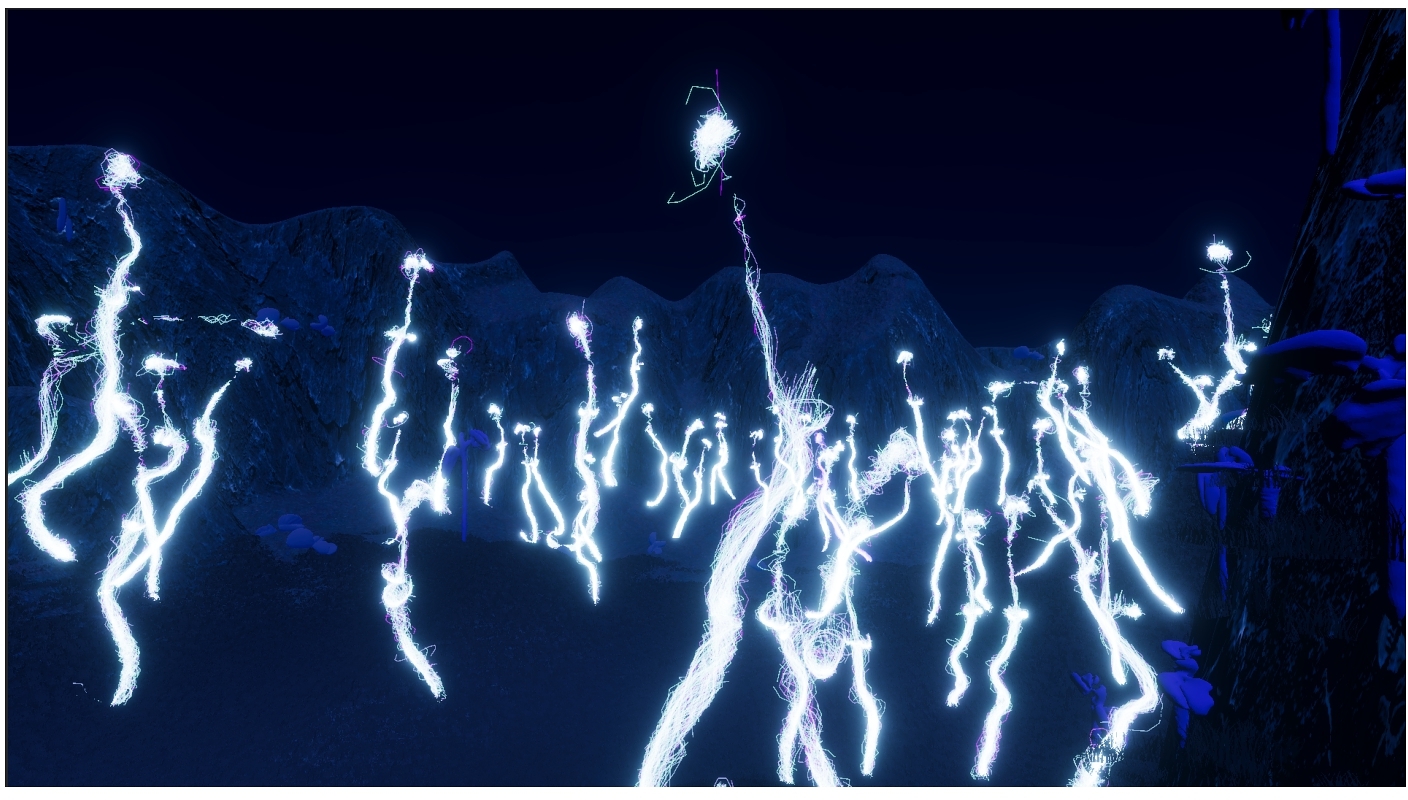

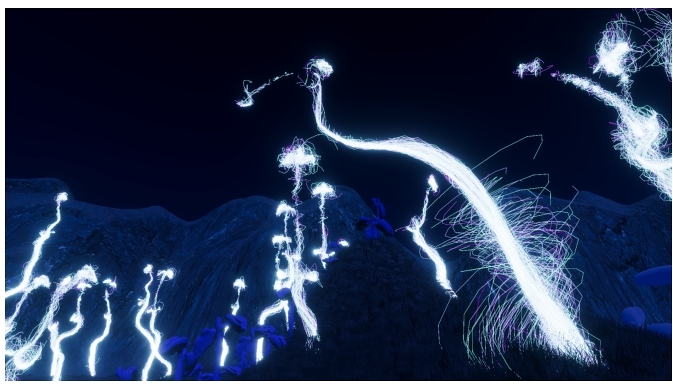

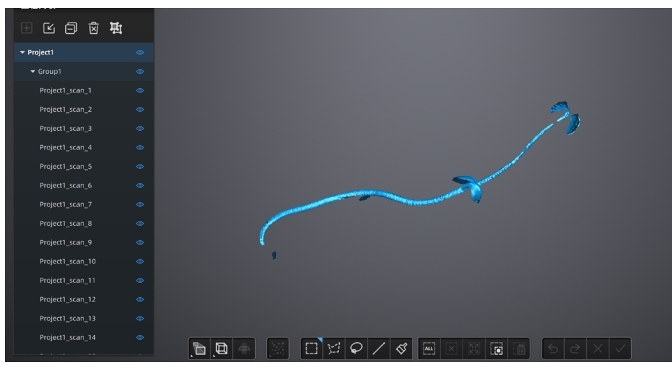

Initially, different soybean seedlings were scanned with an EinScan Pro HD 3D scanner to obtain high-quality FBX models. In Unity, these models were baked into “Signed Distance Fields (SDFs)” and used as properties of the “Visual Effect Graph (VFX Graph)” to generate dynamic particle lines in the shape of soybean seedlings (Figure 6). In terms of data mapping, the processed data was used to control the phototropic response rate and the target point coordinates. The process of phototropic bending is fully automated by “C# Scripts” and is based on the three rate categories provided by the data processing. Meanwhile, a series of “Null Objects” based on the spatial position data was created, providing every seedling with unique relative coordinates for its intended bending point. Afterward, these dynamic soybean seedlings were placed separately in the post-human scene for further interaction.
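
The data mapping described above, in which a rate category and a target point jointly drive the bend, can be sketched in a language-neutral way. The project itself implements this in Unity C# scripts that are not reproduced in the paper, so the following Python sketch is only an illustration: the speed values in `RATE_SPEED`, the function names, and the linear interpolation scheme are all hypothetical.

```python
Vec3 = tuple[float, float, float]

# HYPOTHETICAL speeds: fraction of the full bend completed per second.
RATE_SPEED = {"slow": 0.05, "medium": 0.10, "fast": 0.20}


def lerp(a: Vec3, b: Vec3, w: float) -> Vec3:
    """Linear interpolation between two points, with w in [0, 1]."""
    return (a[0] + (b[0] - a[0]) * w,
            a[1] + (b[1] - a[1]) * w,
            a[2] + (b[2] - a[2]) * w)


def bend_progress(rate_class: str, elapsed_s: float) -> float:
    """Fraction of the bend completed after elapsed_s seconds, clamped to [0, 1]."""
    return max(0.0, min(1.0, RATE_SPEED[rate_class] * elapsed_s))


def bent_tip(rest_tip: Vec3, target: Vec3, rate_class: str, elapsed_s: float) -> Vec3:
    """Position of the seedling tip on its way from rest to its target point."""
    return lerp(rest_tip, target, bend_progress(rate_class, elapsed_s))


# Example: one seedling tip and its target point (both values are made up).
rest_tip = (0.0, 1.0, 0.0)
target = (0.3, 0.9, 0.0)
for rate_class in ("slow", "medium", "fast"):
    print(rate_class, bent_tip(rest_tip, target, rate_class, elapsed_s=2.5))
```

Here the target point plays the role of the per-seedling null object, and the rate category only changes how quickly the tip approaches it, so seedlings with the same target still bend at visibly different speeds.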

The VFX Graph necessitates rendering in the High Definition Render Pipeline (HDRP), which is not supported by stand-alone VR headsets such as the Meta Quest 3S. Consequently, this project runs on a PC connected to the headset with Meta Quest Link via SteamVR to facilitate interaction. This setup also offers a feasible way to present generative art produced with VFX Graph on stand-alone VR headsets in a VR environment.

3 IMMERSIVE EXPERIENCE

3.1 Embodied Interaction

Bend to offers an immersive and interactive experience in a 3D digital space in which users, together with plants, can sense a future light-irradiation environment composed from phototropic data. The proprioceptive interaction consists of two phases: “connection” and “blending.” Upon entering the plant's world with the VR headset, users are presented with instructional texts that guide the experience (Figure 7). Two semi-transparent circular shapes are outlined to signify the hands, assisting users in recognizing their bodily (hand) position. To preserve immersion, these circles are not displayed continuously.

Through the VR equipment, users entering the soybean plant world will initially observe different seedlings bending their bodies toward the light at different angles. As users reach around with their hands to search, they focus on their bodily movement while traveling through the differently shaped plants. Once they virtually touch a particular plant, it provides visual feedback in the form of color changes and a floating-circles effect (Figure 8). Through the plants’ response, users are “connected” to plants in the virtual world and subconsciously perceive their bodily position in the physical world alongside the position of the plant in the virtual environment.

During the second phase, “blending,” users have the opportunity to observe the phototropic response of plants. When the tracked motion of an individual's hands aligns with the positional changes in phototropic bending derived from the experimental data, the “connection” is reinforced into “blending” and rendered as a visual flourish: smoke permeates around the edges of the plant, surrounded by spiraling waves of light (Figure 9). This kind of embodied interaction enables participants to fully sense how plants respond to light radiation and to experience their spatial sensory abilities. Throughout the whole experience, users can adjust their viewpoints to access the insides of plants’ bodies.
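
The paper does not specify how alignment between hand motion and phototropic bending is measured. One plausible way to sketch the “blending” trigger is to compare the direction of the hand's displacement with the direction of the plant's bending displacement using cosine similarity. The thresholds `ALIGN_THRESHOLD` and `MIN_HAND_TRAVEL` and all function names below are hypothetical.

```python
import math

ALIGN_THRESHOLD = 0.9   # HYPOTHETICAL: minimum cosine similarity to count as aligned
MIN_HAND_TRAVEL = 0.05  # HYPOTHETICAL: ignore hand movements shorter than 5 cm


def sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def norm(v):
    """Euclidean length of a 3D vector."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def cosine_similarity(u, v):
    """Cosine of the angle between u and v; 0.0 if either vector has zero length."""
    nu, nv = norm(u), norm(v)
    if nu == 0.0 or nv == 0.0:
        return 0.0
    return (u[0] * v[0] + u[1] * v[1] + u[2] * v[2]) / (nu * nv)


def is_blending(hand_start, hand_end, bend_start, bend_end):
    """True when the hand travelled far enough and roughly along the bending direction."""
    hand_move = sub(hand_end, hand_start)
    bend_move = sub(bend_end, bend_start)
    if norm(hand_move) < MIN_HAND_TRAVEL:
        return False
    return cosine_similarity(hand_move, bend_move) >= ALIGN_THRESHOLD


# Example with made-up coordinates: the plant tip bends to the right and slightly down.
bend = ((0.0, 1.0, 0.0), (0.3, 0.9, 0.0))
print(is_blending((0.0, 1.2, 0.0), (0.2, 1.15, 0.0), *bend))   # hand follows the bend
print(is_blending((0.0, 1.2, 0.0), (-0.2, 1.2, 0.0), *bend))   # hand moves the other way
```

Comparing directions rather than absolute positions means a user does not have to place a hand exactly on the plant; following the bend with a similar gesture is enough, and the minimum-travel check filters out tracking jitter from a resting hand.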

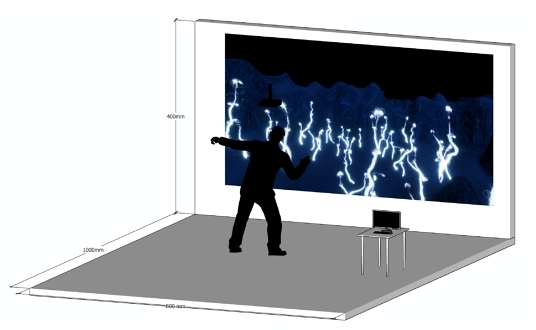

3.2 Physical Exhibition Setup

The equipment required to exhibit this project comprises a Meta Quest 3S, a PC laptop running Windows 10 or later, and a projector or a screen connected to the computer. The recommended installation area measures 8 meters by 10 meters to enhance the audience's experience while navigating the virtual scene. The project is flexible regarding the exhibition space and can fit a smaller area if necessary. A quiet and relatively dark environment provides optimal immersion for audiences.

The exhibition area should ideally have a projection wall or screen to display the real-time moving images captured by a stationary camera in Unity, enabling audiences to observe other users’ experience from a third-person perspective. The VR headset should preferably connect to the laptop wirelessly via Air Link. In case of poor network conditions, a sufficiently long USB Type-C to USB 3.2 cable may be used for the connection.

In GatherTown, this work can be presented as a streamed VR scene or a documentation video. More information can be found at:

https://yutingxue.com/Bend-to

4 CONCLUSION

This project proposes a novel method for humans to sense the phototropic response of plants in nature through proprioception. It converts the biological data of plants, which is undetectable by the human eye within an ordinary timeframe, into a sensory experience through creativity and computing. By engaging, together with plants, proprioceptive senses that are difficult to articulate in words, our work stimulates individuals to explore their bodily position in the sympathetic environment. Bend to focuses on enhancing the spatial connection between plants and humans while furthering the comprehension of ever-changing nature.

Acknowledgments

This work is supported by the City University of Hong Kong. We express our gratitude to Jiakun Pei for his contributions to data processing.

References

[1] Paul W Barnes, Thomas Matthew Robson, Richard G Zepp, Janet F Bornman, MAK Jansen, R Ossola, Q-W Wang, SA Robinson, Bente Foereid, AR Klekociuk, et al. 2023. Interactive effects of changes in UV radiation and climate on terrestrial ecosystems, biogeochemical cycles, and feedbacks to the climate system. Photochemical & Photobiological Sciences 22, 5 (2023), 1049–1091.

[2] Meredith Bricken. 1991. Virtual Reality Learning Environments: Potentials and Challenges. 25, 3 (1991), 178–184.

[3] Julia Chatain, Manu Kapur, and Robert W. Sumner. 2023. Three Perspectives on Embodied Learning in Virtual Reality: Opportunities for Interaction Design. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems (Hamburg, Germany, 2023-04-19). ACM, 1–8.

[4] Paul Dourish. 2001. Where the Action Is: The Foundations of Embodied Interaction. The MIT Press.

[5] Ksenia Fedorova. 2013. Mechanisms of Augmentation in Proprioceptive Media Art. 16, 6 (2013).

[6] Laura Freina and Michela Ott. 2015. A literature review on immersive virtual reality in education: state of the art and perspectives. In The international scientific conference elearning and software for education, Vol. 1. 10–1007.

[7] Ken Haga and Tatsuya Sakai. 2023. Photosensory Adaptation Mechanisms in Hypocotyl Phototropism: How Plants Recognize the Direction of a Light Source. 74, 6 (2023), 1758–1769.

[8] Olivier Hamant and Bruno Moulia. 2016. How Do Plants Read Their Own Shapes? 212, 2 (2016), 333–337.

[9] Bruno Moulia, Stéphane Douady, and Olivier Hamant. 2021. Fluctuations Shape Plants through Proprioception. 372, 6540 (2021), eabc6868.

[10] Charles Spence. 2022. Proprioceptive art: How should it be defined, and why has it become so popular? i-Perception 13, 5 (2022), 20416695221120522.

[11] Filip Vandenbussche, Kimberley Tilbrook, Ana Carolina Fierro, Kathleen Marchal, Dirk Poelman, Dominique Van Der Straeten, and Roman Ulm. 2014. Photoreceptor-Mediated Bending towards UV-B in Arabidopsis. 7, 6 (2014), 1041–1052.

[12] Jan-Niklas Voigt-Antons, Tanja Kojic, Danish Ali, and Sebastian Moller. 2020. Influence of Hand Tracking as a Way of Interaction in Virtual Reality on User Experience. In 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX) (Athlone, Ireland, 2020-05). IEEE, 1–4.

[13] Alexander G Volkov, Tanya C Dunkley, Anthony J Labady, and Courtney L Brown. 2005. Phototropism and electrified interfaces in green plants. Electrochimica acta 50, 21 (2005), 4241–4247.

[14] Lee D Walsh, G Lorimer Moseley, Janet L Taylor, and Simon C Gandevia. 2011. Proprioceptive signals contribute to the sense of body ownership. The Journal of physiology 589, 12 (2011), 3009–3021.

Footnote

⁎Corresponding author

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s).

© 2025 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-1289-0/25/06.