Trust in AI is dynamically updated based on users' expectations

DOI: https://doi.org/10.1145/3706599.3719870

CHI EA '25: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, April 2025

Human reliance on artificial intelligence (AI) advice in decision-making varies, with both over- and under-reliance observed. Timing of AI advice has been proposed to address these biases. Additionally, trust in the AI also influences reliance. In a deepfake detection task, we investigated how AI advice affects human performance and decision-making. Using a large online participant pool, we compared task performance when AI advice was provided either concurrently with decisions or after an initial evaluation. We found that while AI advice improved deepfake detection, the timing of advice did not affect performance. Instead, we revealed, using computational modelling, that trust in the AI dynamically influenced participants' willingness to agree with its advice, based on expectations of its ability. These findings highlight the importance of developing appropriate trust in AI-powered decision support systems for effective human-AI collaboration, with applications in designing and testing decision-support tools for perceptual tasks.

ACM Reference Format:

Patrick Cooper, Alyssa Lim, Jessica Irons, Melanie McGrath, Huw Jarvis and Andreas Duenser. 2025. Trust in AI performance is dynamically updated based on users’ expectations. In Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (CHI EA '25), April 26-May 01, 2025, Yokohama, Japan. ACM, New York, NY, USA, 11 Pages. https://doi.org/10.1145/3706599.3719870

1 Introduction

Artificial intelligence (AI) is poised to radically change how human labour is undertaken. Domains long thought to be restricted to human cognition and creativity, such as computer programming, scientific writing, and art, have all seen the advent of AI tools capable of replicating human abilities. However, AI tools touted to replace human workers may perform best in collaboration with them. That is, rather than labour market competition between humans and machines, an alternate approach has been championed where humans and AI work together to yield best outcomes (here referred to as collaborative intelligence; [39,44,47].

While collaborative intelligence has potential to improve how work is undertaken, it is still unclear how to best design workflows to harness the relative strengths of humans and AI. Working with an AI-based decision-support system typically improves performance on a task compared to human decision-making alone [17,18,25,50]. However, this improvement frequently does not extend beyond levels of performance that the AI could achieve without human input. A growing body of evidence suggests that this performance ceiling is the result of trust in AI support [4,32,36,49]. Specifically, if trust is mis-calibrated, humans may misalign their reliance on the AI recommendation (i.e., their willingness to act on AI advice), leading to either excessive or insufficient reliance on AI recommendations. This ultimately hinders collaborative performance [5,12,21,45]. Therefore, a critical question is how trust in AI support systems develops, and how humans adjust their reliance on the system in response to this trust.

In human-comprised teams, trust arises from a complex set of antecedents broadly related to ability, integrity and benevolence of the other team members [31]. In contrast, the non-anthropomorphic nature of AI means humans cannot use benevolence to develop trust. Instead, humans may use objective performance of the AI as a yardstick to determine its trustworthiness [6,16,20]. In doing so, trust in AI may be susceptible to multiple cognitive biases, such as the availability heuristic, where the most recent exposure to AI performance will be given unbalanced weighting when developing trust [9,24,27]. This has the potential to reduce reliance on the decision support, particularly if the advice provided was incorrect.

Attempts to overcome unproductive biases in interactions with AI (e.g., over-reliance; automation bias, under-reliance; algorithm aversion) have shown mixed effectiveness. One popular approach has been to change how the human approaches the decision-making task by forcing them to rely less on heuristics and more on critical assessment of the AI advice. Such approaches fall under the umbrella of cognitive forcing, where interventions aim to shift people from heuristic, automatic thinking styles (e.g., agree with the AI) to effortful, considered thinking styles (e.g., is the AI suggestion in line with my own expertise?). Approaches to inducing cognitive forcing vary; two-step decision making where a human decision is made prior to AI advice [4,13,25], a slow algorithm that forces the human to wait before receiving AI advice [4,38,42], anchoring and framing approaches [30,35] and providing explanations for the AI's suggestion [49]. To date, their efficacy in calibrating appropriate reliance on AI remains unclear [4,13,14,42,46]. Given the diverse range of tasks cognitive forcing interventions have used, alongside the variable nature of the support systems, the efficacy may be context dependent. Thus, knowing what workflow properties allow effective intervention is vital.

In this study, we aimed to see how trust development impacted AI-assisted decision making and whether cognitive forcing paradigms influence trust development and decision performance. Participants performed a deepfake detection task, a perceptual decision-making task that has consistently been shown as difficult for humans to perform accurately [3,29,40] but is could be suited for AI collaboration. Deepfakes are artificially generated media, often of human faces, that aim to be a convincing but false depiction of reality. Here, we expected that working with an AI assistant would improve the accuracy of human decision-making (i.e., greater accuracy on the task). We also examined the role of cognitive forcing and trust on decision-making. Specifically, if participants rely on heuristics to solve deepfake classification (e.g., agree with the AI's advice), using cognitive forcing could change their decision-making, leading to appropriate agreement with the AI, and consequently, improved accuracy. Additionally, higher trust in the AI's advice will be associated with higher agreement with it, likewise leading to improved accuracy when the AI is correct, but also errors due to overreliance when the AI is incorrect.

To better understand how trust changes over time and whether this was also impacted by cognitive forcing, we explored these questions using a reinforcement learning computational model. Reinforcement learning models have a robust history in uncovering subjective valuations that drive human decision-making [7,10] and are well suited to examine preference-based decisions. Briefly, in reinforcement learning models, choices are made based on the estimated value of different alternatives, with the highest value option being chosen. The value is based on the expected outcomes of each choice. Reinforcement learning theory holds that feedback from previous choices are used to update individuals' predictions for future choices. We adapted this framework to estimate the value of relying on an AI decision support tool's advice. This work contributes novel insights into trust in AI. We found that, rather than what is usually reported in the literature, trust in AI was dynamically updated based on the user's expectation of AI accuracy, rather than the AI's actual performance.

2 Methods

2.1 Participants

Three hundred participants from the United States self-selected into the current study via the online participant recruitment platform Prolific [37]. Sample size was determined a priori using a power analysis, which indicated that 80% power would be achieved for detecting a small-to-medium effect size for interaction terms in our design. After complete data was returned, a final set of 285 participants (mean age = 34.84 ± 12.26; 102 males, 172 females, 11 unknown) were retained. Participants were reimbursed at £6.60/hr and provided informed consent prior to undertaking the study. The experiment was approved by CSIRO's Social and Interdisciplinary Science Human Research Ethics Committee (245/23).

2.2 Procedure

Stimuli were presented using jsPsych v7.3 [8] and were delivered to participants via an in-house server using JATOS [26]. Participants performed a deepfake classification task, where a face was presented, and participants were asked to decide if the face was ‘real’ or ‘fake’ (Fig. 1A). Fifty genuine faces were sourced from the Flickr-Faces-HQ (FFHQ) dataset [22] and 50 artificially generated faces from the StyleGAN2 dataset [23] from Nvidia Labs. Critically, the deepfakes were created after being trained on the FFHQ database. Author AL selected an ethnically and gender diverse set of faces. In a separate pilot study, human classification accuracy for this set of selected faces was 66.45%. To aid in their decision, we presented advice from an AI assistant which suggested that the face was likely to be real or fake. Note, this AI was simply an algorithm that provided the correct image identity ∼75% of the time, a level deemed appropriate from results of a pilot version of the task, used in previous cognitive forcing work [4], and similar to that shown to facilitate human-AI collaboration [48]. In practice, AI accuracy was slightly less than 75% due to sampling stratification and the exclusion of training trials from analyses. After deciding the nature of faces, participants rated their confidence in their own detection ability, confidence in the AI's detection ability and trust in the AI assistant. Finally, feedback about whether their decision was correct was presented. Feedback was deliberately presented after confidence ratings to reduce participants calibrating their subjective ratings toward the objective feedback of their performance [2].

The study used a between-subjects design. Participants were randomly assigned into equal sized groups (n=150) associated with the different decision step combinations1 (Fig. 1B). First, for the one-step condition, AI advice and the face image were presented contemporaneously, allowing participants to incorporate AI advice and their own judgment when making their decision. In contrast, for the two-step condition, participants first made their classification choice without AI advice and then were presented the same image alongside AI advice requiring a second decision. This two-step decision served as our cognitive forcing manipulation.

Prior to the experiment, participants were given brief instructions and provided three practice trials to familiarize themselves with the task. For the experiment, on each trial, participants were first presented with a preparation screen for 750 ms, with a prompt to ‘Get Ready’ and a reminder of the response key mapping. Next, a blank screen was presented for 250 ms, replaced with the appropriate decision stage screen dependent on the AI advice condition. Real vs. fake decisions were made with key presses ‘z’ and ‘/’ respectively. After the decision was recorded, participants used their mouse to rate their 1) own confidence “Confidence in your detection ability for that face?”, 2) confidence in the AI “Confidence in the AI's detection ability for that face?” and 3) trust in the AI “I can trust the AI assistant.” For confidence, participants rated on a 4-point scale, going from 1 = “Complete Guess” to 4 = “Absolutely Certain” and for trust participants responded with a 5-point scale: 1 = “Not at all” to 5 = “Completely.” After ratings, feedback about the participant's decision was presented, with an accompanying confetti animation for correct answers and a sad robot face for incorrect answers.

2.3 Data analyses

Only valid trials from the experiment were included in analyses. Valid trials comprised those with RTs > 200 ms and RTs < mean RT + 3 SD. An average of 1.47 ± 0.93 trials were removed per participant from the overall analyses. Analyses were undertaken using the R programming language [41]. To assess the influence of AI assistance on deepfake detection, we compared performance without AI advice against the one-step and two-step conditions. As we did not include an explicit group without AI, we used the decisions in the two-step condition when participants did not receive AI advice (i.e., their first response).

Next, we used mixed effects modelling to assess the predictors of deepfake detection ability and trust within our design. This was due to the design of our cognitive forcing manipulation, where some participants would see identical stimuli (i.e., the two-step condition) [34]. Specifically, we used the lme4 package [1] to perform logistic mixed effects modelling to predict detection accuracy and agreement as a function of assistance type and trust. Agreement rates were defined as the proportion of trials in which the participants made the same decision as the AI. All models included Participant and Stimulus as random effects and Trial and the respective variable of interest as fixed effects.

Finally, to examine how trust developed, we employed a computational model that estimated how participants updated their trust scores based on the veracity of AI advice. For these updates, we implemented a reinforcement learning framework based on prediction errors (i.e., a Rescorla-Wagner learning rule [43]). We estimated a participant's current belief in the expected veracity of AI advice, according to:

(1)

(2)

Next, to determine the relationship between trust and these violations in expected AI veracity (i.e., prediction errors), we used linear mixed-effects modelling to predict observed trust scores from the model estimates of prediction errors.

General fitness of models was assessed using a likelihood-ratio test and where appropriate we used Akaike Information Criterion (AIC) fit estimates to compare models.

3 Results

3.1 Deepfake detection

Overall, participants were able to detect if a face was real or deepfake successfully on 67.86% ± 2.41 of trials, significantly greater than chance (χ2 = 1646, p < .001)2 . This is comparable to the AI assistant's mean accuracy of 72.76% ± 2.3 but does not realize collaborative gains by exceeding it. We next compared accuracy as a function of AI assistance type (i.e., None, One-step, Two-step) to determine whether this lack of collaborative improvement was driven by heuristic-based decision-making, and consequently rectified by cognitive forcing. Given the lack of a distinct baseline group, and that participants saw different combinations of stimuli (which may have varied in classification difficulty) we used a logistic mixed effects model that included Assistance Type as a factor to control for such confounds:

(3)

A likelihood-ratio test demonstrated that the model including Assistance Type was a better fit for the data than one without (χ2(2) = 15.9, p < .001). Model estimates revealed that having any AI assistance improved accuracy on the task, with both one-step (β = .033, SE = .012, t(502) = 2.86, p = .005) and two-step (β = .041, SE = .011, t(1586) = 3.8, p < .001) more accurate than the decisions made without AI support. However, two-step decision making (reflecting cognitive forcing) did not lead to significantly greater accuracy than one-step decision making (β = 6.8×10-4, SE = .012, t(502) =.58, p = .56).

We similarly assessed how rates of agreement with AI recommendations were influenced by AI assistance type. Here, we applied a similar model:

(4)

3.2 Trust predicts performance

Next, we tested whether trust in the AI assistant was related to performance on the task. First, we examined if classification accuracy was associated with trust:

(5)

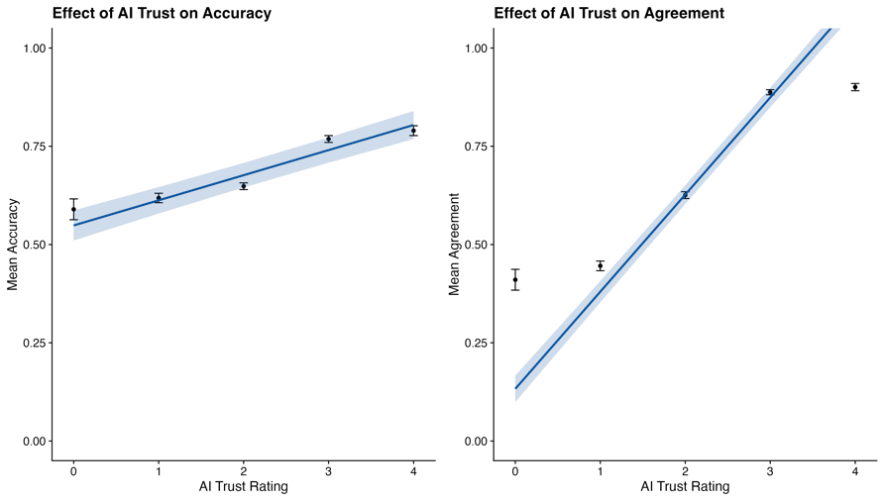

A likelihood-ratio test demonstrated the model including Trust was a better fit for the data than one without (χ2(1) = 152.1, p < .001). Model estimates revealed that increasing trust in the AI was associated with increased human decision accuracy, and that at the highest levels of human trust, collaborative performance exceeded the accuracy of the AI system alone (β = .064, SE = 5.041 ×10-3, t(2047) = 12.67, p < .001, see Fig. 2).

These findings suggest that trust may be driving underperformance of human-AI pairs, that is, participants with low trust in the AI recommendations are not agreeing with the system despite its higher accuracy. To examine this, we tested whether trust predicted an increase in the likelihood of agreeing with the AI's recommendation. Here we ran two models:

(6)

The model including Trust was a better fit for the data than the null model (χ2(1) = 1741.3, p < .001), with greater trust in the AI increasing the probability of agreeing with AI (β = .247, SE = 5.391 ×10-3, t(7043) = 45.84, p < .001, see Fig. 2). Taken together, higher levels of AI trust were associated with both increased agreement with AI suggestions and increased accuracy.

3.3 The relationship between trust and reliance is dynamically calibrated

Given that trust in the AI assistant was a strong predictor of successful deepfake classification, we explored how trust was established and updated over the course of the task. Although participants were informed a priori about the AI's typical accuracy, trust was not a stable value throughout the task, suggesting factors beyond explicit performance metrics of the AI influenced trust. Instead, participants might dynamically adjust their trust - and their willingness to rely on AI advice - based on their recent experiences with the AI's performance. Such adjustments could occur gradually or, as suggested in previous work [9,24], rapidly in response to recent experience. To examine this possibility, we compared two mechanisms of trust adjustment:

- Reactive updating (AI advice model): Trust increases or decreases based solely on the accuracy of AI advice in the previous trial, independent of prior expectations. For example, if the AI makes an error, trust is reduced.

- Expectation-based updating (prediction error model): Trust dynamically aligns with participants' internal expectations of the AI's performance. In this case, deviations from expected accuracy - termed prediction errors - drive changes in trust. For example, if the AI is expected to make an error, and it does, trust may not be negatively impacted.

To formalise this second mechanism, we adapted the Rescorla-Wagner learning rule as detailed in Equations 1 and 2.

Because prediction errors are signed, this model captures both the magnitude and direction (valence) of discrepancies: larger δ values indicate greater surprise and drive larger updates in trust, either positively or negatively, depending on whether the AI performed better or worse than expected.

To determine how these factors influenced trust in AI, we ran mixed-effects linear regressions with the format:

(7)

First, we evaluated the two models separately. For the Reactive updating AI advice model, we found that trust ratings significantly increased when AI advice was accurate on the previous trial (Table 1). This model provided a significantly better fit than a null model with no predictors (χ2(1) = 18.217, p < .001) and demonstrated strong correspondence between observed and predicted (i.e., derived from modelling) trust (r2 = .472).

| Model | Predictors | β | SE | p |

|---|---|---|---|---|

| Reactive updating (AI advice model) | AI Advice t−1, Trial | .082 | .019 | 1.98 ×10-5 |

| Expectation-based updating (prediction error model) | Prediction error (δ), Trial | 3.008 | .226 | 2 ×10-16 |

| Note: effect coefficients (β) shown for significant predictors (in bold). |

||||

Next, for the prediction error model, we found including prediction error was a significant improvement on modelling vs. the null model (χ2(1) = 175, p < .001). Our modelling revealed trust ratings significantly increased with larger prediction errors (Table 1). That is, reported trust in AI increased when AI performed better than expected. Predicted trust values from this model were strongly correlated with observed trust values (r2 = .482).

Finally, we compared the AI Advice model and the Prediction Error model directly. The Prediction Error model provided a better fit to the data than the AI Advice model (ΔAIC=161.68), suggesting trial-by-trial prediction errors better explain changes in trust than reactive updates based solely on previous advice accuracy.

4 Discussion

With advances in AI, teams of the future will likely comprise both human and artificial agents. Understanding how human decisions are influenced by interaction with AI is vital to develop appropriate workflows in such teams. In this study, we examined whether cognitive forcing influenced human decision-making in an AI-supported deepfake classification task and how trust in the AI assistant affected performance. In agreement with previous work [15], we found providing AI advice improved deepfake detection ability. We did not find evidence that cognitive forcing led to additional improvements in performance, with both one-step and two-step interventions producing similar performance gains. Instead, participants’ trust in the AI advice was a key predictor in task performance. Higher levels of trust in the AI advice were associated with both a higher likelihood of agreement with the AI advice, and increased deepfake detection accuracy. The higher tendency to agree with AI among participants with higher trust is likely to have contributed to overall performance, as simply agreeing with the AI would produce a boost in accuracy given the AI assistant performed better than a human did alone for this type of task.

We also reported novel evidence that trust in AI, which was critical for performance in the deepfake detection task, was dynamically updated based on prediction errors. Like other cases of behavioural adjustments modelled with reinforcement learning (e.g., willingness to invest [19]; or to seek information; [28]), our findings revealed deviations from the expected performance of AI were strongly coupled with dynamic adjustments of trust in AI. And importantly, our findings provide novel evidence that this expected performance of the AI explained trust calibration better than solely AI accuracy.

4.1 Trust is critical in human-AI workflows

Our findings support growing evidence that trust in an AI assistant critically influences human decision making. Trust calibration has been argued to be essential for appropriate reliance on AI, with mis-calibration leading to over- and/or under-reliance on the decision support system [4,32,48,49]. However, to date many trust measures are temporally disconnected from the AI advice, e.g., after a block of trials (e.g., [4]), at the start/conclusion of the experiment [33] or derived from behavioural/physical measures [36,49]. Trust in AI appears to develop differently than trust in humans, with people tending to have a biased view of AI capabilities prior to use and then rapidly distrusting AI upon observation of mistakes [11,33]. We found that these rapid changes in trust ratings impact performance, including both task accuracy and agreement with the AI. Computational modelling approaches, like those performed here, allow individual differences in trust trajectories to be examined. While we did not examine what subjective factors influenced these individual differences, future research may consider exploring the nature of variable trust setting.

4.2 Cognitive forcing had minimal influence on task performance

Despite AI advice improving task performance, our results did not support the idea that cognitive forcing improves reliance on AI. Given previous work has shown mixed results for cognitive forcing, task context may be a factor in whether cognitive forcing changes AI-supported decision making. Our task was a perceptual decision-making task that relied on forced choice (i.e., participants could only choose between real and fake). In this scenario, using heuristics may be beneficial to task performance. For instance, if participants have a set of features they consider markers of a deepfake, then making the decision about real or fake could be based on detecting the presence of such features. Indeed, previous work has shown that human deepfake detection often relies on particular local features in a face (e.g., smooth texture on skin, eye gaze direction [3]). Therefore, participants may be motivated to see if the AI agreed with their successful detection of the feature space they searched, rather than looking to the AI for advice per se. Indeed, this would fit with our pattern of results revealing high but not ceiling agreement rates with AI. In such a case, cognitive forcing may not be appropriate to influence decision making.

Additionally, our task was a difficult task for humans. Agreeing with an AI that has accuracy greater than the typical human respondent would have been an effective strategy to employ. Cognitive forcing, when found to be effective, is often shown to limit over-reliance on the AI advice [4]. While over-relying on the AI would have led to accuracy on par with the AI support, this still would be an improvement on typical performance. As such, it may be that there is an ideal context where the discrepancy between human and AI capabilities is at a vital level that over-relying becomes a problematic strategy and, in this situation, cognitive forcing may provide benefits.

5 Conclusion

Our results revealed an AI assistant can be useful in aiding human decision making when detecting deepfakes. We found the timing of this AI advice was immaterial to improved deepfake detection but critically, that appropriate trust in the AI was key. Participants dynamically adjust their tendency to agree with AI advice based on their recent trust levels, and dynamically adjust their trust in AI based on their expectations of the AI-advice accuracy. Our results support the notion that trust in human-AI teams is a critical component of future workflows where humans and AI work together to solve problems.

Acknowledgments

AL was supported by a Data61 Vacation Student Internship. We thank Dan Young for support setting up the experiment server.

References

- 1. Douglas Bates, Martin Mächler, Ben Bolker, and Steve Walker. 2014. Fitting Linear Mixed-Effects Models using lme4. Retrieved February 6, 2024 from http://arxiv.org/abs/1406.5823

- 2. Nadin Beckmann, Jens F. Beckmann, and Julian G. Elliott. 2009. Self-confidence and performance goal orientation interactively predict performance in a reasoning test with accuracy feedback. Learning and Individual Differences 19, 2: 277–282. https://doi.org/10.1016/j.lindif.2008.09.008

- 3. Sergi D Bray, Shane D Johnson, and Bennett Kleinberg. 2023. Testing human ability to detect ‘deepfake’ images of human faces. Journal of Cybersecurity 9, 1: tyad011. https://doi.org/10.1093/cybsec/tyad011

- 4. Zana Buçinca, Maja Barbara Malaya, and Krzysztof Z. Gajos. 2021. To Trust or to Think: Cognitive Forcing Functions Can Reduce Overreliance on AI in AI-assisted Decision-making. Proceedings of the ACM on Human-Computer Interaction 5, CSCW1: 1–21. https://doi.org/10.1145/3449287

- 5. Adrian Bussone, Simone Stumpf, and Dympna O'Sullivan. 2015. The Role of Explanations on Trust and Reliance in Clinical Decision Support Systems. In 2015 International Conference on Healthcare Informatics, 160–169. https://doi.org/10.1109/ICHI.2015.26

- 6. Vivienne Bihe Chi and Bertram F. Malle. 2023. People Dynamically Update Trust When Interactively Teaching Robots. In Proceedings of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, 554–564. https://doi.org/10.1145/3568162.3576962

- 7. P. Dayan and N. D. Daw. 2008. Decision theory, reinforcement learning, and the brain. Cognitive, Affective, & Behavioral Neuroscience 8, 4: 429–453. https://doi.org/10.3758/CABN.8.4.429

- 8. Joshua R. De Leeuw. 2015. jsPsych: A JavaScript library for creating behavioral experiments in a Web browser. Behavior Research Methods 47, 1: 1–12. https://doi.org/10.3758/s13428-014-0458-y

- 9. Berkeley J Dietvorst, Joseph P Simmons, and Cade Massey. 2015. Algorithm Aversion: People Erroneously Avoid Algorithms After Seeing Them Err. Journal of Experimental Psychology: General.

- 10. Ray J. Dolan and Peter Dayan. 2013. Goals and Habits in the Brain. Neuron 80, 2: 312–325. https://doi.org/10.1016/j.neuron.2013.09.007

- 11. Mary T. Dzindolet, Linda G. Pierce, Hall P. Beck, and Lloyd A. Dawe. 2002. The Perceived Utility of Human and Automated Aids in a Visual Detection Task. Human Factors: The Journal of the Human Factors and Ergonomics Society 44, 1: 79–94. https://doi.org/10.1518/0018720024494856

- 12. Mary T. Dzindolet, Linda G. Pierce, Hall P. Beck, Lloyd A. Dawe, and B. Wayne Anderson. 2001. Predicting Misuse and Disuse of Combat Identification Systems. Military Psychology 13, 3: 147–164. https://doi.org/10.1207/S15327876MP1303_2

- 13. Riccardo Fogliato, Shreya Chappidi, Matthew Lungren, Paul Fisher, Diane Wilson, Michael Fitzke, Mark Parkinson, Eric Horvitz, Kori Inkpen, and Besmira Nushi. 2022. Who Goes First? Influences of Human-AI Workflow on Decision Making in Clinical Imaging. In 2022 ACM Conference on Fairness, Accountability, and Transparency, 1362–1374. https://doi.org/10.1145/3531146.3533193

- 14. Krzysztof Z. Gajos and Lena Mamykina. 2022. Do People Engage Cognitively with AI? Impact of AI Assistance on Incidental Learning. In 27th International Conference on Intelligent User Interfaces, 794–806. https://doi.org/10.1145/3490099.3511138

- 15. Matthew Groh, Ziv Epstein, Chaz Firestone, and Rosalind Picard. 2022. Deepfake detection by human crowds, machines, and machine-informed crowds. Proceedings of the National Academy of Sciences 119, 1: e2110013119. https://doi.org/10.1073/pnas.2110013119

- 16. Kevin Anthony Hoff and Masooda Bashir. 2015. Trust in Automation: Integrating Empirical Evidence on Factors That Influence Trust. Human Factors: The Journal of the Human Factors and Ergonomics Society 57, 3: 407–434. https://doi.org/10.1177/0018720814547570

- 17. Marijn Janssen, Martijn Hartog, Ricardo Matheus, Aaron Yi Ding, and George Kuk. 2022. Will Algorithms Blind People? The Effect of Explainable AI and Decision-Makers’ Experience on AI-supported Decision-Making in Government. Social Science Computer Review 40, 2: 478–493. https://doi.org/10.1177/0894439320980118

- 18. Matthew L. Jensen, Paul Benjamin Lowry, and Jeffrey L. Jenkins. 2011. Effects of Automated and Participative Decision Support in Computer-Aided Credibility Assessment. Journal of Management Information Systems 28, 1: 201–234. https://doi.org/10.2753/MIS0742-1222280107

- 19. Kahneman, D and Tversky, A. 1979. Prospect Theory: An Analysis of Decision under Risk. Econometrica 47, 2: 263–291.

- 20. Alexandra D. Kaplan, Theresa T. Kessler, J. Christopher Brill, and P. A. Hancock. 2023. Trust in Artificial Intelligence: Meta-Analytic Findings. Human Factors: The Journal of the Human Factors and Ergonomics Society 65, 2: 337–359. https://doi.org/10.1177/00187208211013988

- 21. Steven E Kaplan, J. Hal Reneau, and Stacey Whitecotton. 2001. The effects of predictive ability information, locus of control, and decision maker involvement on decision aid reliance. Journal of Behavioral Decision Making 14, 1: 35–50. https://doi.org/10.1002/1099-0771(200101)14:1<35::AID-BDM364>3.0.CO;2-D

- 22. Tero Karras, Samuli Laine, and Timo Aila. 2019. A Style-Based Generator Architecture for Generative Adversarial Networks. Retrieved February 6, 2024 from http://arxiv.org/abs/1812.04948

- 23. Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. 2020. Analyzing and Improving the Image Quality of StyleGAN. Retrieved February 6, 2024 from http://arxiv.org/abs/1912.04958

- 24. Antino Kim, Mochen Yang, and Jingjing Zhang. 2023. When Algorithms Err: Differential Impact of Early vs. Late Errors on Users’ Reliance on Algorithms. ACM Transactions on Computer-Human Interaction 30, 1: 1–36. https://doi.org/10.1145/3557889

- 25. Vivian Lai and Chenhao Tan. 2019. On Human Predictions with Explanations and Predictions of Machine Learning Models: A Case Study on Deception Detection. In Proceedings of the Conference on Fairness, Accountability, and Transparency, 29–38. https://doi.org/10.1145/3287560.3287590

- 26. Kristian Lange, Simone Kühn, and Elisa Filevich. 2015. "Just Another Tool for Online Studies” (JATOS): An Easy Solution for Setup and Management of Web Servers Supporting Online Studies. PLOS ONE 10, 6: e0130834. https://doi.org/10.1371/journal.pone.0130834

- 27. John Lee and Neville Moray. 1992. Trust, control strategies and allocation of function in human-machine systems. Ergonomics 35, 10: 1243–1270. https://doi.org/10.1080/00140139208967392

- 28. Romain Ligneul, Martial Mermillod, and Tiffany Morisseau. 2018. From relief to surprise: Dual control of epistemic curiosity in the human brain. NeuroImage 181: 490–500. https://doi.org/10.1016/j.neuroimage.2018.07.038

- 29. Zhengzhe Liu, Xiaojuan Qi, and Philip H.S. Torr. 2020. Global Texture Enhancement for Fake Face Detection in the Wild. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 8057–8066. https://doi.org/10.1109/CVPR42600.2020.00808

- 30. Poornima Madhavan and Douglas A. Wiegmann. 2005. Cognitive Anchoring on Self-Generated Decisions Reduces Operator Reliance on Automated Diagnostic Aids. Human Factors: The Journal of the Human Factors and Ergonomics Society 47, 2: 332–341. https://doi.org/10.1518/0018720054679489

- 31. Roger C Mayer and James H Davis. 1995. An Integrative Model of Organizational Trust. The Academy of Management Review 20, 3: 709–734.

- 32. Nathan J McNeese, Mustafa Demir, Erin Chiou, Nancy Cooke, and Giovanni Yanikian. 2019. Understanding the Role of Trust in Human-Autonomy Teaming. Proceedings of the 52nd Hawaii International Conference on System Sciences.

- 33. Stephanie M. Merritt and Daniel R. Ilgen. 2008. Not All Trust Is Created Equal: Dispositional and History-Based Trust in Human-Automation Interactions. Human Factors: The Journal of the Human Factors and Ergonomics Society 50, 2: 194–210. https://doi.org/10.1518/001872008X288574

- 34. Lotte Meteyard and Robert A.I. Davies. 2020. Best practice guidance for linear mixed-effects models in psychological science. Journal of Memory and Language 112: 104092. https://doi.org/10.1016/j.jml.2020.104092

- 35. Mohammad Naiseh, Reem S. Al-Mansoori, Dena Al-Thani, Nan Jiang, and Raian Ali. 2021. Nudging through Friction: An Approach for Calibrating Trust in Explainable AI. In 2021 8th International Conference on Behavioral and Social Computing (BESC), 1–5. https://doi.org/10.1109/BESC53957.2021.9635271

- 36. Kazuo Okamura and Seiji Yamada. 2020. Adaptive trust calibration for human-AI collaboration. PLOS ONE 15, 2: e0229132. https://doi.org/10.1371/journal.pone.0229132

- 37. Stefan Palan and Christian Schitter. 2018. Prolific.ac—A subject pool for online experiments. Journal of Behavioral and Experimental Finance 17: 22–27. https://doi.org/10.1016/j.jbef.2017.12.004

- 38. Joon Sung Park, Rick Barber, Alex Kirlik, and Karrie Karahalios. 2019. A Slow Algorithm Improves Users’ Assessments of the Algorithm's Accuracy. Proceedings of the ACM on Human-Computer Interaction 3, CSCW: 1–15. https://doi.org/10.1145/3359204

- 39. Jeannette Paschen, Matthew Wilson, and João J. Ferreira. 2020. Collaborative intelligence: How human and artificial intelligence create value along the B2B sales funnel. Business Horizons 63, 3: 403–414. https://doi.org/10.1016/j.bushor.2020.01.003

- 40. Ethan Preu, Mark Jackson, and Nazim Choudhury. 2022. Perception vs. Reality: Understanding and Evaluating the Impact of Synthetic Image Deepfakes over College Students. In 2022 IEEE 13th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), 0547–0553. https://doi.org/10.1109/UEMCON54665.2022.9965697

- 41. R Core Team. 2023. R: A language and environment for statistical computing. Retrieved from https://www.R-project.org

- 42. Charvi Rastogi, Yunfeng Zhang, Dennis Wei, Kush R. Varshney, Amit Dhurandhar, and Richard Tomsett. 2022. Deciding Fast and Slow: The Role of Cognitive Biases in AI-assisted Decision-making. Proceedings of the ACM on Human-Computer Interaction 6, CSCW1: 1–22. https://doi.org/10.1145/3512930

- 43. Robert A. Rescorla and Wagner, Allan R. 1972. A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and non-reinforcement. Classical conditioning, Current research and theory 2: 64–69.

- 44. Emma Schleiger, Claire Mason, Claire Naughtin, Andrew Reeson, and Cecile Paris. 2023. Collaborative Intelligence: A scoping review of current applications. https://doi.org/10.32388/RZGEPB

- 45. Sebastian W. Schuetz, Zachary R. Steelman, and Rhonda A. Syler. 2022. It's not just about accuracy: An investigation of the human factors in users’ reliance on anti-phishing tools. Decision Support Systems 163: 113846. https://doi.org/10.1016/j.dss.2022.113846

- 46. Heliodoro Tejeda, Aakriti Kumar, Padhraic Smyth, and Mark Steyvers. 2022. AI-Assisted Decision-making: a Cognitive Modeling Approach to Infer Latent Reliance Strategies. Computational Brain & Behavior 5, 4: 491–508. https://doi.org/10.1007/s42113-022-00157-y

- 47. H James Wilson and Paul R Daugherty. 2018. Collaborative Intelligence: Humans and AI Are Joining Forces. Harvard Business Review.

- 48. Kun Yu, Shlomo Berkovsky, Ronnie Taib, Jianlong Zhou, and Fang Chen. 2019. Do I Trust My Machine Teammate? An Investigation from Perception to Decision.

- 49. Yunfeng Zhang, Q. Vera Liao, and Rachel K. E. Bellamy. 2020. Effect of Confidence and Explanation on Accuracy and Trust Calibration in AI-Assisted Decision Making. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, 295–305. https://doi.org/10.1145/3351095.3372852

- 50. Lina Zhou, Judee K. Burgoon, Douglas P. Twitchell, Tiantian Qin, and Jay F. Nunamaker Jr. 2004. A Comparison of Classification Methods for Predicting Deception in Computer-Mediated Communication. Journal of Management Information Systems 20, 4: 139–166. https://doi.org/10.1080/07421222.2004.11045779

Footnote

1The study also included motivational manipulations, such as speeded vs. accurate responding which were assigned to participants. However, for this study we collapsed across these levels of manipulation to focus on the cognitive forcing aspect of the experiment.

2For accuracy, we only include the final responses from both one-step and two-step conditions (i.e., only when AI support is included).

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s).

CHI EA '25, Yokohama, Japan

© 2025 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-1395-8/2025/04

DOI: https://doi.org/10.1145/3706599.3719870