Challenges of Music Score Writing and the Potentials of Interactive Surfaces

DOI: https://doi.org/10.1145/3613904.3642079

CHI '24: Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, May 2024

Composers use music notation programs throughout their creative process. Those programs are essentially elaborate structured document editors that enable composers to create high-quality scores by enforcing musical notation rules. They effectively support music engraving, but impede the more creative stages in the composition process because of their lack of flexibility. Composers thus often combine these desktop tools with other mediums such as paper. Interactive surfaces that support pen and touch input have the potential to address the tension between the contradicting needs for structure and flexibility. We interview nine professional composers. We report insights about their thought process and creative intentions, and rely on the “Cognitive Dimensions of Notations” framework to capture the frictions they experience when materializing those intentions on a score. We then discuss how interactive surfaces could increase flexibility by temporarily breaking the structure when manipulating the notation.

ACM Reference Format:

Vincent Cavez, Catherine Letondal, Emmanuel Pietriga, and Caroline Appert. 2024. Challenges of Music Score Writing and the Potentials of Interactive Surfaces. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI '24), May 11–16, 2024, Honolulu, HI, USA. ACM, New York, NY, USA, 16 pages. https://doi.org/10.1145/3613904.3642079

1 INTRODUCTION

Music notations provide composers with a way to represent visually what they have in their mind. The modern staff notation in particular is used in a broad variety of genres. It lets composers share their work with other musicians, and do so with minimal ambiguity. But for many composers the notation also plays a key role throughout the creative process. It is a means for them to capture early ideas, to expand and to iterate on what they have already written. When composing on a computer, music notation programs are thus not only music engraving tools, but actual creativity support tools that composers use for a variety of activities [39], from the capture of “germinal ideas” [3] to the communication and archival of the final piece.

Music notation software can be seen as elaborate structured document editors that share many similarities with visual programming languages [29]: music symbols are essentially graphical primitives structured according to constraints captured by a multi-dimensional grammar [16]. As such, these programs enable the creation of syntactically well-formed compositions. By enforcing the notation's syntactic rules and automatically laying out symbols to ensure good readability, they produce high-quality scores that are ready to be engraved. But at the same time, enforcing those rules impedes the more creative stages of the composition process. These stages call instead for flexibility in the interactive manipulation of symbols and, beyond symbols, of other types of content that might be relevant to insert in a score during the composition process. These include freeform annotations, pictures, audio samples, and foreign score fragments used for reference only.

This lack of flexibility often leads composers to use not only software but paper as well [25], more particularly in the early stages of the creative process [43]. Composers may face “abrupt shifts” [14] when switching medium, however. This will sometimes lead them to keep editing on the computer even if paper would be better suited for a particular activity.

The HCI literature suggests that interactive surfaces that support pen and touch input – such as the Apple iPad or the Microsoft Surface Pro – could combine the best of both paper and computer mediums to resolve tensions between the need for structure and the need for flexibility (see [9, 38] for recent examples). But while a couple of commercial music notation applications have been designed for interactive surfaces (for instance StaffPad [41] and Symphony Pro [32]), they mostly adhere to the same WIMP-oriented interaction model as desktop applications, treating pen and touch as generic pointing devices akin to a mouse or trackpad. We believe that this approach is suboptimal, and advocate for a more comprehensive redesign of the whole interaction model to reveal the full potential of interactive surfaces. Such a design process requires close observation of composers’ creative processes and an analysis of previous work that has leveraged the distinctive properties inherent to interactive surfaces for other application domains.

This paper reports on the first phase of this design process, in which we interviewed nine professional music composers following a semi-structured qualitative study protocol [6]. Our goal was to understand their creative process, paying particular attention to how they use music notation software. We wanted to understand what challenges they face, and how they address them. We first report high-level observations to capture their thought process and creative intentions. We then perform a detailed analysis, guided by the “Cognitive Dimensions of Notations” framework adapted to music [29] in order to capture the challenges they face when materializing those intentions. Paving the way for future interaction design work, we conclude with a discussion informed by prior research on interactive surfaces, exploring their potential to address the usability challenges previously identified, and what opportunities they provide to better support composers’ creative process.

2 BACKGROUND AND MOTIVATION

The design and development of tools to support creativity [11] is a very active topic in the HCI community. We focus here on music composers specifically and discuss prior work that aimed at understanding composers’ creative process – with and without computers – and creativity support tools that are not only aimed at music composers but that involve writing and editing music on a score using the common staff notation. We then provide background information about the properties of pen and touch input to motivate our exploration of interactive surfaces as devices to interactively manipulate music notation.

2.1 Qualitative Studies about the Composition Process

Bennett [3] conducted one of the first qualitative studies about the musical creation process, interviewing eight professional composers. Each composer has their own process, but high-level activities can be identified across composers. The discovery of a “germinal idea” often comes first, followed by the drafting of this idea, elaborating upon and refining it – what is identified as the writing and rewriting phases in another study by Roels [35]. Rosselli del Turco and Dalsgaard [39] provide a good overview of additional studies that aimed at better understanding composers’ creative process [12, 44], and contribute their own study about how music artists capture and manage their ideas. They draw a parallel with personal information management tools, and discuss recommendations for the design of tools aimed at supporting idea management in music.

Key takeaways from the above studies of particular interest here can be summarized as follows:

- even if the creative process can be characterized in terms of overall strategy [12] and high-level activities [3, 35, 44], it varies significantly from one composer to another and across music genres [12];

- the process is highly iterative [10], composers writing and rewriting [4, 35], spending much time elaborating and refining their draft [3].

2.2 Creativity Support Tools

Studies in the HCI community about composers also emphasize the iterative nature of the music writing process [15, 25, 43]. These studies have had different goals. Following early observations about contemporary composers’ use of paper and computer by Letondal & Mackay [25], Tsandilas et al. developed Musink [43]. Musink is a computer-based composition environment that integrates sketching, gesturing and end-user programming in the same workflow thanks to interactive paper [21]. Garcia and colleagues further explored this line of research, designing creativity support tools that combine paper and computer programming to support contemporary composers’ creative process [13, 14] as well as to observe that process [15]. These works differ from our work in two ways. First, while interactive paper is very intriguing because it faithfully replicates the experience users have with physical paper, it also inherits drawbacks of physical paper. In particular, as opposed to marks on interactive surfaces, marks on interactive paper cannot be modified, moved or deleted once written. Second, these projects focused on a very specific community: contemporary composers. While these composers may use the staff notation to capture some ideas, they primarily rely on music programming tools such as Max [33] and OpenMusic [7], as well as on notations that they design themselves.

Closer to our research objectives, Leroy et al. [24] were among the first to develop an interactive system using an early form of optical music recognition [8] to let composers use handwriting to input music symbols on staves. From the very beginning, Leroy et al. explicitly state that this is “a system for composers, not for engravers,” observing that “composers are skilled in handwritten notation and editing and need to sketch out musical ideas rapidly while retaining the ability to make alterations at both local and global levels” [24]. Presto [1, 30] is another early pen-operated system for music score editing. The system was designed to facilitate the input of music symbols on staves, this time using a set of simple, predefined gestures rather than handwriting recognition. Several other manipulations were possible, such as moving elements, transposing or adding ornaments, but accessible via menus only. Current interactive surfaces have capabilities significantly more advanced compared to these early systems. In this paper, we advocate for a comprehensive reevaluation of the entire interaction model, broadening the scope beyond rudimentary music symbol input.

Coughlan and Johnson [11] discuss the tension between the desire for flexibility of composers and the constraints imposed by software tools that stems from interaction design choices made by the developers of those tools, and from the need for these tools to be able to interpret user input. While they made this observation about composition tools that do not use staff notation as the primary means to represent music, this tension clearly exists in music score editing software as well. Many of the programs used by composers (Finale, MuseScore, Sibelius) impose significant constraints on how composers can input and modify the notation, impeding the creative process as we discuss in detail in Section 5.

2.3 Interactive Surfaces for Music Composition and Beyond

Several music composition programs designed for the desktop can operate on interactive surfaces that support digital pen input, including Sibelius, Dorico, Flat, and Notion. A few programs have also been designed specifically to run on interactive surfaces, such as StaffPad [41] and Symphony Pro [32]. But both categories essentially rely on the traditional WIMP model of desktop programs, making very few adjustments to it. Pen and fingers are seen as generic pointing devices that can be used interchangeably, and composition programs treat them as mere alternatives to mouse or trackpad. They do not fully leverage their specific affordances and expressive power.

In this context, the pen is little more than a mouse with a single button, making numerous mode switches necessary. It still has interesting properties though, most notably when inking on a canvas as it better affords handwritten input. The few music composition programs designed specifically for interactive surfaces thus support handwritten input of common music symbols. This capability is highly valuable as it makes the experience closer to writing music on paper. But multiple constraints still impede the creative process. Two constraints relate to the ink recognition process. First, symbols need to be inked in a predefined order to ensure that the recognition engine will be able to interpret them correctly, imposing a particular way of writing on composers. Second, recognition is implemented as a greedy process, beautifying handwritten symbols as soon as a measure is complete and enforcing syntactic well-formedness rules on beautified symbols systematically. For instance, a measure must be filled before proceeding to the next one. The user experience is thus closer to writing music on paper than what desktop programs afford, but the flexibility associated with writing music freely on paper is lost. Those constraints limit composers’ freedom to experiment and jot ideas down [29]. Composers need to follow certain rules when inking the notation, and once input, the notation remains difficult to edit.
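The second constraint can be made concrete with a minimal Python sketch of a "measure must be filled" rule. This is only an illustration under stated assumptions, not the implementation of any of the applications cited above: the function names are hypothetical, durations are expressed as fractions of a whole note, and only a simple time signature is considered.

```python
from fractions import Fraction


def measure_capacity(beats: int, beat_unit: int) -> Fraction:
    """Total duration of one measure in whole-note units (e.g. 3/4 time -> 3/4)."""
    return Fraction(beats, beat_unit)


def is_measure_complete(durations, beats: int = 4, beat_unit: int = 4) -> bool:
    """True when the note and rest durations exactly fill the measure."""
    return sum(durations, Fraction(0)) == measure_capacity(beats, beat_unit)


def can_start_next_measure(measures, beats: int = 4, beat_unit: int = 4) -> bool:
    """Hypothetical greedy rule: every measure written so far must be full."""
    return all(is_measure_complete(m, beats, beat_unit) for m in measures)


quarter, half = Fraction(1, 4), Fraction(1, 2)

# A two-measure sketch in 4/4 whose second measure is left unfinished,
# as a composer jotting down an idea on paper might do.
sketch = [[half, quarter, quarter], [quarter, quarter]]
```

Under such a rule, `can_start_next_measure(sketch)` rejects the unfinished second measure, whereas paper would let the composer leave it open and move on.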

Besides music composition, prior work has investigated the use of pen input in a variety of application areas, identifying interesting properties of this modality when combined with touch to manipulate dynamic graphical representations. First, beyond the ability to write and draw freely with an appropriately-shaped input device, pen and touch provide capabilities to make annotations that are well integrated with the primary content in the document [38, 42, 49]. This typically results in an easier externalization of thoughts [26, 28, 34, 40], which is key to many creative processes. The ability to point precisely and delineate arbitrary regions has proven effective when interacting with canvases hosting diverse types of marks and glyphs [36, 47]. Such capabilities seem particularly interesting to enable elaborate selections of music symbols that can be subsequently modified by direct manipulation.

Interactive surfaces also enable contextual gesture-based command invocation [2]. Those commands are usually triggered immediately, but their interpretation can also be delayed [43] by leaving ink traces to be activated later [37], thus avoiding some types of premature commitment [20]. Finally, implementing a division-of-labor strategy between pen and touch can offer a higher level of expressive power compared to interactive systems featuring a single type of pointer [22, 27, 31], increasing the number of actions that can be performed by direct manipulation.

These specific characteristics of interactive surfaces suggest that they have much potential to improve support for music composers’ creativity. But to inform the design of music notation software on those devices, it is first necessary to understand the writing and rewriting phases of their creative process, and more specifically how they use current music writing software, what challenges they face, and how they address them.

3 METHODOLOGY

We conducted 9 interviews with music writers in order to understand their creative process and the challenges they face. Our only selection criterion for interviewees was that they had to write down their music on scores (as opposed to, e.g., using a Digital Audio Workstation). Our interviewees have a variety of profiles. Although all of them have a classical background and received academic training in music theory (8 from the Conservatoire National Supérieur de Musique et de Danse de Paris and 1 from Sorbonne University), Table 1 reports on how those different profiles vary in i) their music writing activity; ii) their musical style; iii) their music writing experience; iv) the music notation software they use; and v) their main writing tool.

| Interviewee | Initials | Activities | Main Style | Experience (y) | Current Software | Main Writing Tool(s) |
| --- | --- | --- | --- | --- | --- | --- |
| Lucius Arkmann | LA | C*, A*, E* | Modern classical | > 15 | Musescore | Laptop or paper |
| Alexandre Olech | AO | C*, A* | Modern classical | > 15 | Sibelius | Desktop |
| Gabriel Feret | GF | C*, A* | Modern classical | ≃ 18 | Sibelius | Laptop and paper |
| Maxime Senizergues | MS | C* | Progressive rock | 10 | Musescore | Laptop |
| Philippe Gantchoula | PG | C* | Modern classical | 30 | Finale | Desktop |
| Denis Ramos | DR | C*, A* | Modern classical | ≃ 10 | Sibelius | Laptop and paper |
| Jérémy Peret | JP | A* | Modern classical | > 20 | Finale | Laptop |
| Coralie Fayolle | CF | C* | Choral and orchestral | > 35 | Finale | Laptop and paper |
| Gustave Carpène | GC | C* | Modern classical | 12 | Sibelius | Laptop and desktop |

The interviews were semi-structured and composed of 6 blocks of questions: composer profile, writing process, software use, difficulties encountered, mental model and handling of musical motifs. The order of factual questions within these blocks was adapted during the course of the interviews to create a more fluent conversation. Generative questions complemented factual questions to allow interviewees to expand on some topics. We also included a final block of questions about their potential use of tablets. This revealed that tablet use is almost nonexistent. Only three of our interviewees own a tablet, in each case a small one without a stylus. Only one of them uses a tablet to read scores. Three of them mentioned tablet use by musicians during performances. Four of them explicitly mentioned that handwritten input would be a great addition to either annotate or input notes.

We used contextual interview techniques [23] in order to situate answers in the context of interactions with concrete compositions. Three interviews were conducted at the composer's place of work, and 6 were conducted remotely with the possibility to turn on screen sharing when relevant. Interviews lasted between approximately 1 and 3 hours. With the consent of interviewees, we took some pictures of their software sessions and paper scores to illustrate their comments. The interviews were recorded and transcribed.

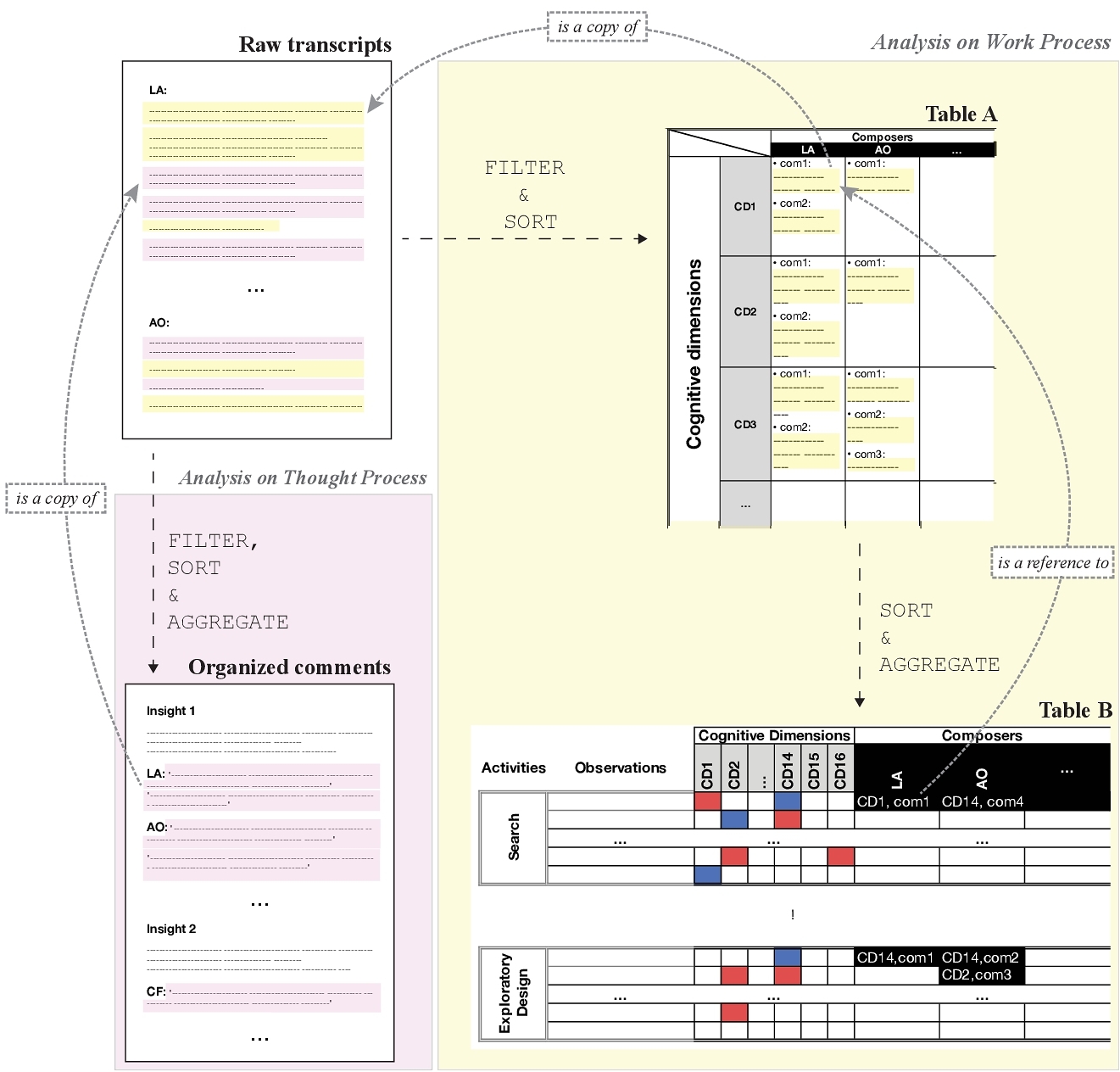

Raw interview transcripts were then analyzed using two distinct methods, as illustrated in Figure 1. These complementary analyses were conducted with two objectives in mind:

- understand composers’ individual thought process in order to capture valuable insights about their creative activities;

- understand composers’ individual work process in order to identify both the positive and negative usability-related aspects of their music writing tools and workflow.

Figure 1 describes how these two analyses are articulated. The work process analysis consisted of a systematic application of the Cognitive Dimensions of Notations (CDN) framework [18] using the comprehensive set of CDNs listed in Table 2.

| Cognitive Dimension | Interpretation in the music notation context |
| --- | --- |
| Abstraction Management | “How can the notation be customised, adapted, or used beyond its intended use?” |
| Closeness of Mapping | “Does the notation match how you describe the music yourself?” |
| Error Proneness | “How easy is it to make annoying mistakes?” |
| Hard Mental Operations | “When writing music, are there difficult things to work out in your head?” |
| Hidden Dependencies | “How explicit are the relationships between related elements in the notation?” |
| Juxtaposability | “How easy is it to compare elements within the music?” |
| Knock-on Viscosity | “Is it easy to go back and make changes to an element?” |
| Learnability | “How easy is it to master the notation?” |
| Premature Commitment | “Do edits have to be performed in a prescribed order, requiring you to plan or think ahead?” |
| Progressive Evaluation | “How easy is it to stop and check your progress during editing?” |
| Provisionality | “Is it possible to sketch things out and play with ideas without being too precise about the exact result?” |
| Repetition Viscosity | “Is it easy to automatically propagate an action throughout the notation?” |
| Role Expressiveness | “Is it easy to see what each part is for, in the overall format of the notation?” |
| Secondary Notation | “How easy is it to make informal notes to capture ideas outside the formal rules of the notation?” |
| Synopsie | “Does the notation provide an understanding of the whole when you ‘stand back and look’?” |
| Visibility | “How easy is it to view and find elements or parts of the music during editing?” |

To perform this work process analysis, the first author meticulously reviewed all transcripts, identifying and categorizing specific comments that fall under one or several cognitive dimensions (CD). This initial step yielded a table that indexes comments on the work process by CD × composer (Figure 1, Table A). These filtered comments were then further organized in a second table, wherein they were aggregated into higher-level observations and categorized based on specific activities as defined within the CDN framework (Figure 1, Table B). Observations were also color-coded, with positive aspects marked in blue and negative aspects in red. Table B provides explicit cross-references to the original raw comments, using the coordinate system established in Table A. We use this comprehensive analysis to present an overview of composers’ work process in Section 5.
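The two-table organization described above can be pictured as a small data structure. The following Python sketch is only an illustration: the names `table_a`, `tag_comment`, `Observation` and `resolve` are hypothetical, the actual analysis was carried out by hand on the transcripts, and the data shown are placeholders rather than real interview comments or codes.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Table A: raw comments indexed by (cognitive dimension, composer initials).
table_a = defaultdict(list)


def tag_comment(comment, dimensions, composer):
    """File one comment under every cognitive dimension it was tagged with."""
    for dimension in dimensions:
        table_a[(dimension, composer)].append(comment)


@dataclass
class Observation:
    """One Table B row: an aggregated observation tied to an activity."""
    activity: str
    summary: str
    positive: bool  # True for a positive aspect (blue), False for a negative one (red)
    sources: list = field(default_factory=list)  # Table A coordinates


def resolve(observation):
    """Follow the cross-references back to the raw comments in Table A."""
    return [c for cell in observation.sources for c in table_a.get(cell, [])]


# Placeholder data only: these are not actual interview comments or codes.
tag_comment("placeholder comment 1", ["Provisionality", "Secondary Notation"], "GF")
tag_comment("placeholder comment 2", ["Provisionality"], "DR")
example = Observation(
    activity="exploratory design",
    summary="placeholder observation",
    positive=False,
    sources=[("Provisionality", "GF"), ("Provisionality", "DR")],
)
print(resolve(example))
```

Keeping the coordinates in `sources`, rather than copies of the comments, mirrors the explicit cross-references from Table B to Table A: each higher-level observation can always be traced back to the comments that support it.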

To ensure the accuracy and comprehensiveness of the CDN analysis, the second author conducted a review of the work carried out by the first author based on their own reading of the transcripts. This review led to some revisions regarding the classification under different CDNs and activities. Additionally, the second author collected comments that were not covered by the CDN analysis, comprising higher-level remarks from composers about their creative process. These comments were methodically sorted and aggregated into high-level insights, offering a comprehensive overview of composers’ creative intentions and practices, which we report in Section 4.

By correlating observations about the work process with insights about the thought process, our overall analysis aimed to pinpoint areas where usability issues might impact composers’ creative process.

4 INSIGHTS ON THOUGHT PROCESS

In this section, we report on the analysis of the interviews along with aspects that emerged as crucial to understand diversity among the composers we interviewed. The primary objective of this analysis is to capture the composers’ thought process as a set of insights. These insights will then serve as a reference to contextualize our analysis of their work process (Section 5) and to inform the proposal of design directions (Section 6). Our thought process analysis encompasses both aspects related to the composers’ activities, such as: the various shapes taken by the interplay of high-level and concrete concerns during the composition process; the importance of details; the assembly paradigm or the shaping of a specific individual; and aspects related to the properties of music, such as the role of time as experience or representation, and the mechanisms of repetitions and variations.

4.1 Interplay between Details and High-level Concerns

In this part, we highlight the importance of details to our interviewees, and the rich interplay of high-level and concrete low-level concerns during the composition process, which echo the different levels of musical abstraction in compositional activities observed by Roels [35]. As AO puts it, “music is an art of details”, with a constant interplay between abstract and concrete levels where music comes to life and emerges. Echoing Nash's observation about composers’ need to “access every detail of a piece, but also be able to get a sense of the ‘big picture’,” [29] the important aspect to grasp is thus that there are generally micro and macro levels. Those need to be strongly linked together but have different properties, and the shape their interplay takes varies from one composer to another. For GF, there is “a bit of dialectic between the macro and the micro,” where “the macro level would be the vision side, the micro level would be the purely auditory side.” For this composer, an abstract level view is required to clarify the state of his composition: “sometimes you can't see, like I said, you've got your head in the sand [...] that's the macro side, that's it, the vision side.” But this is in contrast with MS, for whom reading at the concrete level of music notes helps make ideas clearer, since “there are times when you're going to have to go through the score to see things more clearly.”

For some composers, the micro and macro levels are co-constructed by working on short musical segments. For instance, GF explains how the primary notation level (e.g., rhythm) can also provide a macro level idea, as in “Beethoven symphony number seven, the andante, there's precisely this macro rhythmic side that's <<taa ta ta taa ta>> and you can step back and see it higher and higher, it's always there [...] when you look at it from a macro perspective.” The emergence of a macro level can also come from an amplification of micro elements: “It's a little world in itself that just needs to be enlarged, explored, amplified in as many ways as you want [...] Afterwards, if need be, like a painter, I like it to be stretched out a bit with water.” (GF). The emergence of form is compared by AO to the progressive sculpting of, e.g., attack, resonance, orchestration...; and by LA to polishing thanks to stronger ideas: “once you start to give a form [...] you polish, you polish, you polish, because you have found more expressive ideas, better ideas, stronger ideas, ideas that correspond better to what you wanted to do.”

Details can be immediately perceived data, pure matter (GF: “ideas for themes come to us, and for us it's that and nothing else. It's obvious, it's pure matter, so to say”) but also constraints, and tedious work in progress (GF: “These places, we say to ourselves, are where there's the most craftsmanship, we're bound to say that it's not as good as art with a capital A and in fact these places are sometimes the ones we prefer too”). What falls in the details category is also what is less essential, as in the case of JP who creates versions of a song with different levels of difficulty. To build an easier version: “the idea is to make a slightly minimalist version of it, where we keep the essence of the song, the essential things and where you remove details or change specific aspects, for instance by keeping only a part of an arpeggio that is emblematic for the piece” (Ständchen from Schubert).

4.2 Experience of Time

Time, and the associated aspects of linearity and change, play an important role. As AO also says, “music is an art of time,” which raises questions about the experience and sensation of time, but also about its representation.

For the composers we interviewed, the experience and sensation of time can consist either in putting oneself in the place of a listener (“there's bound to be listening, a human ear, a human temporality,” says MS), or in trying to live, through a more linear composition process, an experience equivalent to improvisation. In general, the composition process is not linear but rather highly iterative [10, 35], as several of the interviewees explained: “It's never too linear” says JP, “I think that's a bit to be avoided”. So, as highlighted by PG, “it's not written as you go along, starting on the first note and ending on the last”. However, AO explains the need “to get back into the feeling of time and to get back into the improvised side of things [...] because composition isn't improvisation [...] In fact, right now I'm forcing myself to go back to something more linear, so as not to go too far into abstraction either.” GF, for his part, compares composing to a journey: “I see [composition] as a process, as a journey [...] I really like initiatory things, I really like travelling and I think that each composition is a little journey and I think spontaneously I compose rather in a linear way.” This comes with “happy accidents”, as DR puts it: “I like a bit of accidents, surprises and all that,” and a relative reluctance to accept a pre-established structure: “I like to give myself a certain amount of freedom when it comes to travelling.”

The sensation of time also encompasses the perception of change, which is why music often has to provide a sense of novelty, but within a familiar context. For this reason, repetition is important if the listener is to get familiar with the themes. To convey the perception of changes, the themes will be more or less transformed, as noted by Blackwell et al. [4] as well. As GF explains, there is a need to “transpose or change melodic ideas, rhythmic ideas, or patterns.” It is important to think carefully about the number of repetitions, and about the type of those repetitions: “I don't make music that's very repetitive in the sense of the motif, it's not the motifs that are repeated, it's more the sequences, the harmonic ranges, that sort of thing” explains GC.

Regarding representation, our interviewees express a need to “get an idea of what it would look like, for example in terms of time proportions [...] to work out the temporal relationships of different episodes in a composition” (PG). For DR, “finding your landmarks in time is easier with paper.” Other needs encompass landmarks in time, which can be provided by bars, rhythms, pages (“a page is like a rhythm” as DR puts it) or musical events, and time durations, which can be expressed in bars or in seconds. Knowing that “such and such a bar lasts six seconds” is important to GC, and for composers making movie music.

4.3 Shaping of a Specific Individual

The composers raised concerns about assembly, and how they work towards the shaping of a specific individual. They use all sorts of metaphors to describe how they see and manage the assembly of musical components to make a piece of music, from “gap-fill text” (GF) to “jigsaw puzzle” (MS) or “sorter” (DR). Their concerns relate to being able to put several ideas into dialogue, to mix them: “because you're obsessed with the same things, it comes from you and it's material that will end up fitting together” (GF) or superimpose them: “And here, I'm really working on the melodic motif that I'm going to associate with my rhythm, for example. So after a while, the two things will probably overlap. I'll superimpose them on the score, for example” (LA). They also need to design transitions, because “transitions are meant to unify the piece”, as expressed by LA: “It's like making a robot, you build the leg and the arm separately. And then you have to find a way for the leg and the arm to work on the same entity.” Composers indeed build on a more or less clear vision of the specific piece they are working on until they reach a state where something new, something that “works”, comes to life, which they may compare to birth: “But what often interests me is finding, let's say eight, twelve, sixteen bars, of an impactful melody that works with the harmony and that it all comes together as one. That's kind of my ideal, and we'll say that it's a birth, a raw material that already has a lot” (GF). They are constantly in search of something that will resemble the musical intention that leads them to the specific individual they are interested in: “you have to find something that resembles the idea” (LA), “build it as good as you wanted it to be” (CF), and “make it real” (GF).

As we have seen above, a piece is made from a converging process involving low- and high-level elements that feed off each other: high-level, abstract elements (ideas or structure) are the ingredients of an architecture in which lower-level elements (notes, dynamics, rhythm, articulation) can take shape to form this musical individual. This process is well summarised by AO: “In fact, there's matter and form. So it was Aristotle who distinguished between matter and form, if I'm not mistaken, and form is what gives it order. It's what makes it not just a collage of bits of music that we've put together without any vision behind it. Form is what makes it come alive, that it's not just matter, it actually has a shape”.

5 ANALYSIS OF WORK PROCESS

We now report on our analysis of composers’ work process, guided by the “Cognitive Dimensions of Notations” framework adapted to music notation [29]. We take the perspective of a notational system, i.e., considering not only the notation itself but also the tools and environments used to manipulate that notation [4]. As detailed in Section 3, we have systematically tagged participants’ comments with the relevant cognitive dimensions and organized these annotated comments according to the different activities performed by composers. The five activities considered in our analysis are those listed in [5]: transcription, incrementation, modification, exploratory design and search.

It is worth emphasizing that nearly all composers engage in all five activities when using their music notation software. Throughout the remainder of this section, we maintain the same color-coding scheme employed in Figure 1-Table B to present our findings: blue for observations highlighting positive user experiences, and red for those indicating negative user experiences. Furthermore, we take care to provide context by referencing insights on thought process from Section 4 and from prior studies when relevant.

5.1 Transcription

Transcribing music requires composers to divide their attention between the source – whatever form it takes: original printed score, audio recording, live performance of an idea using an instrument – and the target – typically a score. It is a crucial activity across composers, who rely on multiple tools depending on the type of project and step in the process. These include their mind, paper, an instrument, an audio recorder, a computer. Ultimately, they need to produce a legible score (or set of scores) using the standard staff notation so that it can be shared with and played by musicians. In this section, we discuss the different kinds of transcriptions that may take place during the composition process.

5.1.1 From Immediacy to Computer. Transcribing musical ideas coming from the mind, whether writing them or playing them, is akin to capturing fleeting thoughts. As note-taking, the transcription process needs to be as smooth as possible so as not to impede the flow of ideas [37]. For instance, CF, having established a basis of raw ideas in her mind and on paper, concretizes them by entering them on her computer: “I use [the computer] more like a typewriter.”

However, inspiration can occur in situations where neither paper nor computer are within reach. As DR explained: “Ideas often come like that, when you are not at your desk [...] you take a step back and it comes.” Seeking a linear flow (see Section 4.2), many composers find their ideas while playing their instrument. They do not want to interrupt their flow to write their musical ideas down. They might record themselves using an audio recorder, adding yet another source of information that will later have to be transcribed since audio input is rarely integrated in music notation software. Because they are created independently, these recordings can contain discrepancies with the rest of the music piece when several instruments are intermingled (high level of hidden dependencies), resulting in assembly challenges (see Section 4.3).

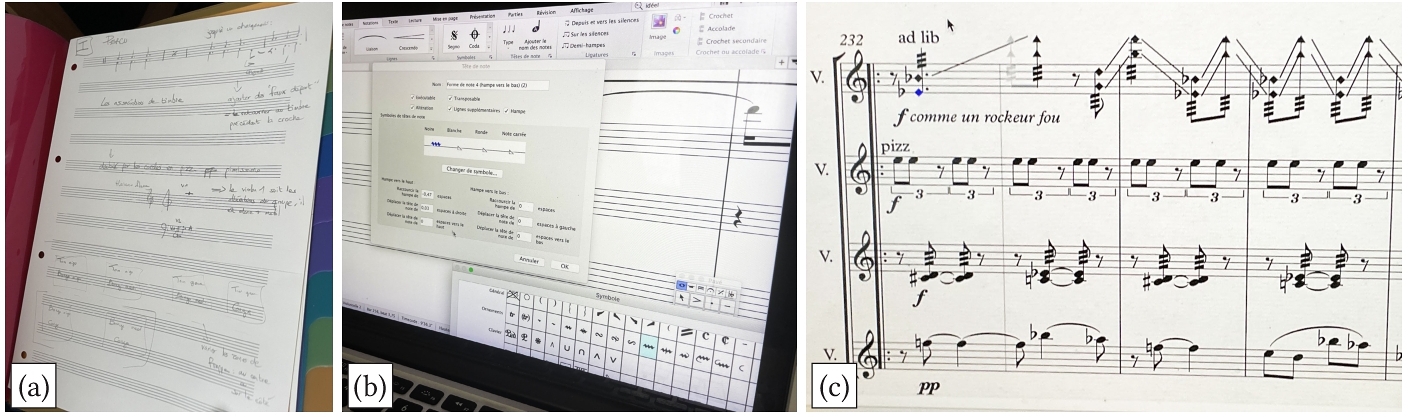

5.1.2 From Paper to Computer. The score that gets passed down to musicians must be computer-written for readability purposes. In order to avoid spending too much time on transcription, most interviewees base their work on the computer and use paper as a secondary tool. Nonetheless, paper remains an efficient way to capture fleeting ideas [34] and to engage in unbounded creative processes, both because it is free of the many constraints imposed by software and hardware, and because it is the way composers learn to write music. They can put down exactly what they have in mind, either detailed notes or high-level ideas (see Section 4.1) to build their specific individual (see Section 4.3) and worry about the rest later. Paper is “a private medium [...] tolerant of errors” that composers “are free to misuse” [29]. Writings can thus be musical notations (symbols), but textual annotations or freeform sketches as well (Figure 2-a).

When transcribing on the computer, composers can choose between learning the numerous keyboard shortcuts (low closeness of mapping and learnability), navigating through the icons and menus (high knock-on viscosity due to the low visibility of the overloaded menus), playing the melody on a MIDI keyboard (high error proneness but high closeness of mapping for piano players), or a combination of those. Most interviewees seemed satisfied with how they are transcribing basic musical notation. But many complained that some symbols are not supported by their software and require tremendous effort to add (low closeness of mapping and abstraction management), sometimes requiring the creation of a new symbol such as an accent or note head (Figure 2-b).

Textual annotations are not necessarily destined to be transcribed on computer scores. For instance, personal comments or research notes are usually made on paper, because music notation software is centered on the score and has limited support for such side-notes (poor support for secondary notation). Similarly, sketches such as structural plans are made on paper because they are not supported by the software. Even if they were, drawing them with a mouse or trackpad would be particularly impractical. Those handwritten notations, when bound to stay on paper, force composers to divide their attention between mediums. More importantly, they force them to be perfectly organized to be able to access the information when needed (like AO, who writes in notebooks, or DR, who uses sorters, see Figure 2-a), whether it be during the composition process or years later.

5.1.3 From Computer to Computer. Arrangers often rely on PDF files or have several instances of the application open simultaneously to transcribe parts of musical notations. This can bring visibility problems, as JP stated: “I have the PDF with the original score on one half of the screen, another PDF with a piano arrangement which I draw ideas from, and Finale roughly above, so I switch from one window to another.” LA also complained about the impossibility of putting two scores side-by-side (low juxtaposability), leading him to go back and forth constantly between files and to memorize the musical elements to transcribe (hard mental operations) instead of making a visual copy.

5.1.4 From Computer to Paper. The last type of transcription is from the score to the end product(s): when written for several instruments, musicians generally only need their own part. In this case composers must extract the respective staves, producing new scores that can be distributed to – and usually printed by – the musicians. Music notation software handles these extractions, but while composers do not have to rewrite the score's contents, they have to adjust the layout because measures and spacing between staves get jumbled in the process (high level of hidden dependencies). Moreover, when the music piece is split between several files, composers have to repeat these extractions, increasing repetition viscosity, as corroborated by CF: “I do this file by file, it takes more time.”

5.2 Incrementation

Adding small bits of information to the music notation differs from transcription in that it does not require composers to divide their attention. They can remain primarily focused on the score. Moreover, incrementation not only involves adding content – such as notes, symbols or text – but modifying or erasing content as well. In this section, we focus on the context in which composers perform this activity.

5.2.1 The Sacrifice of Flexibility for Aesthetics. A score made with music notation software is both beautiful and well-structured. Resembling an engraved score throughout the work process (providing high visibility and progressive evaluation), it can help composers with some tasks. But it also impedes other tasks. For instance, AO writes a lot of annotations for himself on the score and needs to get rid of them when sharing the final product. He has trouble identifying these annotations because they look too similar to the primary notation (poor support for secondary notation). Another major problem of working directly on the final product and not on a personal draft is the negative impact on creativity, because the rigid framework that comes with this appealing notation imposes rules on composers who sometimes prefer more flexibility (low abstraction management). As GC said: “It's configured in every way, so to be able to unconfigure something is not easy, you have to really dig into the depths of the software, and the user is not at all creative with the tool. He can't create his own tool, he can't develop, except by being super strong. But otherwise it's true that we endure a lot and in fact we can do things but it's always tricky, tinkering.” MS wished for an interface closer to his mental model, one that would not hinder his workflow: “I would just like to write it in a more graphic way” (low closeness of mapping).

It is indeed difficult to add notations because composers need to deal with issues of form and position, whether it be within measures (e.g., elements that shift when you add new ones) or around them (e.g., additional lines, ornaments) (high knock-on viscosity). But the visual issues are symptomatic of deeper, more logical ones. For instance, CF commented about being unable to step outside of the rhythmic framework: “The starting point is the measure. But when we don't have measures [in our music], that's a lot more complicated because we are still obliged to pretend we use measures, to delete them afterwards, to ensure that they don't appear anymore and that the spacing is still consistent” (Figure 2-c). LA shares this concern: “In these software, the rhythm is hyper framed. If you make music in 4/4, everything has to be in 4/4 perfectly. It can't overflow. You are forced to solve rhythm problems where sometimes you just want to put your idea. And sometimes it goes beyond the measure.” Such problems, which do not exist on paper, force composers to look for answers in the software's documentation or on the Internet, leading them to think twice before adding a complex notation (low provisionality) or to postpone their action, as GF does: “There are places that we deliberately leave under construction, we tell ourselves that we will come back to them later” (high premature commitment). Because phrasing a practical problem to get help from online forums is troublesome, some composers give up and avoid using music notation software to its full potential. On top of that, elements such as nuances or fingerings tend to be added towards the end, in order to avoid having to repeat this task in case of more fundamental changes to, e.g., the rhythm or melody.

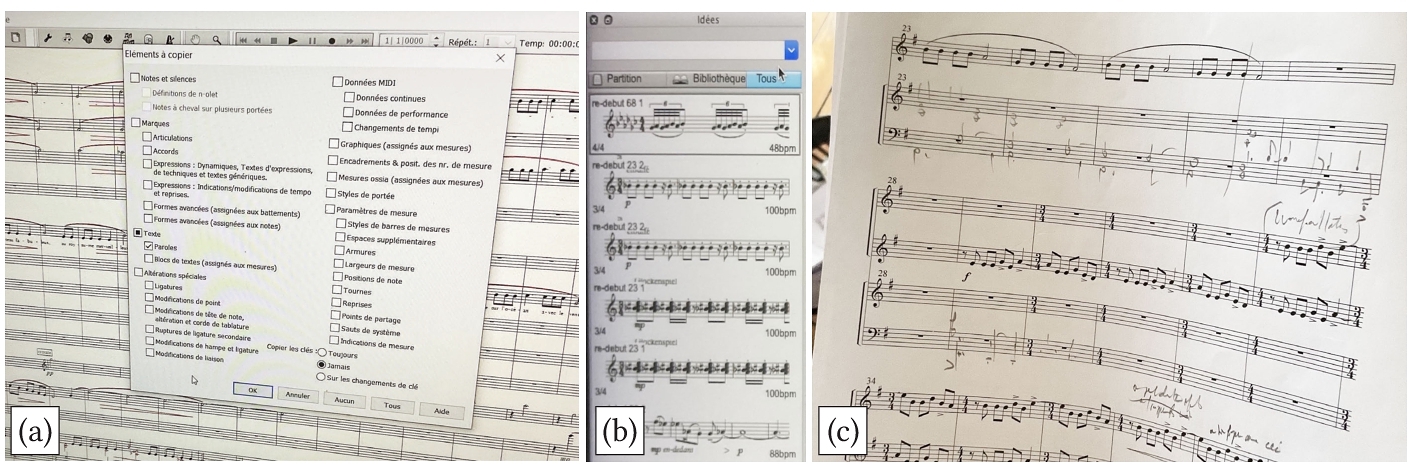

5.2.2 The Role of Copy and Paste. Once composers have written something they like, they do not write it again. They rely instead on the copy & paste function, especially when they are working with repetition and variations (see Section 4.2). The ability to instantly reproduce elements that have been hard to input is one of the strengths of music notation software. It represents a huge time-saver and makes incrementation easier by handling the heavy lifting in place of composers (e.g., pitch and rhythms of numerous notes, or weird abstractions), making up for the time lost inputting the notation initially. As GF explains, there is a point where copy & paste is much more frequent than manual input of new symbols. But while it is a central function, it could be more efficient. Copy & paste comes with a lot of hidden dependencies, because the software does not know which part of the notation the composer is interested in. The composer has to specify this explicitly, for instance when copying only the rhythmic aspect of a motif to paste it in a different harmonic context, or the other way around in case of different time signatures. Most interviewees struggle with it, and only CF mentioned an advanced, arguably bloated dialog box that enables customizing the copy & paste function (Figure 3-a).
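To make the idea of copying only one aspect of a motif concrete, the following is a minimal sketch and not a description of any existing notation program. It assumes a simplified, hypothetical note model (`Note` with a MIDI pitch, a duration in quarter notes and an optional fingering) and a hypothetical helper, `paste_aspects`, that transfers only the chosen aspects from a copied motif onto existing notes.

```python
from dataclasses import dataclass, replace
from fractions import Fraction
from typing import Iterable, List, Optional

# Hypothetical, simplified note model (an assumption made for illustration).
@dataclass(frozen=True)
class Note:
    pitch: int                       # MIDI note number (60 = middle C)
    duration: Fraction               # length in quarter notes
    fingering: Optional[int] = None  # optional finger number

ASPECTS = {"pitch", "duration", "fingering"}

def paste_aspects(source: List[Note], target: List[Note],
                  aspects: Iterable[str]) -> List[Note]:
    """Return copies of `target` in which only `aspects` are taken from `source`."""
    chosen = set(aspects)
    unknown = chosen - ASPECTS
    if unknown:
        raise ValueError(f"unknown aspects: {sorted(unknown)}")
    if len(source) != len(target):
        raise ValueError("source and target must contain the same number of notes")
    pasted = []
    for src, dst in zip(source, target):
        # Overwrite only the selected fields; everything else stays as in the target.
        changes = {name: getattr(src, name) for name in chosen}
        pasted.append(replace(dst, **changes))
    return pasted

# Copy the rhythm of a motif into a different harmonic context.
motif = [Note(60, Fraction(3, 2)), Note(62, Fraction(1, 2)), Note(64, Fraction(2))]
context = [Note(67, Fraction(1)), Note(71, Fraction(1)), Note(74, Fraction(1))]
variation = paste_aspects(motif, context, {"duration"})
```

Here the pitches of `context` are preserved while its durations become those of `motif`. Passing `{"pitch"}` instead would do the reverse. A real score model would also have to handle measures, ties and voices, which this sketch deliberately ignores.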

Besides individual passages, copy & paste is also of use in the context of more global actions, when the score contains many repetitions, or features “a theme returning in a modified form” [4]. This quickly becomes tedious, as composers must i) ensure that they are copying the right elements in order to avoid bad surprises when pasting; ii) search for relevant occurrences through the notation [4] and paste in the right place; iii) perform modifications on the elements in every passage to edit (high repetition viscosity) – in addition to layout problems discussed in the next section. Some composers wish they could apply grouped edits on motifs of interest.

5.3 Modification

The reorganization and restructuring of existing notation focuses on its layout and implies that no new content is added to the score. Composers often have to modify the layout of individual elements or entire measures to improve the readability of the score. But multiple dependencies, between elements and measures and between elements themselves, make this difficult. We detail the three levels at which we observed such dependencies.

5.3.1 Elements within a Measure. Notes and accidentals are usually bound to a measure. Music notation software need to anticipate what composers are aiming for – as opposed to paper where elements can be written directly as intended without interference. The software often makes wrong guesses (resulting in high error proneness), misplacing elements (low closeness of mapping) which then require additional operations to correct. LA complained about this gulf of execution: “If you want something really specific, you cannot get into it and you always stay at the surface of it. [The software] might place elements in an intelligent way, you don't want them to be placed like that. It makes an average between what it thinks is best and your intention, so you waste a lot of time trying to adjust things progressively.” Actions like shifting notes, flipping stems, or adjusting accidentals can be tedious to perform in the confined space of a measure (high knock-on viscosity) because of the structured editor's rigidity, as discussed earlier. As MS said: “You tinker with it to make it fit into a frame.” When the notation is dense, composers will often start over rather than embark on a complex edit sequence.

5.3.2 Elements within the Page. On the contrary text, and most symbols, are not bound to a measure and can be moved around freely on the page. While this can be an asset when fine-tuning the layout (see Section 4.1), it can also create problems, impeding the restructuring and assembly of musical components (see Section 4.3). LA, for instance, who “would like to drag things around” and “move measures, but refrain from copy-and-pasting” has currently no other choice, and because texts and symbols like dynamics and ornaments are still conceptually bound to the elements of a measure, they need to be selected separately.

5.3.3 Measures within the Page. The layout of measures constitutes a major source of friction, as expressed almost unanimously by the interviewees. Layout decisions can depend on diverse constraints, like aiming to have a fixed number of measures per staff, the need to fit the score in a given number of pages, or the effort to match layout interruptions with the flow of musical phrases – avoiding a page break in the middle of a phrase, for instance. Whereas a text editor can go as far as hyphenating a word to optimize layout, the modern staff notation forbids splitting measures. Composers thus resize measures at the risk of triggering a snowball effect on the measures that follow due to their high hidden dependencies, leading to many hard mental operations. As AO explains: “It has to be somewhat standardized. You have to ensure that all the notes have approximately the same gap, but at the same time, that all the pages are almost full, that everything is filled, that there is no empty space, unused space. Yeah, that might take a little thought.” Some composers do work on the layout of measures during the writing process for the purpose of progressive evaluation, to get a sense of the final structure. But as observed by Bennett [3] most music writers delay the effort, possibly until the end (too much premature commitment), preferring to work in a fickler context in order to avoid the potential waste of tedious layout efforts.
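The snowball effect can be illustrated with a toy layout model. The sketch below assumes a simple greedy strategy that packs unsplittable measures into systems (lines) of fixed width. This is an assumption made for illustration only: actual notation software uses more elaborate spacing algorithms, and the function name `break_into_systems` is hypothetical.

```python
from typing import List

def break_into_systems(measure_widths: List[float],
                       system_width: float) -> List[List[int]]:
    """Greedily pack measures (by index) into systems, never splitting a measure."""
    systems: List[List[int]] = []
    current: List[int] = []
    used = 0.0
    for index, width in enumerate(measure_widths):
        if width > system_width:
            raise ValueError(f"measure {index} is wider than a system")
        # A measure that does not fit moves as a whole to the next system.
        if current and used + width > system_width:
            systems.append(current)
            current, used = [], 0.0
        current.append(index)
        used += width
    if current:
        systems.append(current)
    return systems

# Eight measures of equal width, three per system.
before = break_into_systems([40, 40, 40, 40, 40, 40, 40, 40], 120)
# Widening only the second measure changes every system that follows.
after = break_into_systems([40, 50, 40, 40, 40, 40, 40, 40], 120)
```

In this example `before` is `[[0, 1, 2], [3, 4, 5], [6, 7]]` and `after` is `[[0, 1], [2, 3, 4], [5, 6, 7]]`: a single local resize shifts the content of every subsequent system, which is the kind of hidden dependency the interviewees described.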

5.4 Exploratory Design

It is common practice for composers to play around with new ideas on their instrument and only put them in writing after they are satisfied. However, going through writing for exploratory design without being sure of what will result allows composers to see and understand the music mechanisms, favoring progressive evaluation. As illustrated by MS: “When there are several voices, it allows you to see how they can fit together so that it can work [...] Seeing the notes in writing makes it clearer in the head. Otherwise it's not intellectualized, and it stays a bit vague.” We discuss several strategies to support exploratory design, each adapted to its situation.

5.4.1 On the Final Score. Exploring ideas directly on the final score is risky, as it can adversely impact work done earlier, be it content- or layout-wise. Composers thus usually restrict their in-context modifications to small edits only, with manageable hidden dependencies over content and close to no effect on layout. When faced with more ambitious modifications, another strategy described by GC consists of copying a passage and pasting the duplicate right next to it, working on that duplicate and thus staying in-context. This gives music writers high juxtaposability power, allowing them to try things without altering the original material, and changing the layout only temporarily (better provisionality). However, a more popular strategy is to copy and paste the passage at the end of the score, in measures left blank to this effect. Composers then work in this isolated zone, akin to a sandbox, i.e., safe, without hidden dependencies, but at the expense of juxtaposability and visibility. Committing modifications then requires effort to assemble the musical components and make them work together (see Section 4.3).

When working on the final score, another issue to consider is the lack of versioning capabilities [19] to keep track of changes. Even if editors have an undo feature, edits quickly become definitive. As GF said: “There is a lot of choosing. And sometimes the choices are painful because there are things we like and would like to keep, but we must not hesitate to erase.”

5.4.2 Away from the Final Score. In some cases, an idea turns out to be a better fit to another piece. Or composers simply want to explore it later. Some software features a component in which to store such ideas, akin to an elaborate clipboard that can be accessed and browsed (Figure 3-b) from any score. Finding a particular idea in the stack and working on it can be challenging, however. Most interviewees also explained switching between several files in order to explore novel ideas. For instance, some of them maintain a draft version and an engraved version, or a succession of chronological versions of the same score. Composers thus reassure themselves with the existence of a static version before embarking on global modifications (e.g., changing the tonality) that could disturb the whole piece in ways difficult to predict. Some composers instead print their current score and scribble on it with a pencil, to regain “the expressive freedom of pencil and pen marks” [29] they need to explore ideas (high closeness of mapping). CF does so to try out orchestration bits, under the lead parts that are software-written (Figure 3-c). DR explores distinct aspects of his music on paper (e.g., rhythmic, harmonic or contextual ones) and explains that it also entails low premature commitment: “I can always note new ideas, new concepts, there is no precise order.”

5.5 Search

Blackwell et al. [4] identified the search for occurrences of a theme or motif as one of the generic activities performed by composers. More specifically, our observations reveal that composers search for information within the score for two main reasons: when looking for an element – typically to edit it – and when reviewing their work, checking for possible mistakes, making sure everything is “as it should be” – shaping a specific individual (see Section 4.3). We identify search strategies available to the composers and discuss the importance of the chosen perspective.

5.5.1 Using Absolute Positions. Music writers can find an element based on its previously-known location. For instance, bar or page numbers are useful to find a location in relation to another source, acting as a coordinate system. CF, who writes on paper before working with software, explained: “The paper version does not have the same layout, so visual cues change. But there are bar numbers that do not change.” JP, who writes arrangements using software together with the original score displayed as a PDF, added: “Sometimes there are repeated elements, so to ensure that I am in the right place, I use the bar numbers a lot. [...] This is mostly for comparison purposes” (high visibility). Although they are easy to jump to, bar numbers are not always there in the first place, limiting juxtaposability: on a handwritten score composers have to write every number themselves, and on an original score numbers are usually shown for the first measure of a line only.

5.5.2 Using Relative Positions. Comparison tasks aside, numbers are of little use to find an element in a score, as they have no meaning musically-speaking. Instead, composers use their knowledge of the form (see Section 4.3) and temporal grasp (see Section 4.2) of the score. MS explained: “I know that [what I am looking for] is towards the end, or the beginning, or the middle of the score.” PG relies on relative positions: “It is before or after another musical event, theme or passage that I remember.” Although this type of search works fine with short scores, it quickly becomes tedious with longer ones, as LA stated: “Finding a precise element across twelve pages begins to feel like finding a quote in a book.” To reduce the search scope, composers commonly resort to custom visual cues such as headers, comments and color codes that catch the eye and that can be quickly identified from an overview of the score. These spare the composer from having to actually read the notation in detail, acting as bookmarks that support role expressiveness.

5.5.3 Using Musical Properties. Some interviewees expressed the need to find elements based on their properties rather than by scanning and navigating the score, essentially calling for better abstraction management at both the micro and macro levels (see Section 4.1). For instance, GC wished he had a way to quickly inspect specific details: “I know what is missing, what would be great: an internal search engine. If I am searching for a succession of notes and/or rhythms, I would like to find it like when I do "Ctrl+F" in a text editor.” It is also the case for AO, who needs to check that every time he writes “pizz”, he also writes “arco” somewhere in the following measures. LA expressed related high-level concerns: “I would like to search for precise things in a score by their theoretical designation or by describing their role in the piece. For instance, I may need to find a "transition" or a "conclusion".” This corroborates what GC said about studying his own music, concerned about the temporal experience (see Section 4.2) of the listener: “You are not always aware of everything. From a formal point of view, you may ask yourself: "Have I not over-used this motif?". [...] What is hard to realize when you are composing, is the effect it has on someone who listens for the first time. [As composers], we don't have this initial listening experience and need to check if an element is repeated too many times, or not enough to actually be remembered.”
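A property-based check such as the one AO performs by hand can be sketched in a few lines. The example below assumes that the playing-technique markings of one staff are available as `(measure number, text)` pairs, which is a hypothetical representation, and reports every “pizz” that is not followed by an “arco” in a later measure. The optional `within` window is likewise an assumption added for illustration, not something the interviewees asked for.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

def _normalise(text: str) -> str:
    """Lower-case a marking and drop surrounding spaces and the trailing dot."""
    return text.strip().lower().rstrip(".")

def unmatched_pizz(markings: List[Tuple[int, str]],
                   within: Optional[int] = None) -> List[int]:
    """Return the measures of 'pizz' markings with no 'arco' in a later measure.

    If `within` is given, the 'arco' must appear at most that many measures later.
    """
    arco = sorted(m for m, text in markings if _normalise(text) == "arco")
    missing = []
    for measure, text in markings:
        if _normalise(text) != "pizz":
            continue
        nxt = bisect_right(arco, measure)  # index of first arco strictly after
        if nxt == len(arco) or (within is not None and arco[nxt] - measure > within):
            missing.append(measure)
    return missing

# Markings of a single string part, as (measure number, text).
markings = [(4, "pizz."), (9, "arco"), (17, "pizz."), (30, "arco"), (42, "pizz")]
forgotten = unmatched_pizz(markings)          # no arco at all after measure 42
late = unmatched_pizz(markings, within=8)     # also flags the arco that comes late
```

With these markings, `forgotten` contains only measure 42, while the stricter `within=8` check also reports measure 17, whose “arco” arrives thirteen measures later.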

5.5.4 Using Ears and Fingers. Composers often rely on the audio playback function to check their score for mistakes. Playback, even with mediocre sound quality or with the wrong instruments playing, lets them check the notation in detail (see Section 4.1) and reveal hidden errors of pitch and duration. It is particularly useful among composers who can neither perform what is written with their instrument, nor imagine the result. Even for those who can, it hints that the musical notation is fairly opaque, thus bearing high error-proneness. As CF said: “The ear is better than the eye.” Progressive evaluation seems to be best supported by enabling composers to listen and read simultaneously in order to check that the sound matches the notation.

However, when composers assess the musicality of their work, the audio playback function usually falls short: elements around the staff, like dynamics and ornaments, although being an integral part of the notation, are not always recognized as such. In addition, digitally-simulated instruments fail to sound realistic and have limited expressivity (low closeness of mapping), forcing composers to install additional sound libraries. Only then can they take a step back from their work and properly gauge if the musical elements are well-balanced, together (see Section 4.3) and over time (see Section 4.2).

Another piece of information that can be found neither by reading the notation nor by listening to the piece is the playability of the score. Fingerings, complex chords and hazardous rhythms must be tested on a real instrument, because these are interpretation-related problems. Many composers, including GF, exchange with performers to iterate on these details: “We show the score to the violinist or the pianist, and we are told: "It will take two weeks to work on just these two measures, it's not consistent in terms of difficulty." There must be a certain coherence in the playability so that the performer can express himself well. The music must fall under the fingers.” These remarks are usually noted on the spot using paper. The composer then has to find the corresponding places to modify in the score, leading back to the first issue discussed in this section.

5.5.5 Using a Specific Perspective. Regardless of what composers are searching for, how the score is displayed heavily influences their ability to navigate easily. Being able to switch representation depending on the activity is a key feature. For instance, when the score is written for a solo instrument, the portrait orientation is preferred. As AO explained: “I am able to see almost half of my piece on one page, it gives me a more global vision” (see Section 4.3) (high synopsie). When the score is written for several instruments, the landscape orientation is sometimes a better option, reducing the number of line breaks (high visibility). These two types of display are both paginated, bringing temporal structure (see Section 4.2) that can help music writers like DR: “Pages allow me to compartmentalize. They set the pace. I know that there is roughly the same duration on every page. [...] They are like the white bands on the road that show you how far is the car in front of you because without them, it would be much harder to have a notion of space and time” (high closeness of mapping). However, this structure also pushes them to deal with layout challenges early on, shifting the focus away from creativity (high premature commitment). Panoramic orientation removes pagination and shows the score as a continuous flow of measures, reducing premature commitment. CF explained: “It depends on the state of the work, and it is very variable. Usually, it is easier to start like this without worrying about the layout, and then work on the layout, which is tedious.” Composers can compensate for the lack of systematic structure with their own abstractions like the headers discussed earlier. AO, writing a piece of music for a movie, comments: “The score has five parts that correspond to five scenes, and I see them visually, because I defined them with numbers.” This way, good abstraction management increases visibility.

The longer the score gets and the more instruments a piece involves, the more important structural choices become. Composers often split their score in several files to work on distinct parts in any order, reducing premature commitment and making it easier to find a specific element (high visibility). Another way of increasing the visibility is to optimize the space by hiding non-essential staves (for instance those with no notation) during the writing process, displaying them only in the end.

Taking a step back in order to get a sense of the global flow allows composers to find elements or spot mistakes easily, provided that they can still see the details: when the score is several pages long, zooming out quickly makes the notation illegible (low synopsie). This is why many composers use a wide aspect-ratio monitor, several monitors or even a printed version of their score that they can hang on the wall or spread on the floor, increasing visibility, sometimes at the cost of additional transcription tasks.

6 DESIGN OPPORTUNITIES

At a high level, our analyses suggest that music writing software imposes too many constraints when editing the primary notation, in line with observations made by Nash [29]. While a couple of composers acknowledge the utility of strongly-structuring elements such as measures or pages to guide their composition process, the majority of them clearly express that, although they want structured outputs that they can share with other musicians, music writing applications frequently interfere with their creative workflow. This often leads them to find workarounds to cheat the software or to resort to more adaptable mediums such as paper and audio recorders.

Opportunities to address these issues are both at the notation and at the environment levels [17]. Notation-level design manoeuvres include, e.g., reducing viscosity by providing relevant abstractions, or increasing clarity by enabling secondary notations. At the environment level, as discussed in Section 2, the integration of interactive surfaces supporting digital pen input in the music writing process offers a promising avenue for increasing flexibility without sacrificing structure by “simplif[ying] the alteration, erasure and overwriting of notes and passages” [29]. An obvious advantage of digital pens lies in the easy creation of freeform annotations above a structured digital representation. As observed on multiple occasions during our interviews, music composers hold a mental representation of the specific individual (see Section 4.3) they have in mind, which is partially implemented in their score but not entirely in the primary notation. Freeform annotations can reproduce the experience they have with paper where they can circle, add symbols and notes to capture their mental representation of the score and/or convey intentions to the musicians who will perform their piece. However, we believe that the advantages of digital pens go much further than supporting the easy and flexible input of annotations [46]. In particular they have the potential to address issues that are related to the rigidity of the structure. This section discusses opportunities that seem worth investigating as part of the design of future music notation software. All of these opportunities revolve around the idea of temporarily breaking the structure to better fit composers’ thought process. We envision breaking away from the structure along three possible directions, detailed in the remainder of this section:

- breaking down musical elements: enabling manipulation of score elements at a finer granularity than the primary notation permits;

- breaking the score's homogeneity: allowing composers to capture their ideas using other notations and media within the score;

- breaking the score's linear structure: offering composers the freedom to arrange scores and musical fragments spatially, adapting the layout to the specific task at hand.

6.1 Breaking Down Musical Elements

Our analyses revealed that, in building a rich experience happening within time (see Section 4.2), composers dedicate much attention to repetitions in their work, inserting recurring patterns but customizing some or all occurrences of those patterns so that repetitions become variations rather than mere duplicates. Composers work with various aspects of patterns: rhythm, pitch, fingering, nuances, ornaments. They explained that they rely heavily on copy-paste to facilitate such pattern-centric work but, because copy-paste is monolithic, they spend a lot of time adding, removing and adjusting elements within the pasted fragment, as we detail in our analysis of their incrementation activities. Furthermore, our examination of their search activities revealed that music notation software lacks effective means to identify musical segments based on specific musical or notation properties.

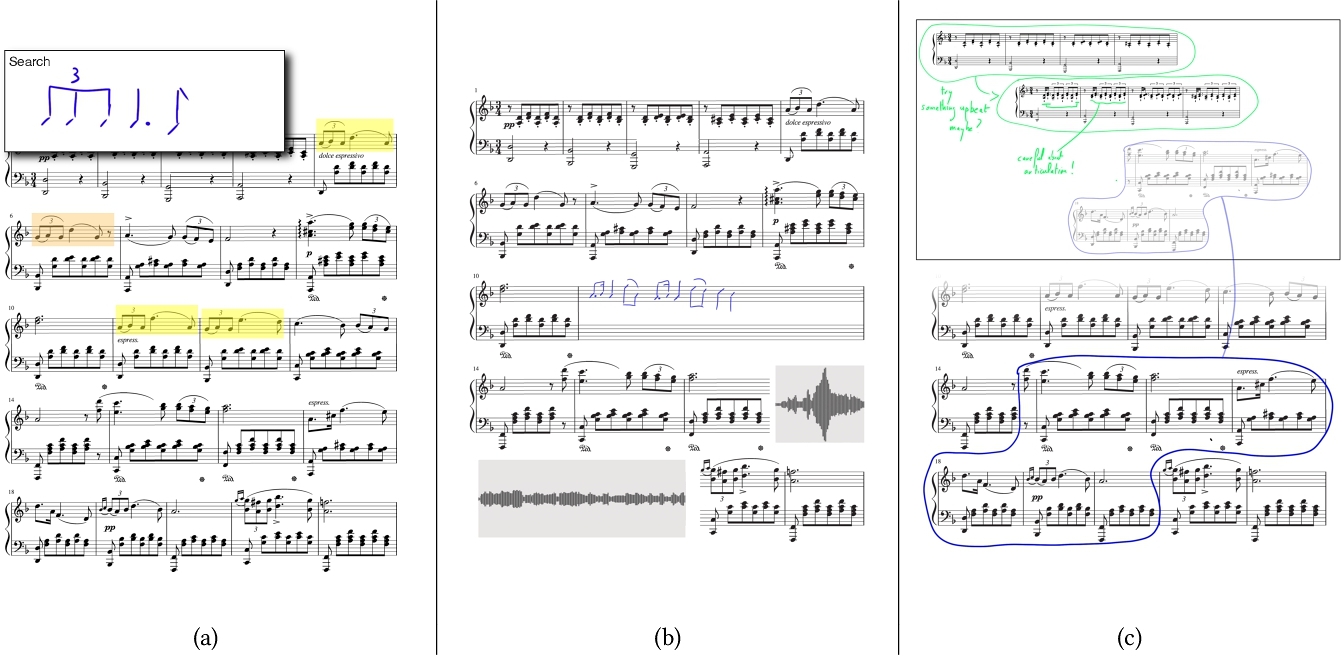

The precision and flexibility of the digital pen could empower composers when working on patterns. The pen can precisely point at and delineate graphical elements. Current music software only allows monolithic selections, but with such a precise input device composers could tell the system what specific properties they are interested in. They could for instance select elements directly by circling them, or start a freeform lasso selection on a specific element in order to restrict the selection scope to elements of the same type. For instance, they could initiate a selection on a beam (resp. a note head) to copy only the rhythm (resp. the pitch) within the delineated fragment. Or they could select and copy only the fingerings to be repeated throughout the score – an operation that was described as particularly tedious in our interviews. Beyond the direct selection of elements, the pen could leverage users’ ability to write and draw elements by hand, not only to input the primary notation but also to search for patterns in a score, and thus facilitate checks and edits across repetitions. Interviewed composers reported the lack of support for searching patterns according to diversified musical properties. A pen-operated search box could address this limitation. Composers would be able to express the pattern they seek by drawing note heads, beams, stems, or combinations thereof, specifying a query across their score to identify fragments that match their desired pattern, as illustrated in mock-up (a) in Figure 4. Based on what gets highlighted in the score, they could draw or erase some ink marks in the search box to further constrain or relax their query.
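To make the idea of property-specific search and copy more concrete, the following is a minimal sketch, not a description of any existing system. It assumes a deliberately simplified score model (a monophonic list of notes, each with a MIDI pitch and a duration in quarter notes) and assumes that ink recognition has already turned the drawn query into partial note descriptions. All names (`Note`, `QueryNote`, `find_pattern`, `paste_rhythm`) are hypothetical.

```python
from dataclasses import dataclass, replace
from fractions import Fraction
from typing import List, Optional


@dataclass(frozen=True)
class Note:
    pitch: int           # MIDI note number (assumption: one pitch per event)
    duration: Fraction   # length in quarter notes


@dataclass(frozen=True)
class QueryNote:
    # None means "unconstrained": e.g. only a beam was drawn (no pitch),
    # or only a note head was drawn (no duration).
    pitch: Optional[int] = None
    duration: Optional[Fraction] = None


def matches(note: Note, query: QueryNote) -> bool:
    """A note matches if it agrees with every property the query specifies."""
    pitch_ok = query.pitch is None or query.pitch == note.pitch
    duration_ok = query.duration is None or query.duration == note.duration
    return pitch_ok and duration_ok


def find_pattern(score: List[Note], query: List[QueryNote]) -> List[int]:
    """Return the start index of every fragment of the score matching the query."""
    n = len(query)
    if n == 0:
        return []
    return [
        start
        for start in range(len(score) - n + 1)
        if all(matches(score[start + k], query[k]) for k in range(n))
    ]


def paste_rhythm(source: List[Note], target: List[Note]) -> List[Note]:
    """Copy only the durations of source onto target, keeping target pitches."""
    return [replace(t, duration=s.duration) for s, t in zip(source, target)]


EIGHTH, QUARTER, HALF = Fraction(1, 2), Fraction(1), Fraction(2)

score = [
    Note(60, EIGHTH), Note(62, EIGHTH), Note(64, QUARTER),
    Note(65, EIGHTH), Note(67, EIGHTH), Note(69, QUARTER),
    Note(60, HALF),
]

# Query drawn as beams/stems only: a rhythm with no pitch constraint.
rhythm_query = [QueryNote(duration=EIGHTH), QueryNote(duration=EIGHTH),
                QueryNote(duration=QUARTER)]
rhythm_hits = find_pattern(score, rhythm_query)

# Adding a note head to the first element further constrains the query.
constrained_query = [QueryNote(pitch=65, duration=EIGHTH)] + rhythm_query[1:]
constrained_hits = find_pattern(score, constrained_query)
```

In this sketch, leaving a property as `None` plays the role of erasing ink in the search box to relax the query, while filling it in corresponds to drawing more ink to constrain it. `paste_rhythm` illustrates the non-monolithic copy discussed above: only one property of the selected fragment is transferred.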

6.2 Breaking the Score's Homogeneity

Our interviews revealed a potential mismatch that can occur when the temporal flow for writing music in software is not aligned with the natural flow of composers’ creative thoughts. This creative flow is different depending on the composer's thought level: at a low level of detail (see Section 4.1), it is rather temporal and auditory, while at a high level, it is more about spatial organization, considering parts, structure, and relationships, which are predominantly visual in nature. Our analysis of the work process revealed that composers often pause their software-based work to turn to alternative mediums such as paper to capture fleeting ideas in the form of notes and sketches, or an audio recorder to capture musical sequences played directly on their instruments. Additionally, they are often constrained by the software's rigidity in terms of form and layout, when they would rather have the flexibility to leave certain sections in draft form for later refinement. In essence, composers’ thoughts cannot always be expressed in the primary notation supported by the software, leading to the usability issues detailed across the Transcription, Incrementation, and Modification activities in Section 5.

Current music notation software exposes scores as one homogeneous sequence of staves that cannot be broken and mixed with other content. To address the above issue, the score editing environment could be made more flexible, breaking the homogeneity of the score to support the insertion of different types of content and media both within and between staves. These elements would not persist on the score. They would exist only temporarily, until they get transformed into actual music notation. Their purpose would rather be to enable composers to insert ideas in-context, regardless of the way they were captured. Starting with a relatively simple case, recent work on supporting in-context annotations on pen-based devices can inspire interaction techniques for music notation software. For instance, SpaceInk [38] enables users to insert handwritten notes between words, lines, or paragraphs in a structured text document by locally reflowing its contents. RichReview [49] also explores the concept of in-context annotations with a rich variety of media, including audio annotations. Such systems preserve the spatial structure and linear flow of documents without being overly constrained by the original document's primary notation. Applying a similar approach to music notation software, composers could for instance insert empty space between existing measures on a staff to jot down a new idea (3rd grand staff in Figure 4-b). They could also insert ideas captured as audio recordings, represented using their audio spectrum (Figure 4-b across the 4th and 5th grand staves). In both cases, the new idea is placed in-context effortlessly, as it does not have to be converted to the primary notation immediately. This can be postponed to a later time, causing fewer interruptions to the composer's train of thought.

Whichever notation we consider, offering flexibility between interpreted input (digitally-enhanced musical symbols) and non-interpreted input (handwritten symbols) seems particularly important. Non-interpreted input lets composers capture fleeting thoughts without disrupting the creative process. Composers may choose to leave a region in the form of annotations or as elements from the primary notation but with relaxed constraints (e.g., without measure bars) and refine it later (3rd grand staff in Figure 4-b), eventually asking the system to interpret it when it is stable enough. This is the opposite of what music notation software such as StaffPad does, as it recognizes handwritten notation greedily – see Section 2.3. It seems particularly important that composers keep control over when a passage gets interpreted to avoid the system taking premature actions such as adjusting the layout while they are still in a transitional phase of modifications (e.g., when filtering their score for a specific instrument). Pen-based systems such as MusInk [43], WritLarge [48] and ActiveInk [37], which give users much freedom regarding when to interpret pen input, can inspire the design of such interactions.
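The contrast between greedy and composer-controlled interpretation can be sketched as follows. This is only an illustration under strong assumptions: a region is modeled as a list of raw strokes, the recognizer is a stand-in callable supplied by the caller (no real recognition engine is implied), and the names `InkRegion`, `add_stroke` and `interpret` are hypothetical. The choice to discard an earlier interpretation when new ink is added is also an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Stroke = List[Tuple[float, float]]           # one pen stroke as (x, y) points
Recognizer = Callable[[List[Stroke]], List[str]]


@dataclass
class InkRegion:
    """A score region that keeps raw ink until the composer asks for interpretation."""
    strokes: List[Stroke] = field(default_factory=list)
    interpreted: Optional[List[str]] = None   # None while the region is still a draft

    def add_stroke(self, stroke: Stroke) -> None:
        # Adding ink never triggers recognition (unlike a greedy recognizer).
        # Assumption: new ink makes any earlier interpretation stale.
        self.strokes.append(stroke)
        self.interpreted = None

    def interpret(self, recognizer: Recognizer) -> List[str]:
        # Recognition runs only on explicit request, once the passage is stable.
        self.interpreted = recognizer(self.strokes)
        return self.interpreted


def stub_recognizer(strokes: List[Stroke]) -> List[str]:
    """Placeholder recognizer: labels each stroke instead of recognizing symbols."""
    return [f"symbol_{i}" for i, _ in enumerate(strokes)]


region = InkRegion()
region.add_stroke([(0.0, 0.0), (1.0, 1.0)])
region.add_stroke([(2.0, 0.0), (2.0, 3.0)])
draft_state = region.interpreted              # still None: the region stays as ink
symbols = region.interpret(stub_recognizer)   # composer-triggered interpretation
```

The point of the sketch is that layout adjustments and symbol recognition would be tied to the explicit `interpret` call rather than to each pen stroke, leaving the composer in control of when a draft becomes primary notation.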

6.3 Breaking the Score's Linear Structure

Linearity is a fundamental aspect of a musical piece. Composers assess their scores with respect to temporal evolution and temporal constraints. However, the creative process itself is often non-linear. It can even extend beyond a single composition. In particular, in their complex and iterative assembly process (see Section 4.3), composers frequently revisit specific sections within their scores to explore alternative versions. They also sometimes draw inspiration from their own previous works, or from the works of others. Unfortunately, music notation software poorly supports tasks that involve sections scattered within a score or across scores. Additionally, when revising a section at a detailed level (see Section 4.1), composers often copy the section and paste it right after the original to work on that copy (potentially disrupting the layout). Or they copy it towards the end of the score, potentially losing valuable context. Some composers even prefer creating several independent versions of their score. This is due to the rigidity of the score's linear structure. A well-designed pen-based system could allow temporarily breaking this rigidity to facilitate the activities that it adversely impacts, as identified in our analysis of the work process: Transcription, Incrementation, Exploratory Design.

One particular advantage of pen-based systems is their ability to replicate the experience of working on a blank sheet of (unstructured) paper. In digital systems, this concept can take the form of an infinite canvas where users can insert various elements and arrange them freely [36, 37, 47]. Designing such a canvas mode would be a valuable addition to music composition software that could support many more operations than the Idea Clipboard depicted in Figure 3-b, which is essentially a list of archived passages that can be pasted on a score. With a more flexible canvas, composers could organize and “retrieve [collected ideas] based on diversified cues” [39]. Figure 4-c illustrates how such a canvas could be used as a storage space as well as a sandbox to experiment with fragments safely. Those fragments could be arranged freely to support spatial grouping and indexing, and to support tasks that involve their comparison side-by-side. This flexibility would for instance help address the challenges composers encounter when transcribing content from one score to another. It would also facilitate exploring different versions of the same passage. With a canvas mode, composers could work on different versions concurrently, add annotations as needed, and then preview and transfer some of them back to the original score, effectively committing their changes. By combining an unstructured and infinite canvas with annotation capabilities, composers could keep a record of their creative process, which would favor reflection when needed. Transitions between these views can be made seamless in pen+touch input systems, thanks to, e.g., command marks [2] or menus designed for quick access to frequently used commands [31].

6.4 Integration into Composers’ Work Environment

The proposals presented above give a glimpse of how the affordances of interactive surfaces could help address composers’ contradicting needs for structure and flexibility. They were chosen as representative examples but they by no means represent a complete solution. Much remains to be done, and developing means to better support the creative process in music notation software will require careful interaction design. As evidenced by the literature on pen+touch interaction, multiple challenges need to be overcome. New and existing features must form a coherent whole. Essential features need to be exposed primarily as direct manipulations seamlessly integrated in terms of input. In addition, the legacy of designs implemented by existing software, which composers have invested significant time to master, cannot be ignored.

An additional challenge lies in the integration of interactive surfaces into composers’ work environment. Rather than an immediate and full replacement for a desktop workstation setup, we envision interactive surfaces serving in two situations: 1) as the device of choice when away from the main workstation (mobile context of use); and 2) as a complementary tool alongside more traditional mediums such as paper and desktop computers [4]. An effective interaction design will likely position them as a preferred option over paper in certain scenarios or over computers in others. We even anticipate that, for some composers, interactive surfaces may replace the need for either paper or computers entirely. Ultimately, interactive surfaces have the potential to enable a more radical change in composers’ work environment where both paper and computers would be replaced by a single, comprehensive, and efficient medium.

7 CONCLUSION AND FUTURE WORK

Even if the creative process varies significantly from one composer to another [12], our interviews with nine professionals strongly suggest that the many constraints imposed by music notation software raise significant usability challenges. While there is – given the complexity of the music notation – a clear need for well-formedness and structure, the constant enforcement of syntactic and structural rules results in a lack of flexibility that represents a major hindrance to the creative process. The design opportunities explored in this article offer insights into how interactive surfaces could effectively tackle this issue.

Beyond interactive surfaces, an interesting avenue to explore would be the integration of other modalities such as voice commands in music writing software. Voice input could allow composers to keep holding a musical instrument while expressing commands in natural language, playing and capturing ideas seamlessly. However, our interviews underscored the intricate structure of musical notation and the need for precise, efficient interactions. Such intricate manipulations, which can be expressed by direct manipulation of the score representation, may be difficult to express with words. While natural language interaction can complement graphical interaction in music composition, it is unlikely to replace it entirely due to the complexity and nuances of musical expression and how it is captured with the staff notation.

ACKNOWLEDGMENTS

We warmly thank the nine music composers who generously devoted their time to share their invaluable experience during the interviews: Lucius Arkmann, Gustave Carpène, Coralie Fayolle, Gabriel Feret, Philippe Gantchoula, Alexandre Olech, Jérémy Peret, Denis Ramos, Maxime Senizergues. This project has been partially supported by grant ANR Continuum (ANR-21-ESRE-0030).

REFERENCES

- J. Anstice, T. Bell, A. Cockburn, and M. Setchell. 1996. The design of a pen-based musical input system. In Proceedings Sixth Australian Conference on Computer-Human Interaction (OzCHI). 260–267. https://doi.org/10.1109/OZCHI.1996.560019

- Caroline Appert and Shumin Zhai. 2009. Using Strokes as Command Shortcuts: Cognitive Benefits and Toolkit Support. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Boston, MA, USA) (CHI ’09). Association for Computing Machinery, New York, NY, USA, 2289–2298. https://doi.org/10.1145/1518701.1519052