Scaffolded versus Self-Paced Training for Human-Agent Teams

DOI: https://doi.org/10.1145/3687272.3690873

HAI '24: International Conference on Human-Agent Interaction, Swansea, United Kingdom, November 2024

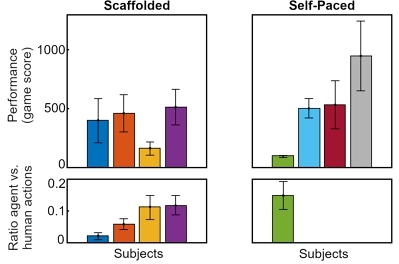

Two pilot studies compare the impacts of scaffolded versus self-paced practice on teaming and performance on an open-ended design challenge. In both studies, guiding players early on in how to leverage AI assistance (scaffolded practice) led to much more robust teaming than allowing players to learn at their own pace, but did not improve task performance.

ACM Reference Format:

Ying Choon Wu, Leon Lange, Jacob Yenney, Qiao Zhang, and Erik Harpstead. 2024. Scaffolded versus Self-Paced Training for Human-Agent Teams. In International Conference on Human-Agent Interaction (HAI '24), November 24–27, 2024, Swansea, United Kingdom. ACM, New York, NY, USA, 3 pages. https://doi.org/10.1145/3687272.3690873

1 Problem statement

Given the diverse and dynamic landscape of human-AI partnership [11], discovering effective teaming strategies is non-trivial for novices. Existing research suggests that improving communication and coordination processes [3, 10], as well as the perceived assertiveness, warmth, task readiness, and integration of an AI agent [1, 6, 17], can benefit team cohesion. This work-in-progress study explores how different types of training – specifically, self-paced versus scaffolded approaches – can also play a role in supporting the emergence of robust teaming dynamics. In self-paced practice, participants were given the opportunity to practice the game in a self-directed fashion. This approach is informed by theories of self-regulated learning [14], which encompass processes whereby learners establish their own learning goals and strategies and evaluate their own progress and outcomes. Studies have indicated that this approach can confer cognitive advantages: self-directed and self-paced learners are more likely to develop mental schemas in anticipation of new knowledge [8] and to exercise superior metacognitive control [15].

Participants assigned to scaffolded practice were instructed to engage the AI agent in specific ways. This method is in line with scaffolded learning theories [16] and Vygotsky's notion of the zone of proximal development, which is the range of objectives that a learner may be able to attain with guidance, but not independently. Scaffolding may allow novice participants to achieve robust collaboration with their AI teammate more rapidly than would be possible without guidance; however, they might also become overly reliant on experimenter prompts to the detriment of acquiring a deep understanding of the task and teaming strategies [13].

2 Specialized HAT Platform

Paralleling the game structure of Mini Metro (Dinosaur Polo Club, 2013), a HAT task was created in virtual reality (VR) centered on designing transportation routes between train stations that spawn in space at jittered intervals. Human-AI teams continuously update transit lines to ensure that all passengers are transported on time, as long wait times (overcrowding) will terminate the entire network. A second challenge is the allocation of resources: new trains and lines become available at regular intervals to alleviate pressure on existing infrastructure as the number of stations and passengers keeps growing. Further, two entirely distinct transit networks with the same constraints, but separated in space, run in parallel, requiring attention switching between networks.

A hierarchical task network (HTN) agent [9] assists the human in response to verbal commands. Spoken requests are transcribed to text, which is sent to the agent via a WebSocket API and then carried out by the agent in the game. Either the human or the HTN agent can perform any of the following actions: creating or deleting a transit line, adding stations to or removing them from a line, and adding trains to or transferring them between lines.
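The request pipeline described above (speech, then text, then a message to the agent, then a game action) can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: the `CommandMessage` fields, the JSON encoding, and the action identifiers are hypothetical and are not taken from the platform's actual WebSocket API. Only the six action types come from the description above.

```python
import json
from dataclasses import dataclass, asdict
from enum import Enum


class GameAction(str, Enum):
    """The six action types named in the text; the string values are hypothetical."""
    CREATE_LINE = "create_line"
    DELETE_LINE = "delete_line"
    ADD_STATION = "add_station"
    REMOVE_STATION = "remove_station"
    ADD_TRAIN = "add_train"
    TRANSFER_TRAIN = "transfer_train"


@dataclass(frozen=True)
class CommandMessage:
    """A transcribed spoken request (hypothetical schema, not the platform's)."""
    text: str           # speech-to-text output
    timestamp_s: float  # seconds since session start
    network_id: int     # which of the two parallel transit networks is addressed


def encode_command(msg: CommandMessage) -> str:
    """Serialize a request as JSON text, as it might be sent over a socket."""
    return json.dumps(asdict(msg), sort_keys=True)


def decode_command(payload: str) -> CommandMessage:
    """Rebuild the request on the receiving (agent) side."""
    return CommandMessage(**json.loads(payload))


def parse_action(name: str) -> GameAction:
    """Map an action identifier to a GameAction; raises ValueError if unknown."""
    return GameAction(name)
```

Keeping the action vocabulary in a single enumeration means that human and agent actions can be logged with the same labels, which simplifies later comparison of who performed what.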

This platform architecture yields several advantages. First, the task is dynamic: over time, the number of stations and the complexity of networks increase, forcing human-agent teams to discover new optimal interaction strategies in response to the increasing task demands. Second, the problem is open-ended, supporting the study of HAT in contexts without fixed and known reward functions. Third, because multiple transit networks can run simultaneously in the same virtual scene, teaming dynamics related to the negotiation of 3D space can also be studied.

3 Methods

In Study 1, eight adult volunteers were randomly assigned to self-paced or scaffolded training. Both groups received the same comprehensive task instructions and completed a structured tutorial. Next, self-paced participants explored the game independently during two practice sessions and were free to use the AI agent at will. In contrast, during their first practice session, scaffolded participants were directed by a co-present experimenter to request that the AI agent perform specific tasks according to a pre-established schedule. In their second practice session, they were instructed to perform their next self-chosen action using the AI agent every 30 seconds. After their respective forms of practice, both groups performed four test sessions. All actions (by humans and agents) and their time stamps were recorded. In Study 2, the scaffolded practice protocol was repeated with five new volunteers, and their prior experience with AI, coding, and video games was recorded. For both studies, teaming outcomes were operationalized as the ratio of agent action requests to self-performed actions. Performance outcomes were the number of passengers delivered, aggregated over task sessions.
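The two outcome measures defined above can be computed from an action log along the following lines. This is a minimal sketch under stated assumptions: the `ActionEvent` fields and the `"human"`/`"agent"` labels are hypothetical rather than the study's log format, each agent-performed action is assumed to correspond to exactly one request, and "aggregated" is taken here to mean summed.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ActionEvent:
    """One logged action (hypothetical schema, not the study's log format)."""
    timestamp_s: float
    actor: str   # "human" for self-performed, "agent" for agent-performed on request
    action: str  # e.g. "add_station"


def teaming_ratio(events: Iterable[ActionEvent]) -> Optional[float]:
    """Agent action requests divided by self-performed actions.

    Returns None when there are no self-performed actions, because the
    ratio is undefined in that case.
    """
    events = list(events)
    agent = sum(1 for e in events if e.actor == "agent")
    human = sum(1 for e in events if e.actor == "human")
    if human == 0:
        return None
    return agent / human


def passengers_delivered(per_session_counts: Iterable[int]) -> int:
    """Performance outcome: passengers delivered, summed over task sessions."""
    return sum(per_session_counts)
```

Under this definition, a participant who never engages the agent has a ratio of 0, and one agent request per twelve self-performed actions corresponds to a ratio of 1/12 (about 0.083).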

4 Results

Scaffolded training yielded a much higher quantity of agent interactions relative to human actions (Figure 1, lower panel). Whereas all four individuals in the scaffolded practice group requested agent actions (on average, one agent interaction per twelve human actions), only one person who received self-paced training engaged with the agent. Both groups showed considerable interindividual variability in performance, perhaps due to individual differences in prior experience with technology.

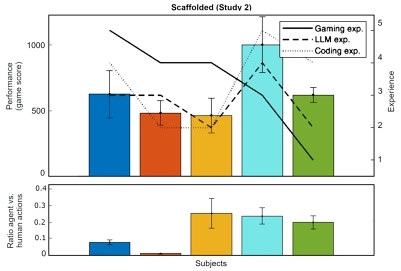

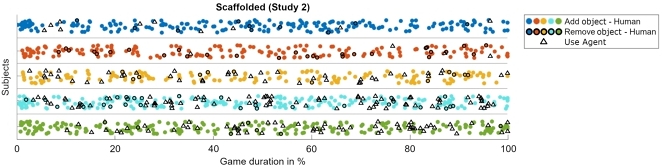

In Study 2, once again, all individuals receiving scaffolded practice engaged the agent. Prior experience with video games, coding, and LLMs did not appear to be related to teaming outcomes (Figure 2), although the small sample size precludes a definitive conclusion. Additionally, most individuals adopted complex network management strategies, both adding and removing stations by themselves or with the help of the agent throughout the test period (Figure 3).

5 Significance

This work explores how training can support the emergence of robust HAT dynamics. All nine individuals who received scaffolded training with an AI agent (four in Study 1 and five in Study 2) engaged with the agent during the subsequent test sessions. Further, in Study 2, participants actively revised their transit network designs in collaboration with their AI partners (Figure 3). Adding stations by hand was most common, but all participants also removed stations and engaged the AI to assist with both of these task types. This suggests that AI partnership supported rather than hindered the adoption of complex design strategies that involved revamping networks on the fly in response to the dynamic task landscape.

The observed benefit of scaffolded training on human-AI teamwork is consistent with other studies demonstrating positive impacts of other forms of guided training, such as cross-training [12], or human-agent co-training in general [2, 7] – and negative impacts of collaborative training that does not involve direct AI engagement [4]. One possible explanation for why scaffolded training led to regular AI engagement during test sessions is that through repeated interactions, the skills necessary to engage with the AI agent became somewhat proceduralized. Thus, because interacting with the agent was not effortful, during the test sessions, participants would be more likely to engage the agent and would have more attentional resources left over to devote to learning effective approaches to transit line construction.

However, it is important to note that the rich team dynamics observed in the scaffolded practice group did not lead to better performance than in the self-paced group. Indeed, it is possible that self-paced practice yields a deeper understanding of network design strategies, including how to redesign transit networks on the fly, in exchange for weaker skills in engaging the AI agent. For this reason, in the current task, self-paced practice might prove especially beneficial when people are paired with a highly autonomous agent, or when longer-term personal goals (e.g., discovering optimal network designs) conflict with immediate team goals (e.g., maximizing the current score) [5]. These possibilities and more will be explored with larger sample sizes. Further, the impact of self-paced versus scaffolded training will be assessed during teaming with more autonomous AI partners that operate with less explicit guidance.

Acknowledgments

This project was made possible by the Army Research Laboratory.

References

- [1] Francisco Maria Calisto, João Fernandes, Margarida Morais, Carlos Santiago, João Maria Abrantes, Nuno Nunes, and Jacinto C Nascimento. 2023. Assertiveness-based agent communication for a personalized medicine on medical imaging diagnosis. In Proceedings of the 2023 CHI conference on human factors in computing systems. 1–20.

- [2] Joseph Cohen and Andrew Imada. 2005. Agent-based training of distributed command and control teams. In Proceedings of the human factors and ergonomics society annual meeting, Vol. 49. SAGE Publications, Los Angeles, CA, 2164–2168.

- [3] Mustafa Demir, Nathan J McNeese, and Nancy J Cooke. 2016. Team communication behaviors of the human-automation teaming. In 2016 IEEE international multi-disciplinary conference on cognitive methods in situation awareness and decision support (CogSIMA). IEEE, 28–34.

- [4] Christopher Flathmann, Beau G Schelble, and Anna Galeano. 2024. Empirical Impacts of Independent and Collaborative Training on Task Performance and Improvement in Human-AI Teams. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting. SAGE Publications, Los Angeles, CA, 10711813241274425.

- [5] Christopher Flathmann, Beau G Schelble, Patrick J Rosopa, Nathan J McNeese, Rohit Mallick, and Kapil Chalil Madathil. 2023. Examining the impact of varying levels of AI teammate influence on human-AI teams. International Journal of Human-Computer Studies 177 (2023), 103061.

- [6] Alexandra M Harris-Watson, Lindsay E Larson, Nina Lauharatanahirun, Leslie A DeChurch, and Noshir S Contractor. 2023. Social perception in Human-AI teams: Warmth and competence predict receptivity to AI teammates. Computers in Human Behavior 145 (2023), 107765.

- [7] Rehan Iftikhar, Yi-Te Chiu, Mohammad Saud Khan, and Catherine Caudwell. 2023. Human–Agent Team Dynamics: A Review and Future Research Opportunities. IEEE Transactions on Engineering Management (2023).

- [8] Deanna Kuhn and Victoria Ho. 1980. Self-directed activity and cognitive development. Journal of Applied Developmental Psychology 1, 2 (1980), 119–133.

- [9] Lane Lawley and Christopher MacLellan. 2024. VAL: Interactive Task Learning with GPT Dialog Parsing. In Proceedings of the CHI Conference on Human Factors in Computing Systems. 1–18.

- [10] Nathan J McNeese, Mustafa Demir, Nancy J Cooke, and Christopher Myers. 2018. Teaming with a synthetic teammate: Insights into human-autonomy teaming. Human Factors 60, 2 (2018), 262–273.

- [11] Jason S Metcalfe, Brandon S Perelman, David L Boothe, and Kaleb McDowell. 2021. Systemic oversimplification limits the potential for human-AI partnership. IEEE Access 9 (2021), 70242–70260.

- [12] Stefanos Nikolaidis, Przemyslaw Lasota, Ramya Ramakrishnan, and Julie Shah. 2015. Improved human–robot team performance through cross-training, an approach inspired by human team training practices. The International Journal of Robotics Research 34, 14 (2015), 1711–1730.

- [13] Chuen-Tsai Sun, Dai-Yi Wang, and Hui-Ling Chan. 2011. How digital scaffolds in games direct problem-solving behaviors. Computers & Education 57, 3 (2011), 2118–2125.

- [14] Allen Tough et al. 1967. Learning without a teacher: A study of tasks and assistance during adult self-teaching projects. Educational Research Series; 3 (1967).

- [15] Jonathan G Tullis and Aaron S Benjamin. 2011. On the effectiveness of self-paced learning. Journal of Memory and Language 64, 2 (2011), 109–118.

- [16] David Wood, Jerome S Bruner, and Gail Ross. 1976. The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry 17, 2 (1976), 89–100.

- [17] Kevin T Wynne and Joseph B Lyons. 2018. An integrative model of autonomous agent teammate-likeness. Theoretical Issues in Ergonomics Science 19, 3 (2018), 353–374.

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s).

HAI '24, November 24–27, 2024, Swansea, United Kingdom

© 2024 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-1178-7/24/11.
