The Effect of an Avatar on Gaze Guidance: An Eye-Tracking Study of Historical Photograph Appreciation

DOI: https://doi.org/10.1145/3765766.3765857

HAI '25: 13th International Conference on Human-Agent Interaction, Yokohama, Japan, November 2025

This study investigated the influence of a 3D avatar on viewers’ gaze patterns as they observed historical photographs presented on large-scale 2D displays. We used a head-mounted display (HMD) as a controlled platform for eye-tracking. We compared gaze data from nine participants across two conditions: a narration-only condition and an avatar-guided condition in which a virtual agent pointed to specific regions of interest (ROIs) on the photograph. Our results show that the avatar significantly improved temporal gaze synchrony, reducing the time to first fixation on an ROI by 57% and increasing scanpath similarity among participants. However, the avatar's effect on the spatial dispersion of attention, measured by 2D entropy and root-mean-square (RMS) distance, varied with the photograph's composition and the avatar's position, highlighting a trade-off between focused attention and exploratory viewing. These findings suggest that designing effective virtual agents for educational contexts requires balancing the speed of guidance against the desired degree of focused attention.

ACM Reference Format:

Shohei Komatsu, Kosuke Shimizu, and Hidenori Watanave. 2025. The Effect of an Avatar on Gaze Guidance: An Eye-Tracking Study of Historical Photograph Appreciation. In 13th International Conference on Human-Agent Interaction (HAI '25), November 10–13, 2025, Yokohama, Japan. ACM, New York, NY, USA, 3 pages. https://doi.org/10.1145/3765766.3765857

1 Introduction

The rapid development of Artificial Intelligence (AI) has led to the deployment of virtual agents as guides in settings such as museums and educational platforms [4]. These agents can direct users’ attention and enhance their engagement. However, the principles governing how an agent's behavior influences human attention are not yet well established. An agent's behavior is a critical component of interface design, affecting the user experience in applications ranging from gaming to guided tutorials.

A key mechanism for guiding attention is establishing joint attention—the shared focus of two individuals on an object, often achieved through non-verbal cues like gaze and pointing gestures [3]. In Virtual Reality (VR), an avatar's deictic gestures are powerful cues for directing a user's gaze, potentially improving information acquisition. However, the agent itself is also a salient visual stimulus that can distract the user from the primary object of interest. This introduces a fundamental question: How does a guiding avatar's presence mediate the trade-off between directing and distracting attention?

Previous research has explored the impact of avatar realism on communication quality [1] and used eye-tracking to facilitate interaction with virtual agents [2]. Studies in museum contexts have also used mobile eye-tracking to understand visitor attention patterns [5]. Yet, few studies have systematically investigated how a co-present virtual guide affects visual attention on static, two-dimensional stimuli within an immersive VR environment. This study leverages VR as a precisely controlled experimental environment to investigate an avatar's influence on gaze patterns on 2D photographs.

This study aims to clarify how a guiding avatar alters viewers’ gaze patterns when observing historical photographs in VR. By quantitatively evaluating temporal synchrony and spatial distribution of gaze, we aim to provide actionable insights for designing effective virtual exhibitions.

2 Methods

Nine individuals (7 male, 2 female; mean age = 27.3 years, SD = 4.1) participated. All were right-handed with normal or corrected-to-normal vision. The experiment was conducted in a quiet room (300–350 lx, < 35 dB) using a PICO 4 Enterprise headset. Gaze data (WorldGazePoint X, Y, Z) were recorded at 120 Hz with a custom Unity application.

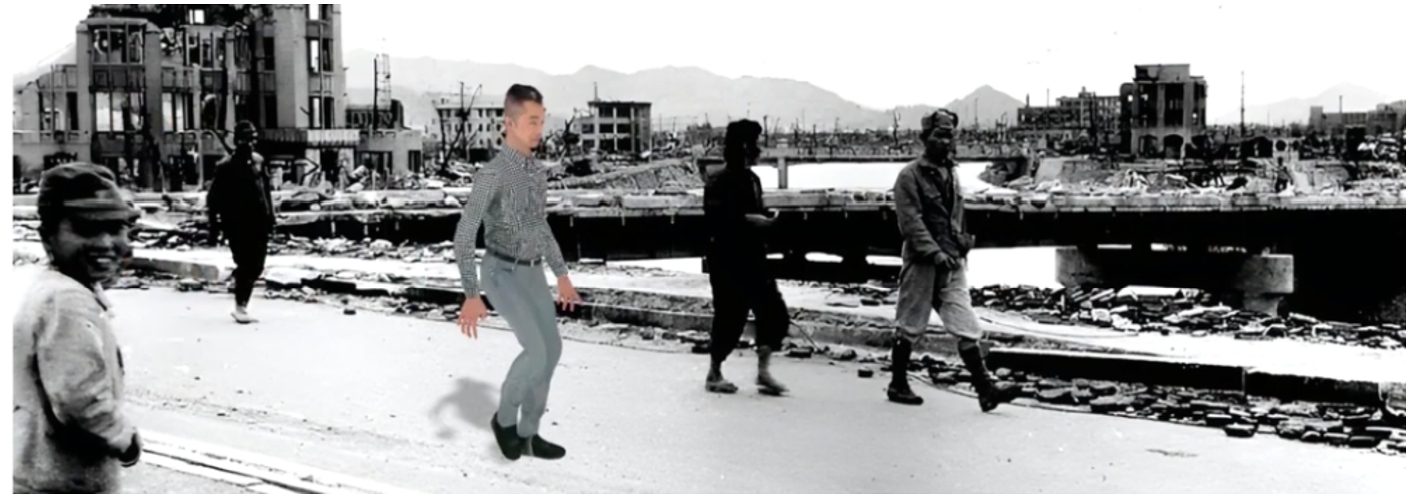

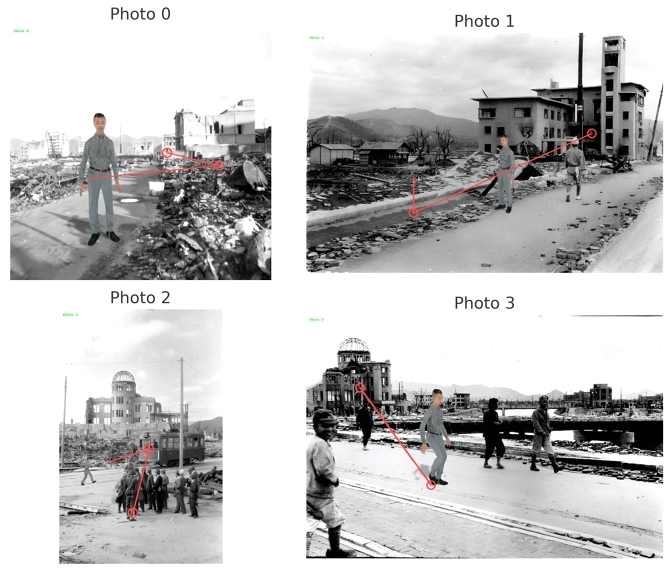

The stimuli were four monochrome historical photographs of Hiroshima after the atomic bombing. Each photograph was presented as a static 2 m × 3 m plane in the VR space, positioned 2.2 m from the participant. A 20-second Japanese narration (neutral male voice) accompanied each photograph. In the Narration condition, participants viewed the photograph with only the audio narration. In the Avatar condition, a 1.7 m tall 3D avatar was rendered between the participant and the photograph. The avatar moved along a preset path, synchronizing its deictic gestures with the narration to point at specific ROIs. Each pointing gesture consisted of a 0.8 s motion followed by a 1.2 s hold.
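The viewing geometry determines how large the photograph appeared to participants. As a minimal sketch, assuming the 3 m side is the width, the 2 m side is the height, and the participant's line of sight meets the photograph's center (none of which the text states), the full visual angle subtended by an extent s at distance d is 2·atan(s / 2d). The function name `visual_angle_deg` is a hypothetical helper.

```python
import math


def visual_angle_deg(size_m, distance_m):
    """Full visual angle (degrees) of an extent centred on the line of sight.

    Assumes the viewer looks at the middle of the extent, so each half
    subtends atan((size / 2) / distance).
    """
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))


# Assumed orientation: 3 m wide, 2 m tall, viewed from 2.2 m.
print(visual_angle_deg(3.0, 2.2))       # horizontal extent of the photograph
print(visual_angle_deg(2.0, 2.2))       # vertical extent of the photograph
print(visual_angle_deg(3.0 / 60, 2.2))  # width of one cell of a 60-column grid
```

Under these assumptions the photograph spans about 68.6° horizontally and 48.9° vertically, and one 5 cm wide cell of the 60 × 60 analysis grid spans about 1.3° near the center.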

After providing informed consent and undergoing a 2-minute eye-tracking calibration, participants completed eight trials (4 photos × 2 conditions) in a randomized order. Each trial lasted approximately 20 seconds.
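The randomized trial order can be sketched as follows, assuming a full random permutation of all eight photo-condition pairs for each participant; the text does not say whether conditions were blocked or counterbalanced. The photo indices 0–3 and the condition names are hypothetical labels, and seeding the generator (here with a participant ID) is an added assumption that makes each order reproducible.

```python
import itertools
import random

# Hypothetical labels: photos numbered 0-3 and the two condition names.
PHOTOS = [0, 1, 2, 3]
CONDITIONS = ["Narration", "Avatar"]


def trial_order(seed):
    """Return all 4 x 2 (photo, condition) pairs in a random order.

    A dedicated, seeded Random instance keeps each participant's order
    reproducible without touching the global random state.
    """
    trials = list(itertools.product(PHOTOS, CONDITIONS))
    random.Random(seed).shuffle(trials)
    return trials


for photo, condition in trial_order(seed=1):
    print(photo, condition)
```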

Gaze samples with missing values (< 3% of samples) were excluded. The analysis window for each trial ran from 4 s to 20 s. We projected the 3D gaze coordinates onto a 2D plane (X: horizontal, Z: vertical). To quantify spatial distribution, we created a density histogram for each trial by imposing a 60 × 60 grid over the stimulus. From these data, we computed four metrics: 2D Entropy, Root Mean Square (RMS) Distance from the center, First-Fixation Latency on an ROI, and Scanpath Distance (Levenshtein edit distance between participants' scanpaths).
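The preprocessing, the two spatial metrics, and the scanpath comparison can be sketched as below. This is a minimal sketch, not the authors' pipeline, and it rests on assumptions the text does not state: each gaze sample is a hypothetical `(t, x, z)` tuple in seconds and meters with the origin at the photograph's center; the plane is 3 m wide and 2 m tall; "the center" in the RMS metric is the photograph's center; and each scanpath is coded as one single-character ROI label per sample. First-Fixation Latency is left out because it depends on a fixation-detection method the text does not specify, and the scanpaths here come from raw samples rather than detected fixations.

```python
import math
from collections import Counter
from itertools import combinations

# Assumed: 3 m wide x 2 m tall plane; the 60 x 60 grid size comes from the text.
WIDTH_M, HEIGHT_M, BINS = 3.0, 2.0, 60


def analysis_window(samples, start=4.0, end=20.0):
    """Drop samples with missing coordinates and keep start <= t <= end."""
    return [s for s in samples
            if s[1] is not None and s[2] is not None and start <= s[0] <= end]


def cell_index(x, z):
    """Map plane coordinates (origin at the center) to a (column, row) cell."""
    i = int((x + WIDTH_M / 2) / WIDTH_M * BINS)
    j = int((z + HEIGHT_M / 2) / HEIGHT_M * BINS)
    # Clamp points on or beyond the edge into the outermost cells.
    return min(BINS - 1, max(0, i)), min(BINS - 1, max(0, j))


def entropy_2d(samples):
    """Shannon entropy (bits) of the gaze-density histogram over the grid."""
    counts = Counter(cell_index(x, z) for _, x, z in samples)
    n = sum(counts.values())
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def rms_distance(samples, center=(0.0, 0.0)):
    """Root-mean-square Euclidean distance (m) of gaze points from `center`."""
    cx, cz = center
    sq = [(x - cx) ** 2 + (z - cz) ** 2 for _, x, z in samples]
    return math.sqrt(sum(sq) / len(sq))


def collapse(labels):
    """Turn per-sample ROI labels into a scanpath string by merging repeats."""
    out = []
    for lab in labels:
        if not out or out[-1] != lab:
            out.append(lab)
    return "".join(out)


def levenshtein(a, b):
    """Minimum insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def mean_pairwise_scanpath_distance(scanpaths):
    """Average Levenshtein distance over all pairs of participants' scanpaths."""
    pairs = list(combinations(scanpaths, 2))
    return sum(levenshtein(a, b) for a, b in pairs) / len(pairs)
```

At 120 Hz, the 16 s analysis window holds about 1,920 samples per trial before exclusions. As a reference point for the entropy values, gaze spread evenly over all 3,600 grid cells would give log2(3600) ≈ 11.8 bits, so lower values indicate more concentrated gaze.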

3 Results and Discussion

The avatar's presence led to a statistically significant reduction in First-Fixation Latency (p = .016) and a significant decrease in Scanpath Distance (p = .028), indicating greater scanpath similarity among participants. No significant overall differences were found for 2D Entropy or RMS Distance (see Table 1).

Table 1: Gaze metrics in the Narration and Avatar conditions.

| Metric (M ± SD) | Narration | Avatar | Z | p |
|---|---|---|---|---|
| 2D Entropy [bits] | 4.93 ± 0.16 | 4.73 ± 0.46 | -1.30 | .19 |
| RMS Distance [m] | 0.150 ± 0.05 | 0.162 ± 0.02 | +0.42 | .68 |
| First-Fix. Latency [s]* | 2.8 ± 1.1 | 1.2 ± 0.5 | -2.41 | .016 |
| Scanpath Dist.†* | 8.1 ± 2.4 | 5.9 ± 1.9 | -2.20 | .028 |

† Levenshtein distance; lower values indicate higher similarity. * p < .05.
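Table 1 reports Z statistics with two-tailed p-values. The text does not name the statistical test; a Z statistic on paired data from nine participants is consistent with, for example, the normal approximation of a Wilcoxon signed-rank test, but that is an assumption. The sketch below only checks the arithmetic linking the reported Z and p values, and the 57% latency reduction quoted in the abstract; both helper names are hypothetical.

```python
import math


def two_tailed_p(z):
    """Two-tailed p-value for a standard-normal test statistic z."""
    # P(|Z| >= |z|) = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


def percent_reduction(before, after):
    """Relative decrease from `before` to `after`, in percent."""
    return (before - after) / before * 100


print(round(two_tailed_p(-2.41), 3))          # First-Fixation Latency
print(round(two_tailed_p(-2.20), 3))          # Scanpath Distance
print(round(percent_reduction(2.8, 1.2), 1))  # mean latency, Narration -> Avatar
```

Here `two_tailed_p(-2.41)` is about 0.016 and `two_tailed_p(-2.20)` about 0.028, matching the table, and `percent_reduction(2.8, 1.2)` is about 57.1, matching the 57% reduction in the abstract.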

As shown in Figure 2, gaze in the Avatar condition was more concentrated around the ROIs indicated by the avatar. However, the effect on spatial dispersion varied by photograph (Figure ). For Photos 0 and 3, the avatar's presence reduced the overall gaze spread (lower entropy). In contrast, for Photo 2, the avatar increased entropy while decreasing the RMS distance.

These results yield practical design implications for virtual agents:

- For Rapid Guidance: In time-sensitive contexts, an avatar is a highly effective tool for directing attention quickly.

- For Sustained Focus: To encourage detailed observation of a specific area, the avatar should be placed at a distance from the ROI, or its gestures should be minimized to reduce distraction.

- For Promoting Exploration: To encourage exploration of a complex scene, placing the avatar near a key feature can anchor attention before guiding subsequent exploration.

This study's limitations include a small sample size (N = 9) and a narrow range of stimuli (four static, monochrome photographs). Future research should recruit a larger, more diverse participant pool and utilize a wider range of stimuli, such as color photographs or dynamic scenes, to validate and extend these findings.

References

[1] Garau, M., Slater, M., Vinayagamoorthy, V., Brogni, A., Steed, A., & Sasse, M. A. (2003). The impact of avatar realism and eye gaze control on perceived quality of communication in a shared immersive virtual environment. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 529–536.

[2] Lee, S., Kim, S., & Lee, K. M. (2022). Depression detection using virtual avatar communication and eye tracking. Frontiers in Psychiatry, 13, 10699095.

[3] Pan, J., et al. (2024). Visual Guidance for User Placement in Avatar-Mediated Telepresence Between Dissimilar Spaces. IEEE Transactions on Visualization and Computer Graphics.

[4] VIGART. (2013). Design of a Gaze-Sensitive Virtual Social Interactive System for Children With Autism. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 21(5), 1–11.

[5] Ylirisku, S., et al. (2011). Museum Guide 2.0 – An Eye-Tracking based Personal Assistant for Museums and Exhibits. Proceedings of the 2011 Conference on Human Factors in Computing Systems.

This work is licensed under a Creative Commons Attribution 4.0 International License.

© 2025 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-2178-6/25/11.