PM4Music: A Scriptable Parametric Modeling Interface for Music Visualizer Design Using PM4VR

DOI: https://doi.org/10.1145/3653876.3653906

ICDSP 2024: 2024 8th International Conference on Digital Signal Processing (ICDSP), Hangzhou, China, February 2024

The intersection of music and visual arts has long offered immersive experiences that engage both the auditory and visual senses. Music visualizers, in particular, serve as a dynamic medium for translating sound into visual displays. This paper introduces PM4Music, a scriptable parametric modeling interface tailored for music visualizer design. Built on PM4VR, a parametric modeling interface for virtual reality, PM4Music lets artists create intricate visualizations that are synchronized with the music and enhance the auditory experience.

ACM Reference Format:

Wanwan Li. 2024. PM4Music: A Scriptable Parametric Modeling Interface for Music Visualizer Design Using PM4VR. In 2024 8th International Conference on Digital Signal Processing (ICDSP) (ICDSP 2024), February 23–25, 2024, Hangzhou, China. ACM, New York, NY, USA, 5 pages. https://doi.org/10.1145/3653876.3653906

1 INTRODUCTION

The intersection of signal processing technology [6, 39, 40] and creative digital expression [4, 42, 47] has led to innovative tools for music visualization [37] and to platforms that bridge the gap between sound and sight [44]. Music visualizers play a pivotal role in this convergence [38], providing a canvas for translating audio frequencies into visual representations [8] and offering immersive experiences that combine auditory and visual stimuli [3]. As an interdisciplinary phenomenon, music visualization intertwines the auditory and visual realms [5], reflecting both artistic expression and technological innovation. This paper examines the parametric artistic forms that shape a music visualizer's visual effects in virtual reality.

Virtual Reality (VR) [45] refers to a computer-generated simulation of a three-dimensional environment with which a person can interact in a seemingly real or physical way, using electronic devices such as headsets, gloves, or motion sensors [43]. The goal of VR is to immerse the user in a simulated world that feels as close to reality as possible, creating a sense of presence and allowing users to explore and interact with the virtual environment [2]. VR headsets are the primary hardware used to experience VR [1]. Advanced computer graphics technologies are crucial for creating realistic and immersive virtual environments [9, 11, 17, 22, 26, 27, 28]. VR has been applied to immersive interactive gaming for entertainment [7, 13, 41], simulation [21, 23], and training [46] across various fields, including civil engineering [33, 34], healthcare [35], sports [15, 16], and exercise [20, 29, 36]. It is also used in education to create immersive virtual environments for enhanced learning experiences [12, 19], as well as in artistic design [10, 25] for visualizing creations before physical construction or realization.

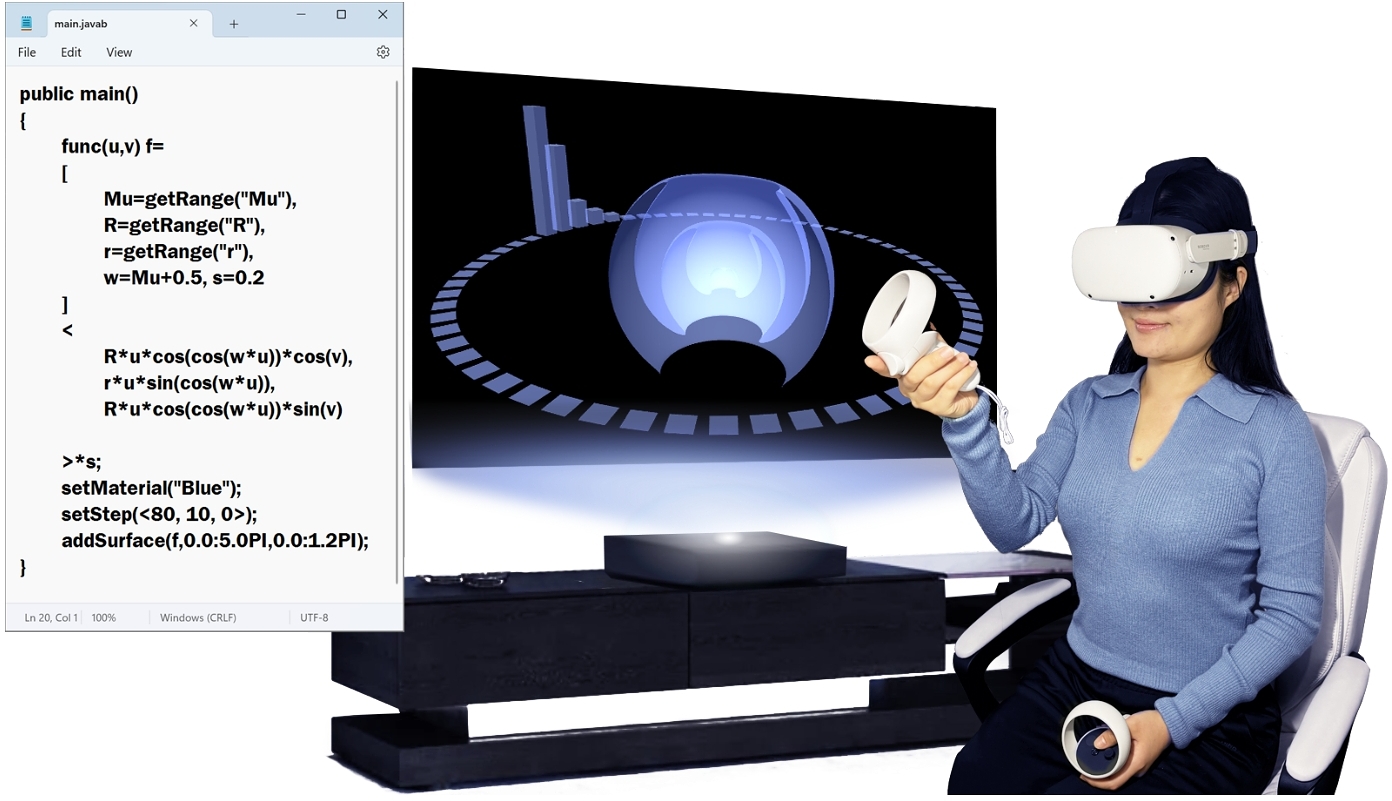

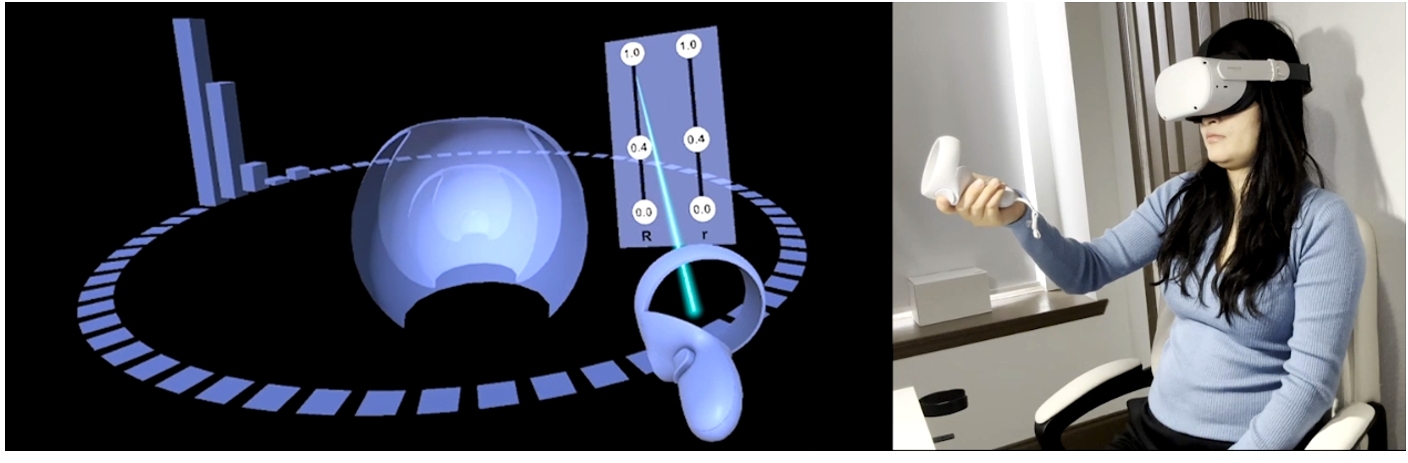

This paper introduces PM4Music, a scriptable interface for music visualization within the framework of PM4VR [14, 30, 31, 32]. PM4Music allows artists to create parametric visualizations [18, 24] that synchronize with the auditory experience, using scriptable parametric modeling to support the design of music visualizers in PM4VR. Fig. 1 provides a glimpse of PM4Music, highlighting its scriptable parametric modeling interface for music visualizer design. Designers write Java♭ scripts to generate abstract 3D shapes and then tune the shape parameters immersively in the virtual environment, using VR controllers with an Oculus Quest 2 headset.

2 TECHNICAL APPROACH

PM4VR Interface. As proposed by Li [14], PM4VR is a programming interface integrated into the Unity Editor that facilitates parametric modeling in virtual reality. PM4VR contains two key C# script files: JavabCompiler.cs and JavabScriptBehaviour.cs. JavabCompiler.cs establishes a connection between the Unity Editor and the Java♭ compiler. This real-time intercommunication is invoked by a Java♭ function called getRange("Range Variable Name"), and the connection is realized by exchanging Unity♭ scripts (*.unityb) between JVM♭ and Unity♭.
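The role of a named range variable can be pictured with a small sketch. This is not PM4VR's implementation: the source only shows the call getRange("Range Variable Name"), so the Python classes below, the bounds and default arguments, and the clamping behavior are all assumptions made for illustration. The sketch shows one plausible pattern, in which the first lookup registers a tunable parameter and later lookups return whatever value an editor or VR controller has set since.

```python
from dataclasses import dataclass


@dataclass
class RangeVariable:
    """A named tunable parameter with bounds (hypothetical structure)."""
    name: str
    low: float
    high: float
    value: float


class RangeRegistry:
    """Toy stand-in for the editor side of a script/editor exchange.

    Everything here is an assumption for illustration; it does not
    reproduce JavabCompiler.cs or the .unityb exchange format.
    """

    def __init__(self):
        self._variables = {}

    def get_range(self, name, low=0.0, high=1.0, default=None):
        # The first call registers the variable so a UI could expose it;
        # later calls return the value that has been set since then.
        if name not in self._variables:
            start = low if default is None else default
            self._variables[name] = RangeVariable(name, low, high, start)
        return self._variables[name].value

    def set_value(self, name, value):
        # Simulates a designer moving a slider; the value is clamped
        # to the variable's bounds.
        var = self._variables[name]
        var.value = min(max(value, var.low), var.high)


registry = RangeRegistry()
radius = registry.get_range("Radius", low=0.5, high=2.0, default=1.0)
registry.set_value("Radius", 5.0)  # out of bounds, so clamped to 2.0
tuned_radius = registry.get_range("Radius")
```

Keeping the lookup keyed by name means a script can be re-run after every parameter change without losing the values the designer has already tuned.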

3 EXPERIMENT RESULTS

To assess the effectiveness of our approach, we conducted numerical experiments using the scriptable parametric modeling interface of PM4Music. The experiments were implemented in Unity 3D (2019 version) and run on a machine with an Intel Core i5 CPU, 32GB DDR4 RAM, and an NVIDIA GeForce GTX 1650 graphics card with 4GB of GDDR6 memory. This configuration balances processing power and graphical capability, and it was sufficient to evaluate the performance, efficiency, and overall viability of our approach for music visualizer design in the Unity 3D environment.

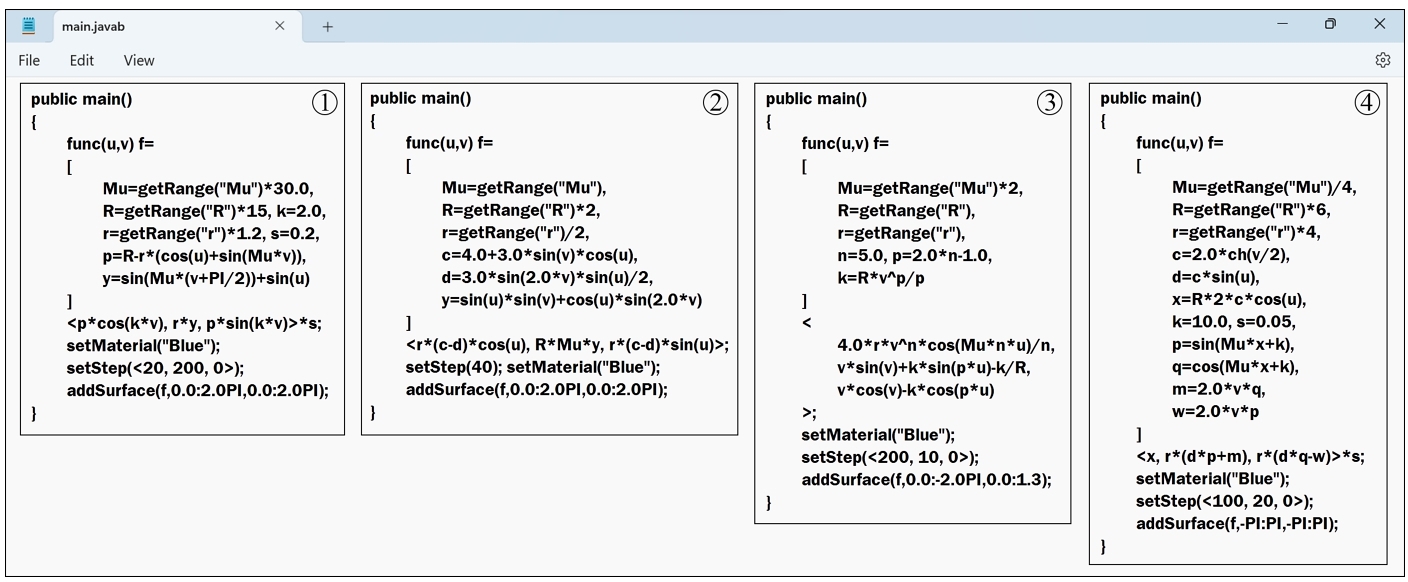

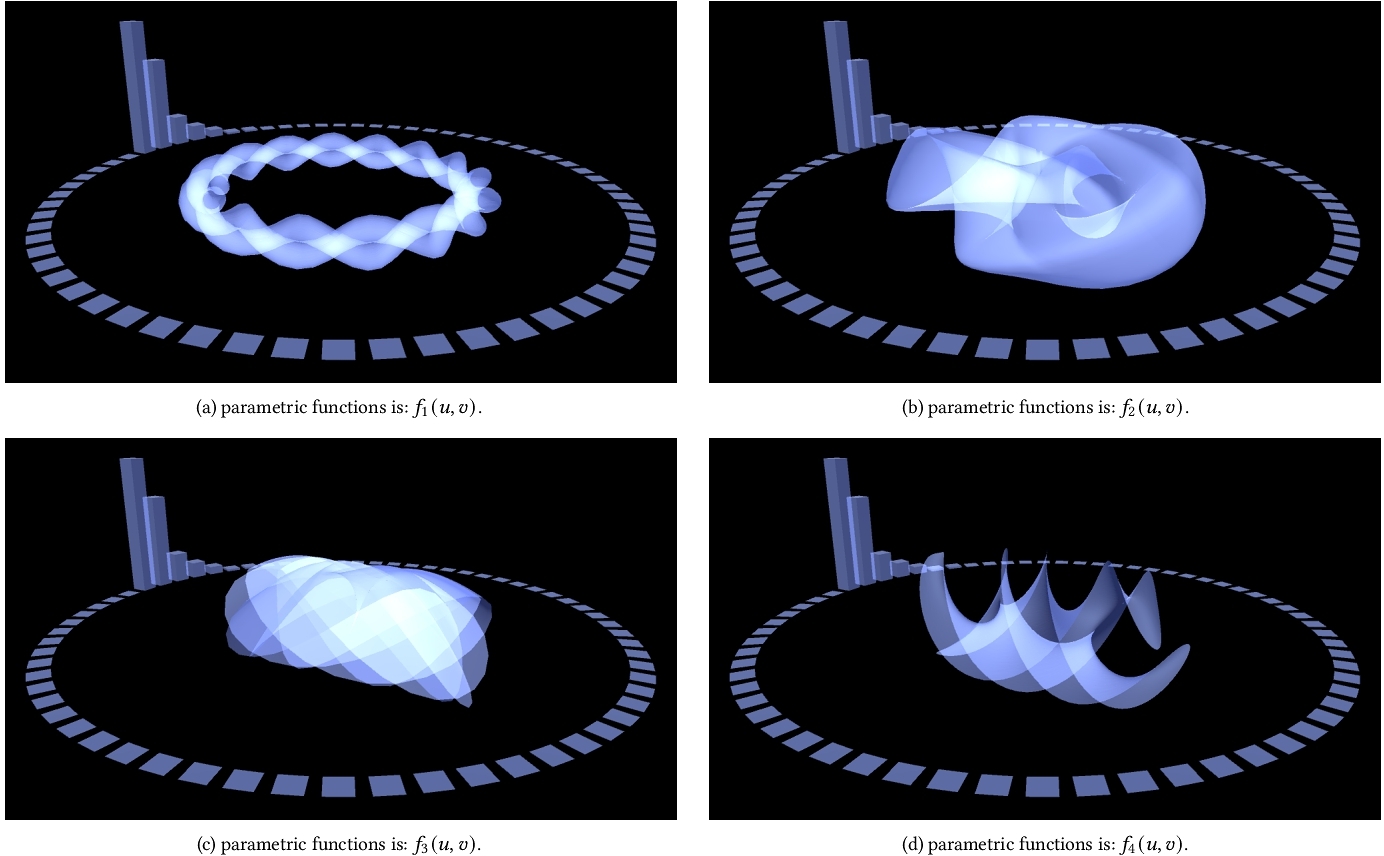

Figure 3 shows parametric music visualizers created with PM4Music under distinct parametric functions. Its four subfigures present four visualizers, each generated by a different parametric function, f1(u, v), ..., f4(u, v). The variety of results demonstrates the flexibility of PM4Music in generating varied and expressive music-driven visuals. The corresponding Java♭ script for each of the four parametric functions is given in Figure 2. Together, the two figures relate each parametric function to the 3D geometric model of the resulting music visualizer.
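As a rough illustration of how a parametric function of (u, v) yields a 3D shape, the sketch below samples a function on a regular grid and collects the resulting vertices. It is written in Python rather than Java♭, and it does not reproduce any of f1 to f4. The function pulsing_sphere, the amplitude argument, and the way amplitude scales the radius are all hypothetical, since the text does not specify how the audio signal enters the parametric functions.

```python
import math


def sample_surface(f, n_u, n_v, amplitude):
    """Evaluate f on a regular (u, v) grid over [0, 1] x [0, 1].

    Requires n_u >= 2 and n_v >= 2 so both grid endpoints are included.
    """
    vertices = []
    for i in range(n_u):
        u = i / (n_u - 1)
        for j in range(n_v):
            v = j / (n_v - 1)
            vertices.append(f(u, v, amplitude))
    return vertices


def pulsing_sphere(u, v, amplitude):
    """Hypothetical f(u, v): a sphere whose radius grows with amplitude."""
    theta = 2.0 * math.pi * u  # longitude
    phi = math.pi * v          # polar angle
    r = 1.0 + 0.5 * amplitude  # assumed mapping from loudness to radius
    return (
        r * math.sin(phi) * math.cos(theta),
        r * math.cos(phi),
        r * math.sin(phi) * math.sin(theta),
    )


# Two frames of the same shape: one for silence, one for a loud passage.
quiet = sample_surface(pulsing_sphere, 8, 5, amplitude=0.0)
loud = sample_surface(pulsing_sphere, 8, 5, amplitude=1.0)
```

Swapping pulsing_sphere for another function of the same signature changes the shape without touching the sampling loop, which is the kind of flexibility a scriptable parametric interface aims for.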

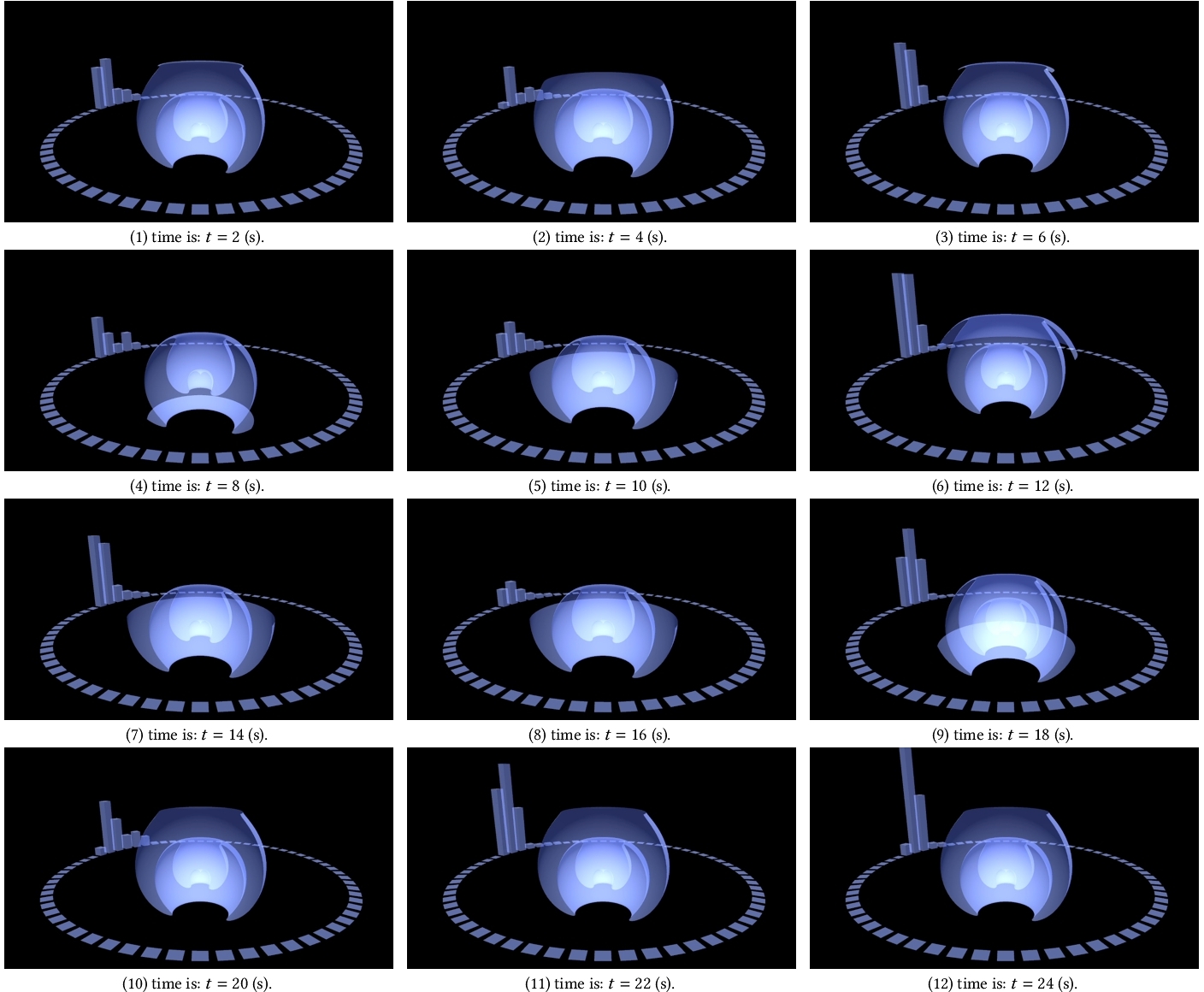

Fig. 4 shows how the shape of the parametric music visualizer, whose parametric equation is given in Figure 1, evolves over time. The 12 subfigures, arranged in a 4 by 3 grid, are screenshots of the music-driven graphics, each capturing a distinct moment in time. The snapshots were taken at regular 2-second intervals, from 2 to 24 seconds. The accompanying music for this example is a piano composition titled "Bach in G Minor" (arranged by Luo Ni).
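The capture schedule can be checked with a few lines of arithmetic. The sketch below lists the snapshot times and assigns each one a grid cell. The function name is hypothetical, and the row-major layout with three columns per row is an assumption, since the text only says the grid is 4 by 3.

```python
def snapshot_schedule(start, stop, step, columns):
    """Return (time_seconds, row, column) for each snapshot, row-major."""
    times = list(range(start, stop + 1, step))
    return [(t, k // columns, k % columns) for k, t in enumerate(times)]


# Snapshots every 2 seconds from 2 s to 24 s, laid out three per row.
schedule = snapshot_schedule(start=2, stop=24, step=2, columns=3)
```

With these values the schedule contains 12 entries, matching the 12 subfigures described for Fig. 4.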

User Study. Fig. 5 shows a user engaging with the PM4Music interface while wearing an Oculus Quest 2 headset. The figure illustrates the experience of tuning the music visualizer's parameter values within the PM4Music environment and the integration of VR technology with our parametric modeling interface. To let readers see the user's interaction in real time and to give a more in-depth view of the VR-enabled parametric modeling approach, we have created a video available at the following link: https://youtu.be/BczA3i2tflA

4 CONCLUSION

This paper introduces PM4Music, a scriptable parametric modeling interface for music visualizer design within the PM4VR framework. The interface lets users script and generate intricate visual representations that respond dynamically to changes in music parameters. By integrating a flexible, scriptable parametric modeling interface with PM4VR, PM4Music synchronizes visual elements with the accompanying music. The paper highlights the architecture, implementation details, and immersive VR experience, underscoring PM4Music's potential as a tool for artists and enthusiasts to craft responsive visual representations of music. As an evolving technology, PM4Music stands at the intersection of art and VR, offering a platform for creative expression.

REFERENCES

- Vladislav Angelov, Emiliyan Petkov, Georgi Shipkovenski, and Teodor Kalushkov. 2020. Modern virtual reality headsets. In 2020 International congress on human-computer interaction, optimization and robotic applications (HORA). IEEE, 1–5.

- YAGV Boas. 2013. Overview of virtual reality technologies. In Interactive Multimedia Conference, Vol. 2013. sn.

- Gabriel Dias Cantareira, Luis Gustavo Nonato, and Fernando V Paulovich. 2016. Moshviz: A detail+ overview approach to visualize music elements. IEEE Transactions on Multimedia 18, 11 (2016), 2238–2246.

- Yongli Dai. 2021. Digital art into the design of cultural and creative products. In Journal of Physics: Conference Series, Vol. 1852. IOP Publishing, 032042.

- Joyce Horn Fonteles, Maria Andréia Formico Rodrigues, and Victor Emanuel Dias Basso. 2013. Creating and evaluating a particle system for music visualization. Journal of Visual Languages & Computing 24, 6 (2013), 472–482.

- Ben Gold, Nelson Morgan, and Dan Ellis. 2011. Speech and audio signal processing: processing and perception of speech and music. John Wiley & Sons.

- Dong Jun Kim and Wanwan Li. 2023. A View Direction-Driven Approach for Automatic Room Mapping in Mixed Reality. In Proceedings of the 2023 5th International Conference on Image Processing and Machine Vision. 29–33.

- Kelian Li and Wanwan Li. 2021. MusicTXT: A Text-based Interface for Music Notation. In Proceedings of the 11th Workshop on Ubiquitous Music (UbiMus 2021). g-ubimus, 62–71.

- Wanwan Li. 2021. Make uber faster: Automatic optimization of uber schedule using openstreetmap data. In Proceedings of the 2021 EURASIAGRAPHICS. 19–26.

- Wanwan Li. 2021. Pen2VR: A Smart Pen Tool Interface for Wire Art Design in VR. (2021).

- Wanwan Li. 2021. Procedural Modeling of the Great Barrier Reef. In Advances in Visual Computing: 16th International Symposium, ISVC 2021, Virtual Event, October 4-6, 2021, Proceedings, Part I. Springer, 381–391.

- Wanwan Li. 2022. Creative Molecular Model Design for Chemistry Edutainment. In Proceedings of the 14th International Conference on Education Technology and Computers. 226–232.

- Wanwan Li. 2022. Musical Instrument Performance in Augmented Virtuality. In Proceedings of the 6th International Conference on Digital Signal Processing. 91–97.

- Wanwan Li. 2022. PM4VR: A Scriptable Parametric Modeling Interface for Conceptual Architecture Design in VR. In Proceedings of the 18th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry. 1–8.

- Wanwan Li. 2022. Procedural Marine Landscape Synthesis for Swimming Exergame in Virtual Reality. In 2022 IEEE Games, Entertainment, Media Conference (GEM). IEEE, 1–8.

- Wanwan Li. 2022. Simulating Ice Skating Experience in Virtual Reality. In 2022 7th International Conference on Image, Vision and Computing (ICIVC). IEEE, 706–712.

- Wanwan Li. 2022. Simulating Virtual Construction Scenes on OpenStreetMap. In Proceedings of the 6th International Conference on Virtual and Augmented Reality Simulations. 14–20.

- Wanwan Li. 2023. Animating Parametric Kinetic Spinner in Virtual Reality. In 2023 7th International Conference on Advances in Image Processing (ICAIP 2023). 1–5.

- Wanwan Li. 2023. Compare The Size: Automatic Synthesis of Size Comparison Animation in Virtual Reality. In 2023 5th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA). IEEE, 1–4.

- Wanwan Li. 2023. Elliptical4VR: An Interactive Exergame Authoring Tool for Personalized Elliptical Workout Experience in VR. In Proceedings of the 2023 5th International Conference on Image, Video and Signal Processing. 111–116.

- Wanwan Li. 2023. InsectVR: Simulating Crawling Insects in Virtual Reality for Biology Edutainment. In Proceedings of the 7th International Conference on Education and Multimedia Technology. 8–14.

- Wanwan Li. 2023. Patch-based Monte Carlo Terrain Upsampling via Gaussian Laplacian Pyramids. In Proceedings of the 2023 5th International Conference on Image, Video and Signal Processing. 172–177.

- Wanwan Li. 2023. PlanetTXT: A Text-based Planetary System Simulation Interface for Astronomy Edutainment. In Proceedings of the 2023 14th International Conference on E-Education, E-Business, E-Management and E-Learning. 47–53.

- Wanwan Li. 2023. SurfChessVR: Deploying Chess Game on Parametric Surface in Virtual Reality. In 2023 9th International Conference on Virtual Reality (ICVR). IEEE, 171–178.

- Wanwan Li. 2023. Synthesizing 3D VR Sketch Using Generative Adversarial Neural Network. In Proceedings of the 2023 7th International Conference on Big Data and Internet of Things. 122–128.

- Wanwan Li. 2023. Synthesizing Realistic Cracked Terrain for Virtual Arid Environment Generation. In 2023 8th International Conference on Communication, Image and Signal Processing (CCISP). IEEE, 361–366.

- Wanwan Li. 2023. Synthesizing virtual night scene on openstreetmap. In 2023 International Conference on Communications, Computing and Artificial Intelligence (CCCAI). IEEE, 177–181.

- Wanwan Li. 2023. Synthesizing Virtual World Palace Scenes on OpenStreetMap. In 2023 7th International Conference on Computer, Software and Modeling (ICCSM). IEEE, 62–66.

- Wanwan Li. 2023. Terrain synthesis for treadmill exergaming in virtual reality. In 2023 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). IEEE, 263–269.

- Wanwan Li. 2024. PM4Car: A Scriptable Parametric Modeling Interface for Concept Car Design Using PM4VR. In 2024 International Conference on Computer Graphics and Image Processing (CGIP). 1–6.

- Wanwan Li. 2024. PM4Pottery: A Scriptable Parametric Modeling Interface for Conceptual Pottery Design Using PM4VR. In 2024 International Conference on Automation, Robotics, and Applications (ICARA). 1–5.

- Wanwan Li. 2024. PM4WireArt: A Scriptable Parametric Modeling Interface for Conceptual Wire Art Design Using PM4VR. In 2024 International Conference on Information and Computer Technologies (ICICT). 1–6.

- Wanwan Li, Behzad Esmaeili, and Lap-Fai Yu. 2022. Simulating Wind Tower Construction Process for Virtual Construction Safety Training and Active Learning. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). IEEE, 369–372.

- Wanwan Li, Haikun Huang, Tomay Solomon, Behzad Esmaeili, and Lap-Fai Yu. 2022. Synthesizing personalized construction safety training scenarios for VR training. IEEE Transactions on Visualization and Computer Graphics 28, 5 (2022), 1993–2002.

- Wanwan Li, Javier Talavera, Amilcar Gomez Samayoa, Jyh-Ming Lien, and Lap-Fai Yu. 2020. Automatic synthesis of virtual wheelchair training scenarios. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). IEEE, 539–547.

- Wanwan Li, Biao Xie, Yongqi Zhang, Walter Meiss, Haikun Huang, and Lap-Fai Yu. 2020. Exertion-aware path generation. ACM Trans. Graph. 39, 4 (2020), 115.

- Hugo B Lima, Carlos GR Dos Santos, and Bianchi S Meiguins. 2021. A survey of music visualization techniques. ACM Computing Surveys (CSUR) 54, 7 (2021), 1–29.

- Delfina Malandrino, Donato Pirozzi, and Rocco Zaccagnino. 2018. Visualization and music harmony: Design, implementation, and evaluation. In 2018 22nd International Conference Information Visualisation (IV). IEEE, 498–503.

- Meinard Mueller, Bryan A Pardo, Gautham J Mysore, and Vesa Valimaki. 2018. Recent advances in music signal processing [from the guest editors]. IEEE Signal Processing Magazine 36, 1 (2018), 17–19.

- Meinard Muller, Daniel PW Ellis, Anssi Klapuri, and Gaël Richard. 2011. Signal processing for music analysis. IEEE Journal of selected topics in signal processing 5, 6 (2011), 1088–1110.

- Devin Perry, Thomas Bivins, Bianca Dehaan, and Wanwan Li. 2023. Procedural Rhythm Game Generation in Virtual Reality. In Proceedings of the 2023 6th International Conference on Image and Graphics Processing. 218–222.

- Archana Rani. 2018. Digital Technology: It's Role in Art Creativity. Journal of Commerce and Trade 13, 2 (2018), 61–65.

- William R Sherman and Alan B Craig. 2018. Understanding virtual reality: Interface, application, and design. Morgan Kaufmann.

- Yujia Wang, Wei Liang, Wanwan Li, Dingzeyu Li, and Lap-Fai Yu. 2020. Scene-aware background music synthesis. In Proceedings of the 28th ACM International Conference on Multimedia. 1162–1170.

- Isabell Wohlgenannt, Alexander Simons, and Stefan Stieglitz. 2020. Virtual reality. Business & Information Systems Engineering 62 (2020), 455–461.

- Biao Xie, Huimin Liu, Rawan Alghofaili, Yongqi Zhang, Yeling Jiang, Flavio Destri Lobo, Changyang Li, Wanwan Li, Haikun Huang, Mesut Akdere, et al. 2021. A review on virtual reality skill training applications. Frontiers in Virtual Reality 2 (2021), 645153.

- Wei Zhu. 2020. Study of creative thinking in digital media art design education. Creative Education 11, 2 (2020), 77–85.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than the author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

ICDSP 2024, February 23–25, 2024, Hangzhou, China

© 2024 Copyright held by the owner/author(s). Publication rights licensed to ACM.

ACM ISBN 979-8-4007-0902-9/24/02.
