Tracking Drones with Manual Operation Representation

DOI: https://doi.org/10.1145/3638985.3639013

ICIT 2023: 2023 The 11th International Conference on Information Technology: IoT and Smart City, Kyoto, Japan, December 2023

The Global Positioning System (GPS) is a system that provides position information such as latitude, longitude, and altitude anywhere on the earth. If this system is used, however, it may cause (1) position acquisition interruptions in places where GPS signals do not reach, (2) increased power consumption due to the constant processing of numerous satellite signals, and (3) cyber-attacks targeting GPS receivers. Until now, many methods have been proposed to overcome these challenges individually. This study, in contrast, addresses these challenges simultaneously for the quadcopter (abbreviated as drone) case, where the use of GPS is a prerequisite.

Drones used in logistics, agriculture, surveillance, and other industrial fields are transitioning from manual operation to autopilot due to geographic expansion of services and reduction of labor costs. This study uses Perceiver, a state-of-the-art deep-learning model, to track an autopilot drone from takeoff to its current position in real time by using the roll, pitch, yaw, and throttle signals. We call these four signals Manual Operation Representation (MOR) because a drone can be manually controlled with a radio controller that transmits them. Our method can complement GPS at any time and can detect a malfunction or cyber-attack if the flight route calculated by the deep-learning model deviates from the route specified by the autopilot.

ACM Reference Format:

Geng Wang and Kazumasa Oida. 2023. Tracking Drones with Manual Operation Representation. In 2023 The 11th International Conference on Information Technology: IoT and Smart City (ICIT 2023), December 14–17, 2023, Kyoto, Japan. ACM, New York, NY, USA, 6 pages. https://doi.org/10.1145/3638985.3639013

1 INTRODUCTION

The Global Positioning System (GPS) is a satellite-based system for measuring location information on the earth. Because GPS can measure position with an error of only a few meters, it is widely used for navigation in ships, aviation, and automobiles. However, GPS has the following challenges. (1) There are GPS-less environments where GPS signals cannot reach due to physical obstructions such as high-rise buildings, dense clouds, and trees. (2) GPS receivers consume a lot of power because they must continuously receive trajectory information from many satellites in order to track them [7, 14]. (3) Cyber-attacks targeting GPS users, such as GPS spoofing [19] and jamming [23], need to be addressed.

Many methods have been proposed to address these three challenges individually. Adopting a set of methods that overcome all the challenges could result in building an expensive and power-hungry system. This study proposes an inexpensive and energy-efficient approach while simultaneously addressing these three challenges. To this end, a system is designed with the following premises: (1) It replaces GPS temporarily at any time (to cover GPS-less areas or to save power consumption). (2) It follows predetermined routes (to detect cyber-attacks based on the difference between the predetermined and actual routes). Therefore, we are targeting neither systems that operate completely without GPS (e.g., long-range outdoor localization systems [16, 20]) nor systems that complement some of the challenges (e.g., energy-efficient methods that minimize the use of GPS [27]).

The second premise mentioned above does not significantly narrow the scope of application. As a case study, this study targets autopilot quadcopters (hereafter referred to as drones) used in industries such as logistics, agriculture, surveillance, and infrastructure inspection. Currently, drones used in these fields are shifting from manual to autopilot operation due to geographic expansion of services, reduction of labor costs, and improved legislation. In December 2022, Japan introduced a new rule for so-called “LEVEL 4” flight (remotely piloted flight beyond visual line of sight in an inhabited area). Such drones fly according to predetermined flight routes specified by the autopilot programs.

Table 1: Summary of GPS alternative methods.

| | INS | Vision | RF | MOR |
|---|---|---|---|---|
| Keyword | gyroscope, accelerometer, magnetometer | video, optical flow, map | Wi-Fi, cellular, radar, LoRa, beacon | manual operation |
| Signal source | sensor | camera | transceiver, receiver | autopilot software |
| Environment dependence | independent | visual obstacle, poorly lit area | radio interference | weather |
| Challenge | error accumulation | image processing & storage cost | base station cost | ML training cost |

In manual operation with radio controllers, drones are controlled by roll, pitch, yaw, and throttle signals. Hereinafter, these four signals are called manual operation representation (MOR). This study proposes a method to convert an MOR time series into an autopilot program using Perceiver [10, 11], a state-of-the-art deep-learning model. This conversion is feasible in principle because the flight route created by the autopilot can be reproduced by manual operation. Our approach does not require expensive hardware. Training of the Perceiver system is performed before flight, so that the computational cost during flight is small. In addition, a smaller number of flight paths would greatly reduce training costs. Furthermore, if the calculated flight path deviates from the path specified by the autopilot, a malfunction or cyber-attack can be detected. Time series data from both simulations and actual flights show that Perceiver can calculate flight paths with high accuracy.

The rest of the paper is organized as follows. Section 2 presents prior work related to our study. Section 3 details the MOR-based localization method and the software and hardware configuration of the experimental drone system. Section 4 describes the experimental conditions and results. Finally, the conclusions of this study are presented in Section 5.

2 RELATED WORK

Various GPS alternative methods are currently available and are still being improved to obtain the current location of objects moving outdoors. Table 1 provides a summary of these methods. Note that there are many other approaches if indoor tracking systems [25], which are out of scope, are taken into account. Inertial navigation systems (INSs), which use sensors such as accelerometers and gyroscopes, are a widespread complement to GPS. An INS is highly accurate and has a fast response time, but it can only be used for a short period because errors accumulate over time. Research is underway to improve INSs using deep-learning technologies [8].

The vision- and radio frequency (RF)-based localization methods are also popular alternatives. The former captures images of the surrounding environment with visual cameras and calculates the location and direction from them [15, 22]. The latter typically uses the distance between the source and receiver of a radio signal, the signal's arrival time, and the signal's strength to calculate location [4]. Because different methods pose different challenges, an appropriate method should be selected according to the conditions of use. To obtain a more accurate estimate, sensor fusion technologies that combine information from multiple sensors such as cameras and Wi-Fi receivers have been developed [3, 30].

The MOR-based localization method differs substantially from existing ideas. (1) Our approach completely ignores signals from outside, whereas existing methods, with the exception of INS, do not. Therefore, vision- and RF-based approaches could suffer from spoofing and interference attacks. Because the Perceiver model calculates the interactions among all the elements of a time series (rather than processing the time series sequentially as INS does), errors do not accumulate over time. Thus, our approach can handle longer time series than INSs. (2) The existing methods are all based on signal processing because they extract positional information from the dynamics of hardware output. In contrast, our method can be regarded as a human-level approach because we infer flight routes from the dynamics of MOR signals that directly reflect manual operation. Although existing methods might be more accurate, our approach is less expensive because it does not require special hardware or infrastructure.

The three existing approaches in Table 1 can be extended to detect GPS-related attacks because any discrepancy between the measurements (e.g., positions and velocities) of these methods and those of GPS suggests an attack. Ceccato et al. proposed a method that is based on the comparison between GPS and INS measurements [5]. Qiao et al. compared the visual sensor velocity to the GPS velocity estimate [24]. Dang et al. obtained statistical properties of path loss measurements collected from nearby cellular base stations to determine the credibility of GPS positions. Meanwhile, the MOR-based method can detect such attacks without additional functionality: inconsistencies between the autopilot route and the actual flight path suggest that an attack is occurring [21].
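The consistency check described above can be sketched as a comparison between the planned route and the route estimated from MOR signals. This is a minimal illustration, not the authors' detector: the function names, the time-aligned position lists, and the 2 m tolerance are all assumptions made for the example.

```python
import math

def max_route_deviation(planned, estimated):
    """Largest Euclidean distance (m) between time-aligned planned and estimated positions."""
    return max(math.dist(p, e) for p, e in zip(planned, estimated))

def is_suspicious(planned, estimated, threshold_m=2.0):
    """Flag a possible malfunction or attack; threshold_m is an assumed tolerance."""
    return max_route_deviation(planned, estimated) > threshold_m

# Hypothetical (north, east, altitude) positions in meters.
planned = [(0.0, 0.0, 5.0), (5.0, 0.0, 5.0), (10.0, 0.0, 5.0)]
on_course = [(0.1, 0.0, 5.0), (5.0, 0.2, 5.1), (9.9, 0.1, 5.0)]
drifting = [(0.1, 0.0, 5.0), (5.0, 3.0, 5.0), (10.0, 6.0, 5.0)]

print(is_suspicious(planned, on_course))  # small deviations stay below the tolerance
print(is_suspicious(planned, drifting))   # a growing eastward drift exceeds it
```

In practice the tolerance would have to reflect the estimation error of the model and disturbances such as wind, neither of which is modeled here.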

Recently, Perceiver has been refined to cope with various time-series inputs. Chauhan et al. used the model to cope with irregular time series (ITS) in electronic health records [6]. Sbrana and Castro demonstrated outstanding performance of a Perceiver model in cryptocurrency price forecasting and portfolio selection [26]. Kwon and Lee extended a Perceiver model to predict the temperature of the poloidal field coils [12].

3 EXPERIMENTAL SYSTEM

3.1 Drone System

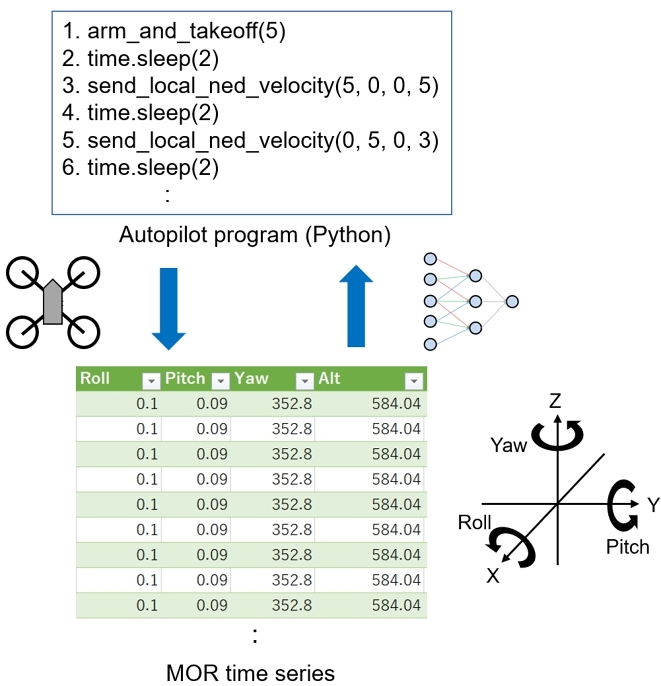

Fig. 1 shows our experimental drone system, which carries a Raspberry Pi to execute autopilot and localization programs. The system was developed with ArduPilot [2], one of the most widely used autopilot platforms, which accounts for the vast majority of open-source autopilot use. ArduPilot controls Pixhawk [18], the flight controller of the drone system, and saves a dataflash log, which contains everything that happens during the flight, including outputs of many Pixhawk sensors [17].

This study made use of the four MOR signals (roll, pitch, yaw, and throttle) in the dataflash log, except that the throttle was replaced by the altitude of the barometer because the travel speed of drones is constant in this study. In the experiment, the throttle is used only to gain altitude. Increasing the throttle increases the motor power of the drone, thereby changing its altitude and travel speed. Unlike competitive drones, industrial drones are considered to have less throttle variation so as not to overload the batteries and motors. Notice that the MOR-based method is inexpensive to implement because it uses only existing Pixhawk sensors as input sources.
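As an illustration of how such an input could be assembled, the sketch below merges attitude and barometer records into rows of (roll, pitch, yaw, altitude) on a fixed 100 ms grid. The record layout (plain tuples with millisecond timestamps) and the zero-order-hold resampling are assumptions for this example; the actual dataflash log format and the authors' preprocessing are not reproduced here.

```python
def build_mor_series(att, baro, period_ms=100):
    """Merge attitude and barometer records into MOR rows on a fixed time grid.

    att:  list of (t_ms, roll, pitch, yaw), sorted by time (hypothetical layout)
    baro: list of (t_ms, altitude), sorted by time (hypothetical layout)
    Each grid point takes the most recent value of every signal (zero-order hold).
    """
    series = []
    i = j = 0
    t = max(att[0][0], baro[0][0])        # first instant at which both signals exist
    t_end = min(att[-1][0], baro[-1][0])  # last instant covered by both signals
    while t <= t_end:
        while i + 1 < len(att) and att[i + 1][0] <= t:
            i += 1
        while j + 1 < len(baro) and baro[j + 1][0] <= t:
            j += 1
        series.append((att[i][1], att[i][2], att[i][3], baro[j][1]))
        t += period_ms
    return series

att = [(0, 0.0, 0.0, 90.0), (40, 1.0, -2.0, 90.0), (120, 1.5, -2.5, 91.0), (200, 0.0, 0.0, 92.0)]
baro = [(0, 0.0), (100, 0.5), (200, 1.0)]
for row in build_mor_series(att, baro):
    print(row)
```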

3.2 Conversion Program

Fig. 2 aptly represents the objective of this study. The autopilot program in the figure consists of functions in the DroneKit-Python tool, which send control messages to the Pixhawk through the Micro Air Vehicle Communication Protocol (MAVLink) [2, 17]. In Fig. 2, for example, arm_and_takeoff(5) instructs the Pixhawk to take off and reach an altitude of 5 m, time.sleep(2) instructs it to hover for 2 s, and send_local_ned_velocity(5,0,0,5) requests it to move north at a rate of 5 m/s for 5 s (so that it reaches the first waypoint). As the drone flies according to the program, new sensor measurements (roll, pitch, yaw, altitude) are added to the time series every 100 ms. The 3D coordinates in the figure represent the rotation angles of the roll, pitch, and yaw sensors when the center of gravity of the drone moving in the X-axis direction is placed at the origin of the coordinates.
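To make the relationship between an autopilot program and a flight route concrete, the following sketch interprets the three commands quoted above without any drone hardware. The tuple encoding of the program and the function planned_waypoints are hypothetical; the argument order (north velocity, east velocity, down velocity, duration) is inferred from the description of send_local_ned_velocity(5,0,0,5) and is an assumption.

```python
# Each step mirrors a call from the program in Fig. 2; the tuple encoding is hypothetical.
PROGRAM = [
    ("arm_and_takeoff", 5),                   # climb to an altitude of 5 m
    ("sleep", 2),                             # hover for 2 s
    ("send_local_ned_velocity", 5, 0, 0, 5),  # 5 m/s north for 5 s
]

def planned_waypoints(program):
    """Return the (north, east, altitude) position in meters reached after each step."""
    north = east = alt = 0.0
    points = []
    for step in program:
        name, args = step[0], step[1:]
        if name == "arm_and_takeoff":
            alt = float(args[0])
        elif name == "send_local_ned_velocity":
            vx, vy, vz, duration = args
            north += vx * duration
            east += vy * duration
            alt -= vz * duration  # NED frame: positive z velocity points down
        # "sleep" (hover) leaves the position unchanged
        points.append((north, east, alt))
    return points

print(planned_waypoints(PROGRAM))
```

Under these assumptions the last step ends 25 m north of the takeoff point at an altitude of 5 m, which is the kind of planned position that the estimated route can be compared against.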

This study proposes a neural network program (referred to as a conversion program), which converts the time series into an autopilot program. Note that the autopilot program represents the flight route, so the planned current position can be retrieved from it. As the size of the time series increases, the converted autopilot program becomes longer. For a concise representation of the converted autopilot program, the conversion program outputs

(1)

(2)

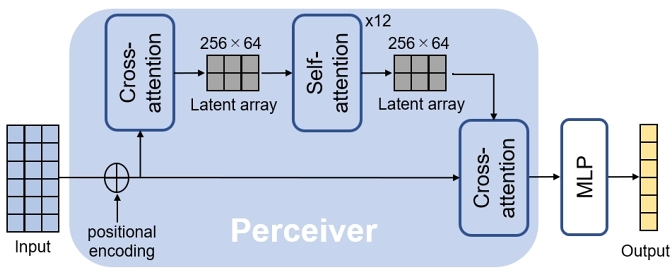

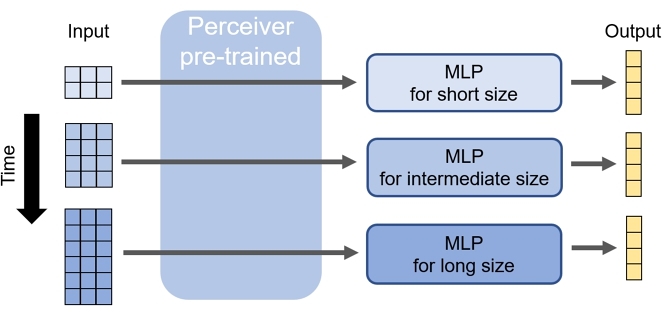

Fig. 3 illustrates the software structure of the conversion program, in which the input is an MOR time series and the output is 3(m + 1) + 1 real numbers given in (1). The conversion program consists of Perceiver, which analyzes the time series, and Multi-Layer Perceptron (MLP), a 3-layer neural network added to solve a multi-variate regression model. In the Perceiver model, the first cross-attention and self-attention (the second cross-attention) operations serve as an encoder (a decoder). The first cross-attention compresses a long input array into a latent array of size 256 × 64. The self-attention iteratively computes the interactions between all elements of the latent array. The Perceiver code used in this study relies on the Hugging Face Transformers library [1], a Python package that contains open-source implementations of transformer models for text, image, and audio tasks.
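The compression performed by the first cross-attention can be illustrated with a deliberately simplified sketch: a single attention head, no learned projection matrices, and a tiny 8 × 4 latent array instead of the 256 × 64 array used in this study. It is written with the standard library only and is not the Hugging Face implementation; its purpose is to show that the output size depends on the latent array, not on the length of the input time series.

```python
import math
import random

def cross_attention(latents, inputs):
    """Single-head cross-attention without learned projections (a simplification).

    latents: M query vectors; inputs: N key/value vectors of the same width D.
    Returns M vectors of width D regardless of N.
    """
    d = len(latents[0])
    out = []
    for q in latents:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in inputs]
        top = max(scores)                                # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, inputs)) / total
                    for c in range(d)])
    return out

rng = random.Random(0)
# 300 rows of 4 signals stand in for 30 s of (roll, pitch, yaw, altitude) at 100 ms.
series = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(300)]
latents = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(8)]

compressed = cross_attention(latents, series)
print(len(series), "input rows ->", len(compressed), "latent rows")
```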

Among various deep learning architectures that can handle time series [13], Perceiver, an enhanced version of Transformer [28], was selected for the following reasons.

- Long-range dependency: Industrial drones may travel long distances, for example, when transporting goods from a retail store. Long Short-Term Memory (LSTM) [9] and Transformer [28] are two well-known architectures that can capture the departure and arrival times of waypoints, even with long travel times. The latter model is easier to handle than the former (because the former faces the problem of vanishing and exploding gradients) and easier to parallelize than the former (because the former operates sequentially) [13].

- Error accumulation free: Inertial navigation systems process a time series sequentially, so that error accumulation occurs. In contrast, all Transformer-derived models are error accumulation free because they perform self-attention operations (the interactions among all the elements of a time series are obtained) instead of sequential operation (see Fig. 3).

- Input data compression: An input time series should be sufficiently compressed because there are no features that should be extracted from drone behavior while moving between two waypoints. This study employs Perceiver because it can compress input time series. Note that the self-attention operation consumes computation and memory resources on the order of the square of the (compressed) time series length [10, 29].
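The benefit of compression can be checked with simple arithmetic. The sketch below counts query-key pairs, a rough proxy for attention cost, for self-attention applied directly to the input versus a Perceiver-style pipeline with a latent length of 256 (the latent array size stated above). The 10-minute flight and the single self-attention layer are assumptions chosen only for illustration.

```python
def self_attention_pairs(length):
    """Query-key pairs when every element attends to every other element."""
    return length * length

def perceiver_pairs(input_len, latent_len=256, self_layers=1):
    """One cross-attention (inputs -> latents) plus self-attention on the latents only."""
    return input_len * latent_len + self_layers * latent_len * latent_len

# Assumed example: a 10-minute flight sampled every 100 ms gives 6000 time steps.
n = 10 * 60 * 10
print(self_attention_pairs(n))  # 36000000
print(perceiver_pairs(n))       # 1601536
```

With these numbers the direct approach evaluates about 22 times more pairs, and the gap widens linearly as the flight gets longer.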

4 EXPERIMENTS

4.1 Datasets

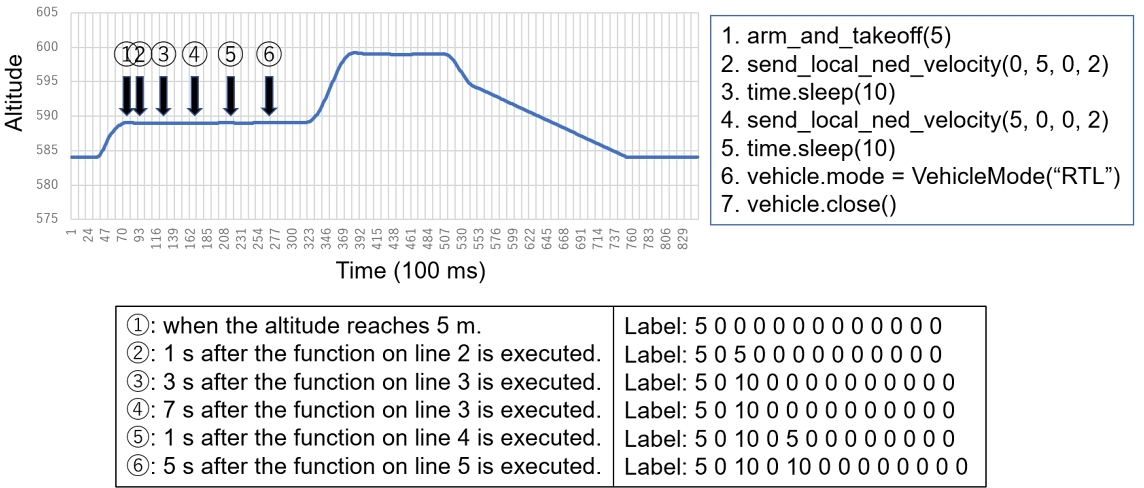

Fig. 4 illustrates how training and test data are collected. In the figure, an autopilot program (i.e., one flight route) generates six different MOR time series depending on when the time series is sampled. In the program, the drone flies at a constant speed of 5 m/s. It first goes 10 m to the east, then 10 m to the north, and finally back to the starting point. Each time series has a label (a correct answer). Note that at the second (fifth) sampling point, the drone is on its way to the first (second) waypoint, and at the third, fourth, and sixth sampling points, the drone is stationary in the air.
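The sampling scheme can be sketched as taking prefixes of a single recorded time series, one prefix per sampling time. The function name, the sampling times, and the stand-in series below are hypothetical, and the label attached to each prefix is not modeled.

```python
def prefixes_at(series, sample_times_ms, period_ms=100):
    """Return one input per sampling time: the series from takeoff up to that time.

    series holds one row per period_ms, starting at t = 0.
    """
    return [series[: t // period_ms + 1] for t in sample_times_ms]

# Stand-in for a recorded MOR series: 100 rows, i.e. 9.9 s of flight at 100 ms.
series = list(range(100))
samples = prefixes_at(series, [1000, 2500, 9900])  # hypothetical sampling times
print([len(s) for s in samples])  # [11, 26, 100]
```

Every prefix starts at takeoff, so later samples contain the earlier ones and the inputs vary widely in length.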

We used two mutually disjoint datasets. One dataset (referred to as dataset A) was derived from 40 flight routes with at most three waypoints, and consists of 152 pairs of time series and labels. The other (referred to as dataset B) was derived from 12 flight routes with two waypoints, and consists of 24 pairs of time series and labels. The former is used for pre-training and the latter for fine-tuning.

4.2 Evaluation

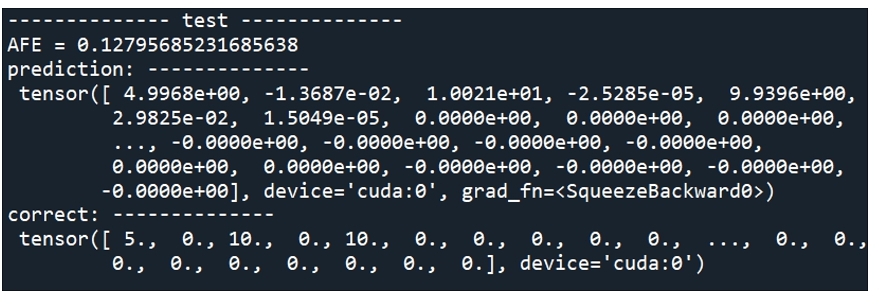

The accuracy of the prediction given by (1) is measured based on the normalized Absolute Flight-route Error (AFE), which is AFE divided by the total flight distance and

(3)

4.3 Fine Tuning

Because of the large number of parameters to be learned, it may be difficult to obtain a universal conversion program that can predict flight routes with high accuracy at any sampling time. To overcome this difficulty, we first pre-train Perceiver with a large amount of training data, and then fine-tune MLPs such that each MLP covers a certain range of the input size. In other words, as many features of a time series as possible are extracted by pre-training, and then the necessary features are selected for each input size range through fine-tuning. Fig. 6 illustrates that the conversion program selects an appropriate MLP for accuracy improvement.
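The selection step can be sketched as a lookup from the input length to the MLP responsible for that length range. The range boundaries and the head names below are hypothetical placeholders; the ranges actually used in the experiments are not given in this section.

```python
import bisect

# Hypothetical upper bounds (in samples) of the input-size ranges.
RANGE_UPPER_BOUNDS = [100, 200, 400]
# One fine-tuned MLP per range, plus one for anything longer; strings stand in for models.
MLP_HEADS = ["mlp_0", "mlp_1", "mlp_2", "mlp_3"]

def select_mlp(series_length):
    """Pick the MLP whose input-size range contains series_length."""
    return MLP_HEADS[bisect.bisect_left(RANGE_UPPER_BOUNDS, series_length)]

for length in (60, 100, 101, 350, 900):
    print(length, select_mlp(length))
```

The shared, pre-trained Perceiver would produce the features, and only the selected MLP would turn them into the final prediction.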

4.4 Cross-Validation

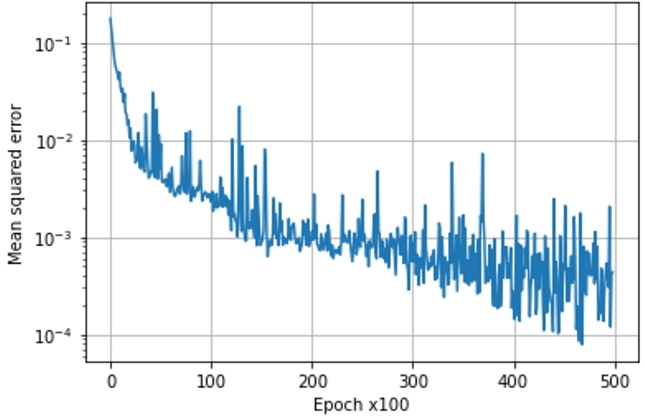

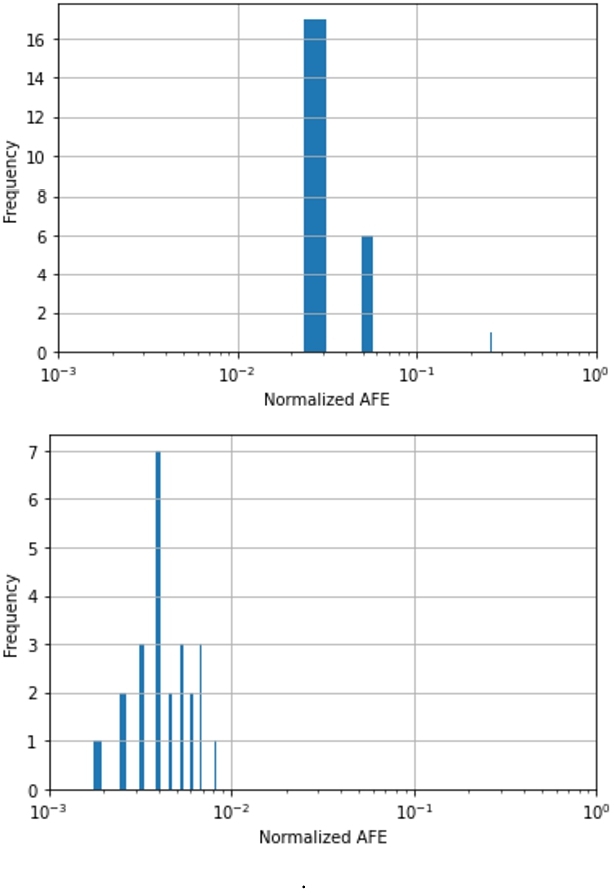

Fig. 7 shows the decrease in the Mean Squared Error (MSE) while pre-training Perceiver using dataset A. To see the effect of the amount of pre-training, pre-trained weight values were stored when the number of epochs was 20,000 and 50,000. Fig. 8 demonstrates this effect after performing 24-fold cross-validation with dataset B. Figs. 8 (upper) and (lower) show the cases of 20,000 and 50,000 epochs, respectively. In Fig. 8 (upper), there is one normalized AFE value of about 0.27, which implies that the prediction error per flight distance is 27%. In Fig. 8 (lower), all of the normalized AFE values are less than $10^{-2}$.
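Because dataset B contains 24 pairs, a 24-fold split holds out exactly one pair per fold, assuming the folds are formed over individual pairs rather than over flight routes (the text does not say which). A minimal sketch of such a split, with a hypothetical helper k_fold_indices:

```python
def k_fold_indices(n_items, k):
    """Yield (train_indices, test_indices) for k-fold cross-validation."""
    folds = [list(range(i, n_items, k)) for i in range(k)]
    for held_out in folds:
        train = [i for i in range(n_items) if i not in held_out]
        yield train, held_out

splits = list(k_fold_indices(24, 24))
print(len(splits))                          # 24
print(len(splits[0][0]), len(splits[0][1]))  # 23 1
```

In each fold, the MLPs would be fine-tuned on the 23 training pairs and the normalized AFE would be measured on the single held-out pair.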

5 CONCLUSIONS

In this paper, we proposed a new GPS alternative approach based on manual operation representation (MOR). With the exception of inertial navigation systems (INS), this approach is superior to conventional approaches in that it can completely ignore external signals and does not suffer from spoofing and interference attacks. In addition, because sequential processing is not performed, there is no accumulation of errors; thus, the approach can handle longer time series than INS. Furthermore, it does not require additional hardware, making it versatile and inexpensive. This is because the approach utilizes manual operation signals, and remotely and manually controllable moving systems always possess sensors that receive such signals.

This study applied this approach to an industrial drone flying according to an autopilot program. In this case, the MOR signals were roll, pitch, yaw, and altitude, and Perceiver, a deep-learning architecture, was used to infer the drone's flight route from the time series of these signals. Perceiver was selected because it can handle long time series with long-range dependencies without error accumulation. A large decrease in the normalized AFE after fine-tuning confirmed the feasibility of this approach. In the future, the model stability will be improved by adjusting hyperparameters, reducing the effect of wind, and considering countermeasures against data loss and signal noise.

REFERENCES

- [1] [n. d.]. The AI community building the future. https://huggingface.co/. Online; accessed 7 August 2023.

- [2] [n. d.]. ARDUPILOT Versatile, Trusted, Open. https://ardupilot.org/. Online; accessed 7 August 2023.

- [3] Mary B Alatise and Gerhard P Hancke. 2020. A review on challenges of autonomous mobile robot and sensor fusion methods. IEEE Access 8 (2020), 39830–39846.

- [4] Daoud Burghal, Ashwin T Ravi, Varun Rao, Abdullah A Alghafis, and Andreas F Molisch. 2020. A comprehensive survey of machine learning based localization with wireless signals. arXiv preprint arXiv:2012.11171 (2020).

- [5] Marco Ceccato, Francesco Formaggio, Nicola Laurenti, and Stefano Tomasin. 2021. Generalized likelihood ratio test for GNSS spoofing detection in devices with IMU. IEEE Transactions on Information Forensics and Security 16 (2021), 3496–3509.

- [6] Vinod Kumar Chauhan, Anshul Thakur, Odhran O'Donoghue, and David Andrew Clifton. 2022. COPER: Continuous patient state perceiver. In 2022 IEEE-EMBS International Conference on Biomedical and Health Informatics (BHI). IEEE, 1–4.

- [7] Kongyang Chen, Guang Tan, Jiannong Cao, Mingming Lu, and Xiaopeng Fan. 2019. Modeling and improving the energy performance of GPS receivers for location services. IEEE Sensors Journal 20, 8 (2019), 4512–4523.

- [8] Hai-fa Dai, Hong-wei Bian, Rong-ying Wang, and Heng Ma. 2020. An INS/GNSS integrated navigation in GNSS denied environment using recurrent neural network. Defence Technology 16, 2 (2020), 334–340.

- [9] Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural Computation 9, 8 (1997), 1735–1780.

- [10] Andrew Jaegle, Sebastian Borgeaud, Jean-Baptiste Alayrac, Carl Doersch, Catalin Ionescu, David Ding, Skanda Koppula, Daniel Zoran, Andrew Brock, Evan Shelhamer, et al. 2021. Perceiver IO: A general architecture for structured inputs & outputs. arXiv preprint arXiv:2107.14795 (2021).

- [11] Andrew Jaegle, Felix Gimeno, Andy Brock, Oriol Vinyals, Andrew Zisserman, and Joao Carreira. 2021. Perceiver: General perception with iterative attention. In International Conference on Machine Learning. PMLR, 4651–4664.

- [12] Giil Kwon and Hyunjung Lee. 2023. Time series KSTAR PF superconducting coil temperature forecasting using recurrent transformer model. Fusion Engineering and Design 193 (2023), 113693.

- [13] Bryan Lim and Stefan Zohren. 2021. Time-series forecasting with deep learning: a survey. Philosophical Transactions of the Royal Society A 379, 2194 (2021), 20200209.

- [14] Jie Liu, Bodhi Priyantha, Ted Hart, Yuzhe Jin, Woosuk Lee, Vijay Raghunathan, Heitor S Ramos, and Qiang Wang. 2015. CO-GPS: Energy efficient GPS sensing with cloud offloading. IEEE Transactions on Mobile Computing 15, 6 (2015), 1348–1361.

- [15] Zihao Lu, Fei Liu, and Xianke Lin. 2022. Vision-based localization methods under GPS-denied conditions. arXiv preprint arXiv:2211.11988 (2022).

- [16] Andrew Mackey and Petros Spachos. 2019. LoRa-based localization system for emergency services in GPS-less environments. In IEEE INFOCOM 2019-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS). IEEE, 939–944.

- [17] Evangelos Mantas and Constantinos Patsakis. 2019. GRYPHON: Drone forensics in dataflash and telemetry logs. In Advances in Information and Computer Security: 14th International Workshop on Security, IWSEC 2019, Tokyo, Japan, August 28–30, 2019, Proceedings 14. Springer, 377–390.

- [18] Lorenz Meier, Petri Tanskanen, Friedrich Fraundorfer, and Marc Pollefeys. 2011. Pixhawk: A system for autonomous flight using onboard computer vision. In 2011 IEEE International Conference on Robotics and Automation. IEEE, 2992–2997.

- [19] Lianxiao Meng, Lin Yang, Wu Yang, and Long Zhang. 2022. A survey of GNSS spoofing and anti-spoofing technology. Remote Sensing 14, 19 (2022), 4826.

- [20] Azin Moradbeikie, Ahmad Keshavarz, Habib Rostami, Sara Paiva, and Sérgio Ivan Lopes. 2021. GNSS-free outdoor localization techniques for resource-constrained IoT architectures: A literature review. Applied Sciences 11, 22 (2021), 10793.

- [21] Mohammad Nayfeh, Yuchen Li, Khair Al Shamaileh, Vijay Devabhaktuni, and Naima Kaabouch. 2023. Machine Learning Modeling of GPS Features with Applications to UAV Location Spoofing Detection and Classification. Computers & Security 126 (2023), 103085.

- [22] Chenguang Ouyang, Suxing Hu, Fengqi Long, Shuai Shi, Zhichao Yu, Kaichun Zhao, Zheng You, Junyin Pi, and Bowen Xing. 2023. A semantic vector map-based approach for aircraft positioning in GNSS/GPS denied large-scale environment. Defence Technology (2023).

- [23] Hossein Pirayesh and Huacheng Zeng. 2022. Jamming attacks and anti-jamming strategies in wireless networks: A comprehensive survey. IEEE Communications Surveys & Tutorials 24, 2 (2022), 767–809.

- [24] Yinrong Qiao, Yuxing Zhang, and Xiao Du. 2017. A vision-based GPS-spoofing detection method for small UAVs. In 2017 13th International Conference on Computational Intelligence and Security (CIS). IEEE, 312–316.

- [25] Mary Jane C Samonte, Darwin A Medel, Joshua Millard N Odicta, and Ma Zhenadoah Leen T Santos. 2023. Using IoT-Enabled RFID Smart Cards in an Indoor People-Movement Tracking System with Risk Assessment. Journal of Advances in Information Technology 14, 2 (2023).

- [26] Attilio Sbrana and Paulo André Lima de Castro. 2023. N-BEATS Perceiver: A Novel Approach for Robust Cryptocurrency Portfolio Forecasting. (2023).

- [27] Xiang Sheng, Jian Tang, Xuejie Xiao, and Guoliang Xue. 2014. Leveraging GPS-less sensing scheduling for green mobile crowd sensing. IEEE Internet of Things Journal 1, 4 (2014), 328–336.

- [28] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. Advances in Neural Information Processing Systems 30 (2017).

- [29] Qingsong Wen, Tian Zhou, Chaoli Zhang, Weiqi Chen, Ziqing Ma, Junchi Yan, and Liang Sun. 2022. Transformers in time series: A survey. arXiv preprint arXiv:2202.07125 (2022).

- [30] De Jong Yeong, Gustavo Velasco-Hernandez, John Barry, and Joseph Walsh. 2021. Sensor and sensor fusion technology in autonomous vehicles: A review. Sensors 21, 6 (2021), 2140.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than the author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

ICIT 2023, December 14–17, 2023, Kyoto, Japan

© 2023 Copyright held by the owner/author(s). Publication rights licensed to ACM.

ACM ISBN 979-8-4007-0904-3/23/12.
