SEGAR: A Visual Guides System for Surface Extension in Augmented Reality

DOI: https://doi.org/10.1145/3694907.3765944

SUI '25: ACM Symposium on Spatial User Interaction, Montreal, QC, Canada, November 2025

Due to the increasing availability of Augmented Reality (AR) devices, it is now possible to 3D sketch over physical objects. However, users still encounter challenges that affect their stroke accuracy and drawing experience when sketching in AR. This paper presents the design and implementation of the Surface Extension Guides for Augmented Reality (SEGAR) system, a pen-based user interface that allows users to extend the physical surfaces to mid-air while sketching. SEGAR provides users with visual and haptic feedback to help them seamlessly transition between sketching on physical surfaces and mid-air. We ran a pilot study, where four participants sketched a cube in mid-air using SEGAR. Our results show that participants found the system useful.

ACM Reference Format:

Junyuan Wu and Mayra Donaji Barrera Machuca. 2025. SEGAR: A Visual Guides System for Surface Extension in Augmented Reality. In ACM Symposium on Spatial User Interaction (SUI '25), November 10--11, 2025, Montreal, QC, Canada. ACM, New York, NY, USA, 3 pages. https://doi.org/10.1145/3694907.3765944

1 Introduction

Thanks to the increasing availability of devices with Augmented Reality (AR) capabilities, people can use 3D sketching to add virtual content to physical objects [2, 3, 10, 14, 19]. 3D sketching in AR allows users to utilize the haptic and visual feedback provided by the physical world to increase their sketching accuracy [9, 13, 17]. However, mid-air sketching in AR is challenging, as it relies on users’ spatial thinking to estimate object distance and orientation for accurate stroke placement [4, 16], while also requiring 6DOF device control without a physical surface—factors shown to reduce accuracy [1, 8].

We present SEGAR (Surface Extension Guides for Augmented Reality), a pen-based 3D sketching system for AR to address these issues. SEGAR facilitates transitioning from sketching on a physical surface to sketching in mid-air by providing two types of feedback to the user when sketching: a) visual feedback: dynamically appearing visual grid fragments around the pen tip that work as a global coordinate system, and b) haptic feedback: a virtual surface that appears when the user crosses the edge of a physical surface and provides vibrotactile feedback when the user touches it.

SEGAR extends past work that uses mobile devices to sketch in AR [3, 10, 19] by using a pen to sketch, which provides better ergonomics and increases user accuracy compared to finger painting [6, 8]. We also extend past work like SymbiosisSketch [2], which uses an AR head-mounted display and a pen to sketch, by using visual and haptic feedback to enhance sketching continuity and accuracy when crossing the boundary of the physical surface.

2 SEGAR

2.1 Design

When designing SEGAR, we aimed to increase sketching accuracy by extending the support provided by the physical surface to mid-air [1, 17]. Past work has shown that visual guides help users visualize the relationship between objects and plan their strokes, which increases their accuracy [4, 16]. Other work has shown that providing haptic feedback can enhance sketching accuracy without constraining the user's strokes [1, 15]. We also chose a pen as the input device, as it allows users to use a precision grip while sketching, which improves stroke control compared to controllers and gestures [6, 8, 12]. Next, we describe the different features of SEGAR:

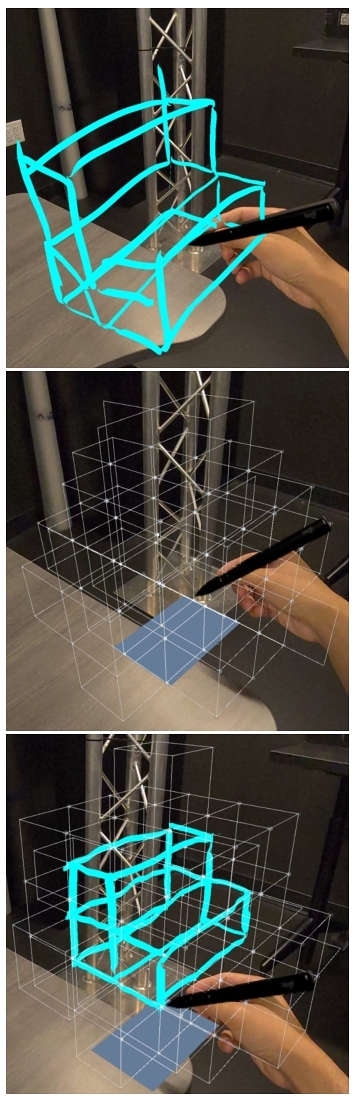

Proximity-based 3D Grid. While the user is sketching, we show a visual 3D grid that provides visual feedback to the user. The grid has 5 cm cubes that extend from the physical surface to mid-air. We only display grid lines with an endpoint within 10 cm of the pen's tip, which past work has shown provides a reference frame that improves user accuracy without cluttering the environment [4, 16].
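The proximity rule can be sketched in a few lines. The following Python snippet is a minimal illustration, not the authors' Unity implementation: it assumes an axis-aligned lattice anchored at the origin of the surface frame, and the names `grid_segments`, `visible_segments`, and the lattice size `n` are hypothetical. Only the 5 cm cell size and the 10 cm radius come from the text.

```python
import math
from itertools import product

CELL = 0.05    # grid cell edge in metres (5 cm, from the text)
RADIUS = 0.10  # show lines with an endpoint within 10 cm of the pen tip


def grid_segments(n):
    """Yield every axis-aligned unit segment of an n x n x n-cell lattice.

    Endpoints are integer lattice indices; scale by CELL to get metres.
    """
    for i, j, k in product(range(n + 1), repeat=3):
        for di, dj, dk in ((1, 0, 0), (0, 1, 0), (0, 0, 1)):
            end = (i + di, j + dj, k + dk)
            if max(end) <= n:
                yield (i, j, k), end


def to_metres(index):
    """Convert a lattice index to a position in metres."""
    return tuple(c * CELL for c in index)


def visible_segments(pen_tip, n=8):
    """Return the segments that have at least one endpoint near the pen tip."""
    shown = []
    for a, b in grid_segments(n):
        pa, pb = to_metres(a), to_metres(b)
        if math.dist(pa, pen_tip) <= RADIUS or math.dist(pb, pen_tip) <= RADIUS:
            shown.append((pa, pb))
    return shown


if __name__ == "__main__":
    near = visible_segments((0.2, 0.2, 0.2))
    total = sum(1 for _ in grid_segments(8))
    print(f"{len(near)} of {total} segments shown")
```

Re-running `visible_segments` whenever the pen moves yields the fragment of the grid that travels with the pen tip, while the rest of the lattice stays hidden.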

Boundary-Triggered Extension Plane (BTEP). When the pen crosses the edge of the physical surface, a virtual plane appears at the same position and orientation as the surface. This plane follows the pen position, allowing the user to maintain their stroke at the same height and tilt as the physical surface. To keep the view clear, the plane is intentionally kept small, with a maximum size of about 15 cm × 10 cm. BTEP reduces the jumps in the stroke when switching between real and virtual surfaces. Past work has shown that stable visual structures and clear real–virtual boundaries help reduce spatial drift and improve accuracy [5, 7, 18].
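One way to picture the boundary trigger is as a test in the surface's own 2D coordinates. The Python sketch below is an assumption-laden illustration rather than the system's actual code: it assumes a rectangular surface described by one corner and two orthonormal in-plane axes, and the `Surface` class and `extension_patch` function are hypothetical names. Only the 15 cm × 10 cm patch size comes from the text.

```python
from dataclasses import dataclass

PATCH_W, PATCH_H = 0.15, 0.10  # maximum patch size in metres (from the text)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


@dataclass
class Surface:
    """Rectangular physical surface (hypothetical representation)."""
    origin: tuple  # one corner of the surface, in metres
    u_axis: tuple  # unit vector along the surface width
    v_axis: tuple  # unit vector along the surface depth
    width: float
    depth: float

    def to_uv(self, point):
        """In-plane coordinates of the point's orthogonal projection."""
        d = sub(point, self.origin)
        return dot(d, self.u_axis), dot(d, self.v_axis)

    def contains(self, u, v):
        return 0.0 <= u <= self.width and 0.0 <= v <= self.depth


def extension_patch(surface, pen_tip):
    """Return None while the pen is over the surface.

    Once the pen's projection leaves the rectangle, return the in-plane
    bounds (u_min, v_min, u_max, v_max) of a patch centred under the pen.
    Because the patch lives in the surface's own plane, it keeps the same
    height and tilt as the physical surface.
    """
    u, v = surface.to_uv(pen_tip)
    if surface.contains(u, v):
        return None
    return (u - PATCH_W / 2, v - PATCH_H / 2, u + PATCH_W / 2, v + PATCH_H / 2)


if __name__ == "__main__":
    desk = Surface((0, 0, 0), (1, 0, 0), (0, 0, 1), width=0.30, depth=0.20)
    print(extension_patch(desk, (0.10, 0.02, 0.10)))  # over the desk
    print(extension_patch(desk, (0.40, 0.00, 0.10)))  # past the right edge
```

Recomputing the patch every frame makes it follow the pen, which matches the described behaviour of a small plane that moves with the stroke.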

Haptic Feedback. Our system provides haptic feedback during drawing to help people feel the BTEP. The stronger the pressure applied to the pen's middle button, the stronger the vibration. Past work has shown that using haptic feedback while sketching makes the process feel more natural, improves the drawing flow, and enhances immersion and accuracy [15].
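The pressure-to-vibration relationship can be illustrated with a simple monotonic mapping. This Python sketch is hypothetical: the linear mapping, the normalised 0 to 1 pressure range, the 5 mm contact tolerance `touch_eps`, and the function names are all assumptions, and no real stylus API is used.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def signed_distance(point, plane_point, unit_normal):
    """Signed distance from point to the plane through plane_point."""
    offset = tuple(p - q for p, q in zip(point, plane_point))
    return dot(offset, unit_normal)


def vibration_amplitude(pressure, pen_tip, plane_point, unit_normal,
                        touch_eps=0.005):
    """Map button pressure to a vibration amplitude in [0, 1].

    The pen only vibrates while its tip is within touch_eps metres of the
    virtual plane; harder presses give stronger vibration (assumed linear).
    """
    if abs(signed_distance(pen_tip, plane_point, unit_normal)) > touch_eps:
        return 0.0
    return min(1.0, max(0.0, pressure))


if __name__ == "__main__":
    plane_point, normal = (0.0, 0.7, 0.0), (0.0, 1.0, 0.0)
    for pressure in (0.2, 0.6, 1.0):
        print(pressure, vibration_amplitude(pressure, (0.4, 0.701, 0.1),
                                            plane_point, normal))
```

A non-linear curve would satisfy the same description, as long as the amplitude never decreases when the pressure increases.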

2.2 Implementation

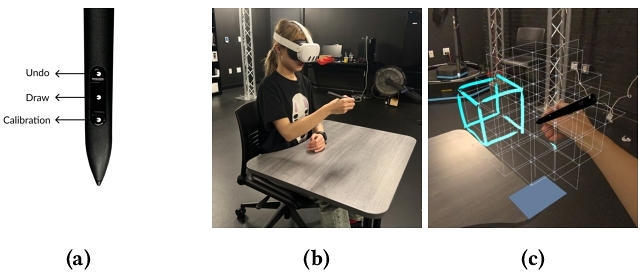

We implemented SEGAR using C# and Unity (version 2022.3.50f1). For the AR device, we used the video-see-through mode of the Quest 3. We used the Logitech MX Ink [11] as an input device with vibrotactile features that provide haptic feedback to the user. See Figure 2a for a diagram of the pen's button mapping.

Before drawing, the user must calibrate the system to match the virtual environment with the physical one. First, users set three calibration points on the physical surface to provide sufficient data for the three-point plane fitting algorithm. This algorithm determines the physical surface's normal vector and position in space, which defines the drawing plane's height and tilt. Next, users specify the physical size and orientation of the drawing area by tapping the four corners of the surface. Once the system is calibrated, users can start sketching.
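A plane through three non-collinear points is fully determined by the cross product of two edge vectors. The Python sketch below illustrates that standard construction; it is not taken from the SEGAR source code, and the function names and the `eps` degeneracy threshold are assumptions.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def fit_plane(p1, p2, p3, eps=1e-9):
    """Return (unit_normal, offset) so that dot(unit_normal, x) == offset
    for every point x on the plane through p1, p2, and p3.

    The normal's direction depends on the order in which the points are given.
    """
    normal = cross(sub(p2, p1), sub(p3, p1))
    length = math.sqrt(sum(c * c for c in normal))
    if length < eps:
        raise ValueError("calibration points are collinear or coincident")
    unit = tuple(c / length for c in normal)
    offset = sum(n * p for n, p in zip(unit, p1))
    return unit, offset


def signed_distance(point, unit_normal, offset):
    """Height of a point above (positive) or below (negative) the plane."""
    return sum(n * p for n, p in zip(unit_normal, point)) - offset


if __name__ == "__main__":
    unit, offset = fit_plane((0, 0, 0), (1, 0, 0), (0, 1, 0))
    print(unit, offset)
    print(signed_distance((0.3, 0.2, 0.05), unit, offset))
```

The unit normal encodes the surface's tilt and the offset its height, the two quantities the calibration step needs. Rejecting nearly collinear points matters in practice, since three taps along one edge would leave the tilt undefined.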

3 Pilot Study

We conducted a pilot study with four participants (two male, two female). See Figure 2 for the pilot study setting and sketch example. Three had HCI-related backgrounds and AR experience, and one had neither. Of those with AR experience, two had sketched in AR before, and one had used AR for gaming. The study included three phases: (1) tutorial, (2) AR sketching without SEGAR as a baseline, and (3) calibration and drawing a cube with SEGAR. Then, participants completed the SUS questionnaire (5-point Likert scale). SEGAR's SUS score was 83, indicating high usability (see Table 1). Qualitative feedback highlighted that the localized grid improved spatial accuracy and drawing flow, while the AR-inexperienced participant noted a need for more practice and occasional guidance.

| Item | Mean |
|---|---|
| I would like to use this app frequently. | 4.00 |
| I found this app unnecessarily complex. | 3.50 |
| I thought this app was easy to use. | 3.50 |
| I would need assistance to use this app. | 2.25 |
| I found the various functions were well integrated. | 3.50 |
| There was too much inconsistency in this app. | 3.75 |
| Most people would learn to use this app very quickly. | 3.25 |
| This app is very cumbersome/awkward to use. | 3.75 |
| I felt very confident using this app. | 3.50 |
| I needed to learn a lot of things before I could get going with this app. | 2.25 |
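The reported SUS score of 83 can be reproduced from Table 1 under one reading of the table, which is an assumption because the text does not describe the scoring: the listed means are per-item contributions already rescaled to 0 to 4, with negatively worded items reversed. The Python sketch below sums them and multiplies by 2.5; for comparison, it also includes the standard SUS conversion from raw 1 to 5 responses. The function names are hypothetical.

```python
# Means from Table 1, in questionnaire order.
TABLE_1_MEANS = [4.00, 3.50, 3.50, 2.25, 3.50, 3.75, 3.25, 3.75, 3.50, 2.25]


def sus_from_contributions(contributions):
    """SUS score from ten per-item contributions on a 0-4 scale."""
    if len(contributions) != 10:
        raise ValueError("SUS has exactly ten items")
    return 2.5 * sum(contributions)


def sus_from_raw(responses):
    """Standard SUS scoring from ten raw 1-5 responses.

    Odd-numbered (positively worded) items contribute response - 1;
    even-numbered (negatively worded) items contribute 5 - response.
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    total = 0.0
    for index, response in enumerate(responses):
        total += (response - 1) if index % 2 == 0 else (5 - response)
    return 2.5 * total


if __name__ == "__main__":
    print(sus_from_contributions(TABLE_1_MEANS))  # 83.125
```

Treating the same values as raw 1 to 5 responses gives 55.625 from `sus_from_raw`, which does not match the reported score, so the contribution reading appears to be the consistent one.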

4 Conclusion

We designed and implemented SEGAR, a pen-based 3D sketching system for Augmented Reality (AR), which provides visual guides and haptic feedback to extend the physical surface while sketching. These additional feedback types enable users to seamlessly transition between sketching on a physical surface and mid-air without losing accuracy. A pilot study with four participants showed that SEGAR is highly usable and provides stable and efficient support for mid-air 3D sketching in AR, laying the foundation for developing higher-precision, cross-boundary creative tools in AR environments. In the future, we will add other features to SEGAR, like a colour picker and support for different shapes of physical surfaces. We also plan to run a user study to compare SEGAR's performance against other 3D sketching systems for AR.

References

1. Rahul Arora, Rubaiat Habib Kazi, Fraser Anderson, Tovi Grossman, Karan Singh, and George Fitzmaurice. 2017. Experimental Evaluation of Sketching on Surfaces in VR. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (Denver, Colorado, USA) (CHI ’17). Association for Computing Machinery, New York, NY, USA, 5643–5654. https://doi.org/10.1145/3025453.3025474

2. Rahul Arora, Rubaiat Habib Kazi, Tovi Grossman, George Fitzmaurice, and Karan Singh. 2018. SymbiosisSketch: Combining 2D & 3D Sketching for Designing Detailed 3D Objects In Situ. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18). ACM, 1–15. https://doi.org/10.1145/3173574.3173759

3. Simone Arrieu, Gianmarco Cherchi, and Lucio Davide Spano. 2021. ProtoSketchAR: Prototyping in Augmented Reality via Sketchings. In Smart Tools and Applications in Graphics (STAG 2021). The Eurographics Association. https://doi.org/10.2312/STAG.20211488

4. Mayra D. Barrera Machuca, Wolfgang Stuerzlinger, and Paul Asente. 2019. Smart3DGuides: Making Unconstrained Immersive 3D Drawing More Accurate. In Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology (VRST ’19). Association for Computing Machinery, New York, NY, USA, Article 2, 11 pages. https://doi.org/10.1145/3359996.3364254

5. Ekaterina Borodina, Alexander Toet, Jeroen van Erp, Tobias Heffner, Heinrich H. Bülthoff, and Betty J. Mohler. 2023. Boundaries and landmarks improve spatial updating in immersive virtual environments. Frontiers in Psychology 14 (2023), 1144861. https://doi.org/10.3389/fpsyg.2023.1144861

6. Chen Chen, Matin Yarmand, Zhuoqun Xu, Varun Singh, Yang Zhang, and Nadir Weibel. 2022. Investigating Input Modality and Task Geometry on Precision-first 3D Drawing in Virtual Reality. In 2022 IEEE International Symposium on Mixed and Augmented Reality (ISMAR). IEEE, 1–10. https://doi.org/10.1109/ISMAR55827.2022.00054

7. Chris G. Christou, Bosco S. Tjan, and Heinrich H. Bülthoff. 2003. Extrinsic Cues Aid Shape Recognition from Novel Viewpoints. Journal of Vision 3, 3 (2003), 183–198. https://doi.org/10.1167/3.3.1

8. Hesham Elsayed, Mayra Donaji Barrera Machuca, Christian Schaarschmidt, Karola Marky, Florian Müller, Jan Riemann, Andrii Matviienko, Martin Schmitz, Martin Weigel, and Max Mühlhäuser. 2020. VRSketchPen: Unconstrained Haptic Assistance for Sketching in Virtual 3D Environments. In VRST ’20: Proceedings of the 26th ACM Symposium on Virtual Reality Software and Technology. Association for Computing Machinery, New York, NY, USA, Article 3, 11 pages. https://doi.org/10.1145/3385956.3418953

9. Wolfgang Hürst, Ronald Poppe, and Jerry van Angeren. 2015. Drawing Outside the Lines: Tracking-based Gesture Interaction in Mobile Augmented Entertainment. IEEE. https://doi.org/10.4108/icst.intetain.2015.259589

10. Kin Chung Kwan and Hongbo Fu. 2019. Mobi3DSketch: 3D Sketching in Mobile AR. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland UK) (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–11. https://doi.org/10.1145/3290605.3300406

11. Logitech. [n. d.]. Logitech MX Ink MR Stylus & MX Inkwell. https://www.logitech.com/en-ca/products/vr/mx-ink-mr-stylus-mx-inkwell.914-000087.html. Accessed: 2025-08-13.

12. Duc-Minh Pham and Wolfgang Stuerzlinger. 2019. Is the Pen Mightier than the Controller? A Comparison of Input Devices for Selection in Virtual and Augmented Reality. In Proceedings of the 25th ACM Symposium on Virtual Reality Software and Technology (VRST ’19). ACM, Article 35, 11 pages. https://doi.org/10.1145/3359996.3364264

13. Patrick Reipschläger and Raimund Dachselt. 2019. DesignAR: Immersive 3D-Modeling Combining Augmented Reality with Interactive Displays. In Proceedings of the 2019 ACM International Conference on Interactive Surfaces and Spaces (Daejeon, Republic of Korea) (ISS ’19). Association for Computing Machinery, New York, NY, USA, 29–41. https://doi.org/10.1145/3343055.3359718

14. Andrew Rukangu, Ethan Bowmar, Sun Joo Ahn, Beshoy Morkos, and Kyle Johnsen. 2025. Drawn Together: A Collocated Mixed Reality Sketching and Annotation Experience. In 2025 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW). 1626–1627. https://doi.org/10.1109/VRW66409.2025.00458

15. Lucas Siqueira Rodrigues, Timo Torsten Schmidt, John Nyakatura, Stefan Zachow, Johann Habakuk Israel, and Thomas Kosch. 2024. Assessing the Effects of Sensory Modality Conditions on Object Retention across Virtual Reality and Projected Surface Display Environments. Proc. ACM Hum.-Comput. Interact. 8, ISS, Article 537 (2024), 28 pages. https://doi.org/10.1145/3698137

16. Rumeysa Turkmen, Zeynep Ecem Gelmez, Anil Ufuk Batmaz, Wolfgang Stuerzlinger, Paul Asente, Mine Sarac, Ken Pfeuffer, and Mayra Donaji Barrera Machuca. 2024. EyeGuide & EyeConGuide: Gaze-based Visual Guides to Improve 3D Sketching Systems. In CHI ’24: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, New York, NY, USA, Article 178, 14 pages. https://doi.org/10.1145/3613904.3641947

17. Philipp Wacker, Adrian Wagner, Simon Voelker, and Jan Borchers. 2018. Physical Guides: An Analysis of 3D Sketching Performance on Physical Objects in Augmented Reality. In Proceedings of the 2018 ACM Symposium on Spatial User Interaction (SUI ’18). ACM, New York, NY, USA, 25–35. https://doi.org/10.1145/3267782.3267788

18. Xiaoang Irene Wan, Ranxiao Frances Wang, and James A. Crowell. 2009. Spatial Updating in Superimposed Real and Virtual Environments. Attention, Perception, & Psychophysics 71, 1 (2009), 42–51. https://doi.org/10.3758/APP.71.1.42

19. Yang Zhou, Peixin Yang, Xinchi Xu, Bingchan Shao, Guihuan Feng, Jie Liu, and Bin Luo. 2024. FMASketch: Freehand Mid-Air Sketching in AR. International Journal of Human–Computer Interaction 40, 8 (2024), 2142–2152. https://doi.org/10.1080/10447318.2023.2223948

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s).


© 2025 Copyright held by the owner/author(s).

ACM ISBN 979-8-4007-1259-3/25/11.
