Robotecture: A Modular Shape-changing Interface Using Actuated Support Beams

DOI: https://doi.org/10.1145/3689050.3704925

TEI '25: Nineteenth International Conference on Tangible, Embedded, and Embodied Interaction, Bordeaux / Talence, France, March 2025

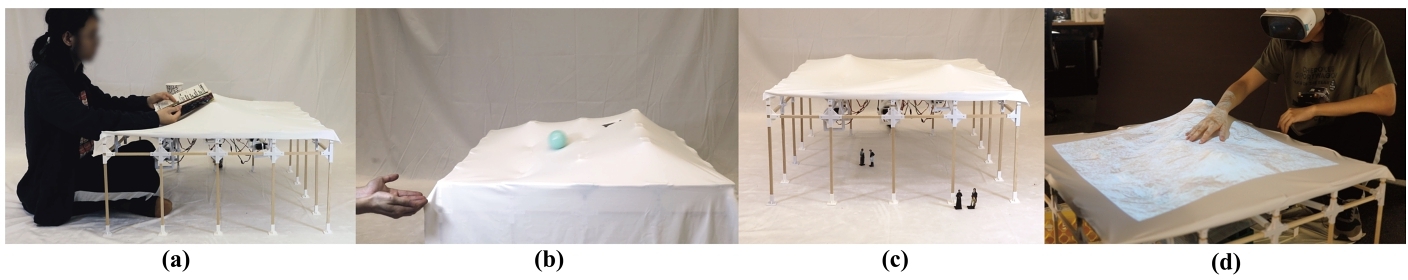

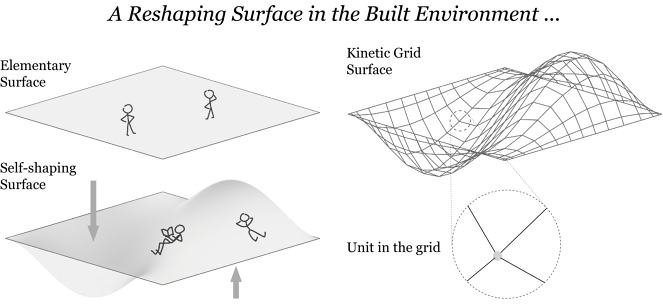

Shape-changing User Interfaces (SCUIs) can dynamically adjust their shape or layout in response to user interactions or environments. It is challenging to design expandable, affordable, and effective SCUIs with optimal space utilization for novel interactions. To tackle this challenge, we introduce Robotecture, a cost-efficient and expandable shape-changing system that uses a self-lifting structure composed of modular robotic units that actuate support beams. Robotecture generates dynamic surface displays and enclosures by modulating a grid of robotic units with linear movements, each with two actuators and four beams connecting to adjacent units. The modular design allows the structure to scale to different grid sizes and to be arranged in flexible layouts. The self-lifting nature of Robotecture makes it possible to utilize the space on both sides of the surface. Its sparse grid structure makes the system more efficient at simulating large-scale structures such as smart architecture, and the spaces between the beams enable objects to pass through the actuated surface for novel interactions. In this paper, we demonstrate several prototypes with different layouts and validate the proof of concept. Additionally, we showcase various scenarios where Robotecture can enhance tangible interactions and interactive experiences for versatile and eco-friendly applications across different scales, such as tabletop tangible displays, room-scale furniture simulation, and smart architecture.

ACM Reference Format:

Yuhan Wang, Keru Wang, Zhu Wang, and Ken Perlin. 2025. Robotecture: A Modular Shape-changing Interface Using Actuated Support Beams. In Nineteenth International Conference on Tangible, Embedded, and Embodied Interaction (TEI '25), March 04–07, 2025, Bordeaux / Talence, France. ACM, New York, NY, USA, 11 pages. https://doi.org/10.1145/3689050.3704925

1 Introduction

Researchers in Human-Computer Interaction (HCI) have long explored the development of Shape-changing User Interfaces (SCUIs) that can dynamically adjust their form or layout in response to user interactions or environments [31]. SCUIs play a crucial role in delivering adaptive and interactive user experiences, offering unique and engaging interactions that bridge the gap between the physical and digital worlds [41]. Previous research has used SCUIs to simulate shapes, provide haptic feedback for virtual experiences, and facilitate object-handling interactions [31, 36]. Many hardware designs for SCUIs have been explored, such as linear mechanical actuation [7, 38, 40], inflatable (hydraulic and pneumatic) actuation [27, 45], dynamic polygonal surface design [2, 9], and deformable materials [6, 45]. However, most prior work still faces barriers to achieving expandability, making effective use of the space beneath the dynamic surface, simulating enclosures, and reducing cost.

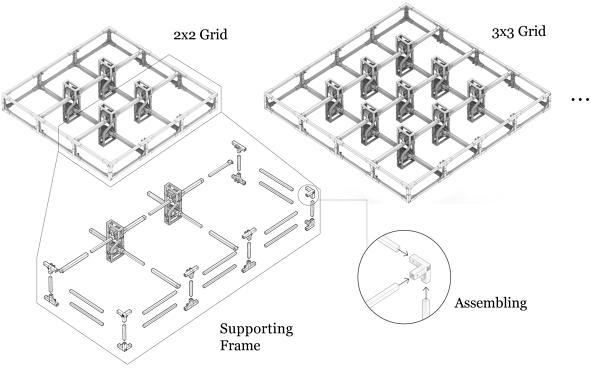

We introduce Robotecture to address these challenges by designing and implementing a novel, affordable mechanical architecture. Our architecture adopts a modular design in which identical actuated units are linked together via support beams. This modular approach provides a high degree of expandability: Robotecture units can be assembled into different layouts, and additional units can be integrated into an existing layout to create a larger surface. The modular design could also simplify production, since the units are identical and can therefore be mass-produced, and enhance maintainability, since each unit is interchangeable. Robotecture provides rigid support despite the wide separation between its support beams. Its design allows users to simulate enclosures and to interact with objects passing through the actuated surface.

Robotecture's structure is essentially a self-lifting surface composed of a sparse grid of robotic units, where each unit can be independently raised or lowered by using two motors to rotate extendable support beams. For each unit, rotating the motors changes the angles between its support beams and those connected to adjacent units, thereby raising or lowering that unit. The modular architecture ensures that individual units can easily be linked together into an arbitrary sparse grid layout. The self-lifting mechanical structure of Robotecture is packed into one flat layer, with all raising and lowering movements powered by the actuators and support beams within that layer. This structural design is fundamentally different from most pin-based SCUIs, which typically have a base structure underneath to house the actuators and other mechanical components. One significant benefit is that it allows the space beneath the layer to be used, space that would otherwise be occupied by the base of a pin-based SCUI. The space available on both sides opens up possibilities for creative space utilization and interactions. As a result, multiple Robotecture layouts can be arranged in non-planar configurations to form geometries beyond 2.5D and to create different types of enclosures. In addition, the ability of objects to pass through the grid surface opens up possibilities for novel interactions. To the best of our knowledge, Robotecture is the first grid-based SCUI to enable efficient and novel space utilization on both sides of the actuating surface while allowing objects to pass through the surface.

Note that our primary contribution lies in the architecture. We implement several prototypes, which we showcase to validate the feasibility of the architecture and to demonstrate possible interactions. These applications include dynamic physical affordances (e.g., intelligent buildings, smart furniture), object handling (e.g., pushing a passive object into the user's hand), haptic feedback for Mixed Reality (MR) (e.g., Robotecture provides the haptic sensation of a virtual surface by physically simulating its shape), and tangible telepresence (e.g., manipulating a remote object through physical telepresence enabled by shape-changing).

In summary, our work makes the following contributions:

- Design an expandable, self-lifting, shape-changing interface using actuated support beams.

- Implement prototypes with different layouts, and showcase several applications that demonstrate the potential of our system, enabled by its novel self-lifting, sparse-grid structure.

2 Related Work

SCUIs play an important role in human-computer interaction, enabling unique and engaging experiences by integrating digital and physical environments. Because SCUI research spans multiple domains, innovation in SCUIs has advanced alongside related fields such as computer graphics, computer vision, robotics, materials science, media arts, and interactive design [4, 29, 31, 41]. Researchers have extensively explored various aspects of SCUIs, such as mechanisms, techniques, and functionality. A wide range of mechanisms and techniques have been developed to implement the actuators, including mechanical structures [2, 7, 12, 23, 40], inflatable (e.g., pneumatic, hydraulic) components [21, 38, 45], and smart materials (e.g., shape memory alloys/polymers) [3, 4, 19, 32, 33]. Consequently, these developments have given rise to new interactions in addition to displays [7, 9, 12], such as dynamic physical affordances [7, 38], object manipulation [1, 16, 20], physical telepresence [11, 18, 34], and haptic feedback for MR [35, 39, 43, 47].

2.1 Linear Mechanical Actuation

Robotecture uses linear mechanical actuation to transform its shape. Linear mechanical actuation is one of the most popular approaches to SCUIs; it typically converts the actuator's rotary motion into linear motion. In our system, the rotary motion of the motor is converted into raising and lowering motions that change the unit's elevation. Pin-based SCUIs, among the most popular SCUIs, typically use linear mechanical actuation [5, 17, 25, 30, 44]. For example, Project FEELEX [12] and inFORM [7] are milestone tabletop pin-based SCUIs for 2.5D shape display, using different designs and different types of motors and linkages to retract pins independently. Most pin-based architectures place the actuation structure beneath the shape-changing surface (e.g., inFORM's 2.5D display). Materiable [26] uses a mechanism similar to inFORM's, but in addition to a simple shape-changing display, its touch detection capability allows it to perceive interactions between humans and the rendered displays. Pin-based SCUIs also come in various sizes: ShapeShift [35] and PoCoPo [47] use a mechanism similar to inFORM's at smaller scales (ShapeShift is a mobile tabletop SCUI, and PoCoPo is a handheld SCUI). Their portability and light weight make them easier to pair with XR environments to enhance the haptic experience of users immersed in virtual environments. MagneShape [46] is another pin-based SCUI that uses the magnetic field between magnetic pins and magnetic sheets to move the pins rather than electronic actuators. Elevate [13] is a room-scale pin-based shape-changing structure that can support human body weight and enables users to walk over it.

Compared to prior work, Robotecture does not rely on actuators underneath for lifting or lowering. Instead, it focuses on leveraging the shape-changing output in the form of a continuous and transformable layer. The layer can flex to various forms while maintaining a connected structure throughout the transformations. It also emphasizes larger, room or table scale designs with low-resolution yet comfortable interfaces, as opposed to smaller, handheld designs with high-resolution shape displays. Our unique beam structure enables us to simulate extensive surfaces with sparsely placed robotic units, making Robotecture a modular and affordable option for such interactions. Furthermore, our lightweight design allows us to expand the dimensions (vertically and horizontally) of dynamic shape displays by easily installing Robotecture as walls, roofs, and floors.

2.2 Self-lifting Structure

Another primary feature of Robotecture is its self-lifting structure. Unlike most pin-based SCUIs, self-lifting structures can support themselves and generate lifting force without relying on external mechanisms [22]. For example, truss-based SCUIs such as TrussForm [15] and PneuMesh [10] use truss structures to construct larger and more complex shapes. Mori [2], an origami-inspired SCUI, is a modular and reconfigurable system that uses origami-like folding to fold each unit up to a vertical angle. Lumina [14], constructed with soft kinetic materials, changes its form by actuating elastic soft skins with shape-memory alloy (SMA) skeletons. JamSheets [28] focuses on creating a thin and lightweight SCUI using a layer-jamming mechanism. Gonzalez et al. [8] introduced a constraint-driven robotic surface system that uses squeezing force to push and lift its robotic units. While Robotecture shares a design concept with other self-lifting SCUIs, it differs in how each modular unit is designed and how units form larger structures. Other self-lifting SCUIs offer better stability and can construct more complex shapes, whereas Robotecture has a limited height range and cannot fold up. However, Robotecture focuses on actively and conveniently forming dynamic surfaces on its own with its existing structure, while systems like Mori [2] and PneuMesh [10] must be prefabricated or partially altered to provide different functionality in different scenarios. Additionally, Robotecture offers lower implementation cost and technical simplicity, especially at larger scales.

2.3 Tangible Interactions

With the development of SCUIs, tangible interactions have benefited significantly, further blurring the boundaries between the digital and physical worlds. These advancements push the envelope for how users physically engage with digital content through interactive experiences and haptic feedback [11, 31, 36, 42].

Among the various tangible interactions, dynamic physical affordances allow users to interact with a deforming or reconfiguring shape to achieve different functionalities (e.g., physical buttons can dynamically change their shapes in response to users' interactions [7]). Tangible telepresence helps users interact with remote objects and collaborators through the sense of physical presence provided by the SCUI (e.g., users can manipulate an object through their remote physical embodiment provided by the SCUI [18]). Haptic feedback for VR enables users to feel tactile sensations during their physical interactions with virtual objects [35, 37, 43, 47]. Object handling involves the physical manipulation of real-world objects using actuation capabilities (e.g., physically moving passive objects autonomously [7]).

A unique benefit of the sparse grid structure is that it connects the spaces above and below the surface. Also, the hollow spaces between the support beams allow smaller objects to pass through the surface. Therefore, Robotecture enables novel interactions that demand unique spatial requirements, such as hiding an object under the surface and then revealing the object from beneath.

3 Design

3.1 Design Goal

Our objective is to provide a dynamic, continuous surface suitable for simulating interactive physical environments such as furniture with flexible affordances and smart ergonomic architecture. To achieve this, the system needs to: 1. make efficient use of the space on both sides of the surface to simulate enclosures; 2. be able to construct large-scale surfaces at low cost; 3. be flexibly arranged in different layouts and at different scales to meet diverse user requirements; and 4. comfortably accommodate human interaction.

We propose the following feature design according to these goals:

3.1.1 Self-lifting Surface. As mentioned in Section 2, previous shape-changing displays, such as those using pin-based actuation, require extra supporting structures beneath the surface, which restricts the use of the space underneath. In contrast, Robotecture introduces a self-lifting surface that uses actuated beams to lift each unit without requiring extra support beneath it. This approach makes it possible to leverage both sides of the surface, opening up new interaction possibilities such as enclosure simulation for architecture, furniture, and intelligent storage systems.

3.1.2 Sparse Grid Design. Simulating large-scale surfaces, such as architecture, typically doesn't require high-resolution shape rendering. Therefore, we propose a sparse grid design that reduces the number of robotic units needed to form the surface while still displaying shapes with reasonable detail. This approach makes Robotecture a cost-effective solution for large-scale shape-changing displays suitable for furniture and architecture simulation. Additionally, the shape-rendering resolution can be further adjusted by varying the length of the supporting beams: longer beams result in fewer robotic units and lower resolution, while shorter beams provide higher resolution with more robotic units.
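To make the beam-length trade-off concrete, the following sketch counts the robotic units needed to fill a square span. It assumes that units occupy the interior grid nodes while the fixed support frame supplies the boundary nodes, so a span of k cells per side needs (k − 1) × (k − 1) units; this counting rule and the helper name `units_needed` are illustrative assumptions, not part of the system's specification.

```python
import math


def units_needed(span_cm: float, beam_cm: float) -> int:
    """Robotic units needed for a square span (hypothetical helper).

    Assumes units sit at the interior nodes of the grid and the fixed
    frame provides the boundary nodes, so k cells per side need k - 1
    units per side.
    """
    if beam_cm <= 0:
        raise ValueError("beam length must be positive")
    cells_per_side = math.ceil(span_cm / beam_cm)
    return max(cells_per_side - 1, 0) ** 2


# Longer beams -> fewer units (lower resolution); shorter beams -> more units.
for beam in (40, 20, 10):
    print(beam, units_needed(80, beam))  # 40 -> 1, 20 -> 9, 10 -> 49
```

With an 80 cm span between frame joints, 20 cm beams give a 3x3 layout, while halving the beam length raises the unit count from 9 to 49, which illustrates why a sparse grid keeps large surfaces affordable.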

3.1.3 Modular Robotic Unit. Our modular robotic unit design allows Robotecture to adapt to different user activities, making it easy to assemble surfaces of varying sizes and configurations. Much like assembling Lego blocks, the robotic units can be easily connected by stacking the beams together. This design choice enables new modules to be easily added to the existing grid by inserting motors into the unit chassis and connecting motors to support beams. With their self-lifting capability, the units can be arranged at various angles to create surfaces that go beyond horizontal and vertical planes. This design strategy promotes adaptability and customization, making Robotecture highly versatile for responsive physical environments.

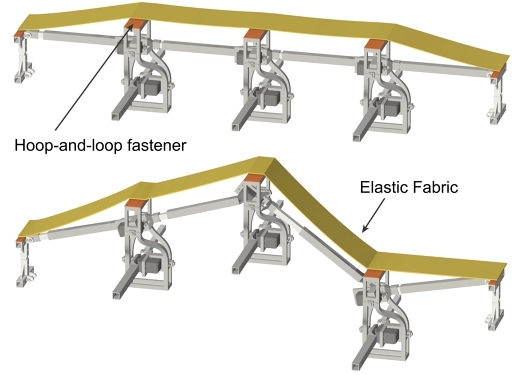

3.1.4 Smooth and Weight-tolerant Interface. For use cases such as smart furniture, the shape-changing surface must accommodate human interaction comfortably and support substantial weight. To achieve this, Robotecture is equipped with a fabric layer that covers its robotic grid, providing a smooth, soft, and user-friendly interface for human interaction.

As the units move, the surface enclosed by four adjacent beams will be reshaped continuously with a change in its surface area. Therefore, using elastic materials or deployable structures to cover the surface is a fundamental design choice for Robotecture. For use cases that require direct body contact, such as smart beds and chairs, soft materials such as elastic fabric blankets or sheets can be used. For applications requiring rigid connections, such as architectural floors or walls, rigid materials with deployable origami structures that can expand and contract to cover the surface are suitable.
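A rough way to see why the covering must stretch is to compute the area of a single cell whose four corners sit at different heights. The sketch below approximates the cell as two flat triangles split along one diagonal, which is an assumption (real fabric curves and sags), and uses the 20 cm cell size of the prototypes; the function names are hypothetical.

```python
import math


def _triangle_area(p, q, r):
    """Area of a 3D triangle via half the norm of the cross product."""
    ux, uy, uz = (q[i] - p[i] for i in range(3))
    vx, vy, vz = (r[i] - p[i] for i in range(3))
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def cell_area(heights, cell=20.0):
    """Approximate surface area of one grid cell.

    heights: corner heights (h00, h10, h11, h01) in cm, listed around the cell.
    The cell is split along the (0, 0)-(cell, cell) diagonal into two triangles.
    """
    h00, h10, h11, h01 = heights
    p00 = (0.0, 0.0, h00)
    p10 = (cell, 0.0, h10)
    p11 = (cell, cell, h11)
    p01 = (0.0, cell, h01)
    return _triangle_area(p00, p10, p11) + _triangle_area(p00, p11, p01)


print(round(cell_area((0, 0, 0, 0)), 1))   # 400.0 cm^2 for a flat cell
print(round(cell_area((0, 0, 20, 0)), 1))  # 565.7 cm^2 with one corner raised 20 cm
```

Under this approximation, raising one corner by a full cell width increases the area to be covered by roughly 41%, which a non-stretching sheet could not accommodate.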

Additionally, the incorporation of beam support enhances the surface's weight tolerance, reducing deformation under applied force and ensuring the system's overall robustness.

3.2 Design Process

Our fundamental design principle is to make the structure simple, efficient, and robust. The robotic unit design converts the motor's rotational motion into height adjustment by controlling the angle between two support beams. We will detail the mechanics further in the following sections. This straightforward and effective design aligns with our commitment to simplicity and ease of implementation. It not only reduces complexities in design and manufacturing but also reduces the potential for quality issues.

We iterated on the design of Robotecture over several rounds to improve its efficiency and strength. In each design cycle, we tested the stability and smoothness of both the mechanical and electrical components.

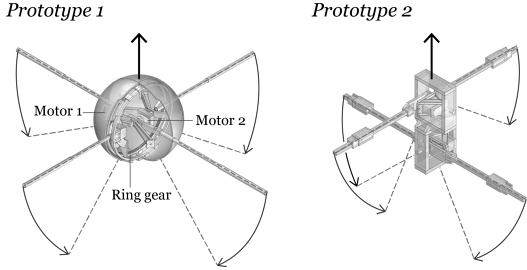

We initially designed the robotic unit in the shape of a sphere with four beams connected to its center. We placed two motors as shown in Figure 3 (prototype 1) and used a ring gear design to guide the movement of the beams connected to the motors. The system relied on the precise shape of the gears, which were supported by the inner surface of the sphere. We found that this sphere, printed in PLA, could easily deform within a few days. Moreover, the complicated inner structure limited the space available for the motors, making it hard to use large, powerful motors to lift the unit efficiently.

To simplify the structure and make it more robust, we revised the design into its current form, in which the beams from the two directions are stacked in different layers without relying on ring gear guidance, leaving more space for larger motors. This allowed us to upgrade the motors to provide more reliable power for the movement. We also strengthened the beams while keeping them light, so the final version is more stable and powerful and can be expanded to larger grids. This iterative process led to a refined design suited to a variety of interactive applications.

4 Implementation

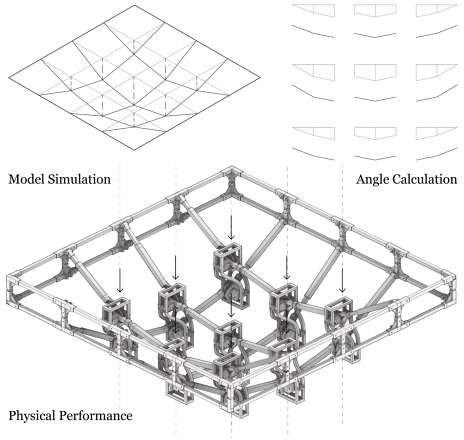

Robotecture is implemented as a self-lifting, modular, sparse-grid shape-changing user interface. It is constructed from a series of identical robotic units, aligned in a grid layout. Each unit incorporates a modular design, equipping the system with the potential for arranging into a flexible layout and grid size. Its innovative beam-based design facilitates the simulation of large-scale continuous surfaces in a cost-effective manner.

4.1 Mechanical Design and Implementation

As introduced earlier, Robotecture ensures that each individual unit in a grid layout can be interlinked with other identical units by connecting their support beams. Each unit has 4 support beams extending in 4 different directions along the sagittal and frontal axes. Therefore, the system can scale up by aligning units along these axes. Due to the modular design, when one unit malfunctions, it can be easily replaced without affecting the entire system. We now break down the mechanical design and implementation of one representative unit.

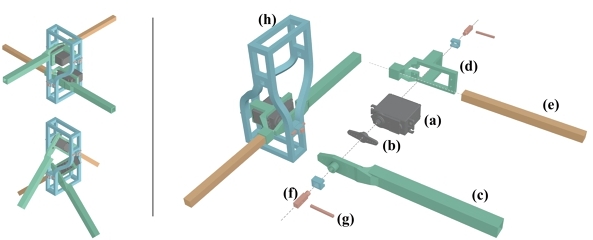

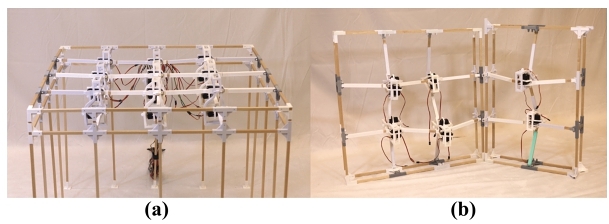

4.1.1 Modular Actuator. Each actuated unit is housed in a chassis (15 cm x 10 cm x 10 cm, see Figure 4(h)) and is equipped with two servo motors; each motor's shaft is linked to the joint of two support beams connected at one end. The motor transmits the rotational force to the two support beams, then to the adjacent components, and finally to the support frame. As a result, the motors' rotation raises and lowers the unit.

The servo motors used in the Robotecture prototypes are MG996r models (Figure 4(a)), which have a maximum torque of 1.47 N·m at 6V. In the 3x3 prototype, we use two 16-channel PWM servo drivers (PCA9685), and a 12V 50A power supply powers all 18 motors via two 6V 25A power adapters and two power distribution boards. The system is controlled by an Arduino UNO microcontroller, and the control software manages the rotation (and thus the height) of each unit through serial communication.
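For readers reproducing the electronics, the sketch below shows one way to convert a servo angle into a PCA9685 duty-cycle count and to address the 18 motors across two 16-channel boards. The 50 Hz frame rate, the 500 to 2500 µs pulse range, and the nine-motors-per-board wiring are illustrative assumptions (MG996r units vary and should be calibrated); only the 12-bit counter is a property of the PCA9685 itself.

```python
# Assumed servo timing; calibrate against the actual MG996r units.
SERVO_FREQ_HZ = 50
MIN_PULSE_US = 500
MAX_PULSE_US = 2500
PCA9685_COUNTS = 4096  # the PCA9685 divides each PWM period into 12-bit counts
MOTORS_PER_BOARD = 9   # hypothetical wiring: 9 motors on each of the two boards


def angle_to_counts(angle_deg: float) -> int:
    """Convert a servo angle (clamped to 0-180 degrees) to a PCA9685 OFF count,
    assuming the ON count is 0."""
    angle = max(0.0, min(180.0, angle_deg))
    pulse_us = MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180.0
    period_us = 1_000_000 / SERVO_FREQ_HZ
    return round(pulse_us / period_us * PCA9685_COUNTS)


def motor_address(unit: int, motor: int) -> tuple[int, int]:
    """Map (unit 0-8, motor 0-1) to (board, channel) under the assumed wiring."""
    index = 2 * unit + motor
    return divmod(index, MOTORS_PER_BOARD)


print(angle_to_counts(0), angle_to_counts(90), angle_to_counts(180))  # 102 307 512
print(motor_address(8, 1))  # (1, 8)
```

Splitting the motors evenly across the two boards is only one option; any mapping works as long as the firmware and the host agree on it.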

4.1.2 Telescopic Beams. For each unit, every support beam consists of two rigid poles, with the internal pole sliding inside the external tube so that the beam can collapse or extend telescopically. In our prototype, the internal pole is a wooden square dowel rod (Figure 4(e)) measuring 0.95 cm on each side and 16 cm in length, and the external tube is a 3D-printed tube (Figure 4(c)) in which the wooden rod slides smoothly in and out. Because each telescopic beam is connected both to its own unit's motor and to the adjacent unit's motor, it collapses or extends in response to the motors' rotations.
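Because the horizontal spacing between units is fixed, each telescopic beam must change length whenever the height difference across it changes. A minimal sketch, assuming the beam pivots at the motor shafts of two units spaced one 20 cm grid cell apart (the helper names are hypothetical):

```python
import math

GRID_CELL_CM = 20.0  # horizontal spacing between neighbouring units (Section 4.1.4)


def beam_span_cm(h_unit: float, h_neighbour: float, cell: float = GRID_CELL_CM) -> float:
    """Pivot-to-pivot beam length needed for a given height difference."""
    return math.hypot(cell, h_unit - h_neighbour)


def extension_cm(h_unit: float, h_neighbour: float, cell: float = GRID_CELL_CM) -> float:
    """How much longer the beam must be than when both units are level."""
    return beam_span_cm(h_unit, h_neighbour, cell) - cell


print(round(beam_span_cm(20, 0), 1))   # 28.3
print(round(extension_cm(-20, 0), 1))  # 8.3
```

At the ±20 cm height difference reported for the prototypes, the beam must reach about 28.3 cm, roughly 8.3 cm longer than in the flat configuration, which is the travel the sliding rod has to provide.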

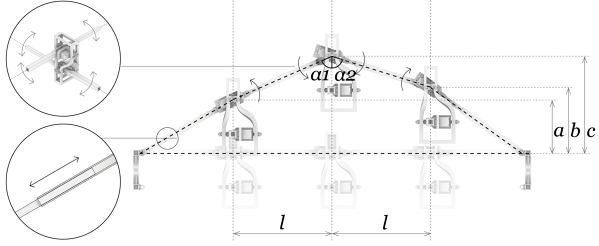

4.1.3 Rotational Motion to Height Adjustment. The angle between each motor's two support beams is controlled by the motor's rotation, and because of the mechanical structure, changing this angle changes the unit's height. As depicted in Figure 5, when the angle α is 180 degrees, the unit is at its neutral position (the two beams are aligned in a straight line); when α (= α1 + α2) is smaller than 180 degrees, the unit lifts itself up; and when α is larger than 180 degrees, the unit lowers itself down.

To control the height adjustment, we need to calculate the rotation required for each motor. For each pair of support beams, the angle α between them determines the rotation required from the motor. We derive α by calculating α1 and α2 with Equations 1 and 2. The method first computes tan(α1) and tan(α2) from the trigonometric ratios in Equation 1, where l is the horizontal distance from the unit to the adjacent unit (also the cell size of the grid) and (b − a) and (b − c) are the corresponding height differences between the units. We can then derive tan(α) and subsequently use arctan to calculate the rotation angle α. In this way, we create a mapping between rotation and height, which allows the system to control height as a function of rotation.

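$$\tan\alpha_1 = \frac{l}{b-a}, \qquad \tan\alpha_2 = \frac{l}{b-c} \tag{1}$$

$$\tan\alpha = \tan(\alpha_1+\alpha_2) = \frac{\tan\alpha_1+\tan\alpha_2}{1-\tan\alpha_1\tan\alpha_2} \tag{2}$$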

Finally, we use arctan to obtain the rotation angle α. Note that the tan function is periodic, so arctan alone cannot distinguish between angles that differ by 180 degrees. Therefore, to make the mapping injective, we restrict the domain by comparing the heights of the three adjacent units and checking whether the angle is greater or smaller than 180 degrees. Generally speaking, a smaller angle α results in a greater height, and a larger angle α results in a lower height for the unit. However, the mechanical structure limits the actual angle to a range of 90 to 270 degrees: freedom of movement is bounded by the length of the support beams and by the chassis, which must leave space for the beams to move without colliding with it.
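The height-to-angle mapping can be sketched in a few lines. Instead of applying arctan and then resolving the branch by comparing heights, this version uses `math.atan2`, which returns the correct quadrant directly; that substitution is ours, as is the assumption that α1 and α2 are measured from the downward vertical at the unit, so that tan α1 = l/(b − a) and tan α2 = l/(b − c). The clamp encodes the 90 to 270 degree mechanical limit, and `servo_command_deg` is a hypothetical mapping of that range onto a 0 to 180 degree servo.

```python
import math


def beam_angle_deg(a: float, b: float, c: float, l: float) -> float:
    """Angle alpha (degrees) between a unit's two opposite beams.

    a, c: heights of the two neighbouring units (or frame joints)
    b:    height of this unit
    l:    horizontal grid spacing
    atan2(l, b - a) equals arctan(l / (b - a)) but lands in (0, 180) degrees,
    so no separate branch check is needed when b < a or b == a.
    """
    alpha1 = math.degrees(math.atan2(l, b - a))
    alpha2 = math.degrees(math.atan2(l, b - c))
    return alpha1 + alpha2


def clamp_to_mechanical_range(alpha: float, lo: float = 90.0, hi: float = 270.0) -> float:
    """Limit alpha to the range the chassis and beams allow."""
    return max(lo, min(hi, alpha))


def servo_command_deg(alpha: float) -> float:
    """Hypothetical mapping of alpha in [90, 270] onto a 0-180 degree servo."""
    return clamp_to_mechanical_range(alpha) - 90.0


print(round(beam_angle_deg(0, 0, 0, 20)))    # 180: neutral
print(round(beam_angle_deg(0, 20, 0, 20)))   # 90: raised one cell
print(round(beam_angle_deg(0, -20, 0, 20)))  # 270: lowered one cell
```

With l = 20 cm, a unit 20 cm above both neighbours gives α = 90° and one 20 cm below gives α = 270°, exactly the ends of the mechanical range.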

4.1.4 Support Frame. The outermost rigid support frames of our prototypes are assembled from 3D-printed joints connecting square wooden rods (0.95 cm on each side, in two lengths: 20 cm and 8.5 cm). The dimensions of the frame depend on the layout of the target setup. Each grid cell (step width) is 20 cm; for our prototypes, the 2x2 layout (see Figure 2) is 64 cm x 64 cm and the 3x3 layout (see Figure 7(a)) is 85 cm x 85 cm.

4.1.5 Fabric Display. As Figure 8 shows, an elastic fabric layer can be attached to the top of Robotecture to render the approximate shape of a smooth surface. In our prototype, the fabric is attached to each unit and to the frame edge with hook-and-loop tape, so that it can be exchanged for other materials. Our applications use different types of elastic fabric, transparent or opaque, to serve various interaction purposes.

4.1.6 Software and Electronic Control. In our 3x3 implementation, one Arduino UNO board processes the model and commands two Adafruit PCA9685 servo driver boards, which generate the PWM (pulse-width modulation) signals that rotate the 18 servo motors in the 9 Robotecture units to the desired angles. For power, a 12V regulated supply is stepped down to 6V with a power transformer and connected to the motors through a power distribution board. The prototype communicates with a laptop computer (Windows operating system, i7-1365U CPU, 32GB memory, integrated Intel GPU) through a serial port. We implemented our applications with Unity and WebXR.
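One possible host-side framing for sending the 18 target angles over the serial link is sketched below; the format is purely hypothetical. It uses a 0xFF start byte (which no angle from 0 to 180 can equal), one byte per motor, and a checksum taken modulo 255 so that the checksum can never be mistaken for the start byte either.

```python
START_BYTE = 0xFF  # hypothetical start marker; angles 0-180 never equal it
NUM_MOTORS = 18    # 9 units x 2 motors in the 3x3 layout


def encode_frame(angles_deg) -> bytes:
    """Pack 18 servo angles (clamped to 0-180) into a start byte, body, and checksum."""
    if len(angles_deg) != NUM_MOTORS:
        raise ValueError(f"expected {NUM_MOTORS} angles, got {len(angles_deg)}")
    body = bytes(max(0, min(180, round(a))) for a in angles_deg)
    checksum = sum(body) % 255  # range 0-254, so it cannot collide with START_BYTE
    return bytes([START_BYTE]) + body + bytes([checksum])


def decode_frame(frame: bytes) -> list[int]:
    """Validate a frame (as the receiving firmware would) and return the angles."""
    if len(frame) != NUM_MOTORS + 2 or frame[0] != START_BYTE:
        raise ValueError("malformed frame")
    body, checksum = frame[1:-1], frame[-1]
    if sum(body) % 255 != checksum:
        raise ValueError("checksum mismatch")
    return list(body)


frame = encode_frame([90] * NUM_MOTORS)
print(len(frame), decode_frame(frame)[:3])  # 20 [90, 90, 90]
```

On the host, the resulting bytes could be written with pySerial, for example `serial.Serial("COM3", 115200).write(frame)`, where the port name and baud rate are placeholders.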

5 Technical Evaluation

We present the technical evaluation of Robotecture's prototype to demonstrate its physical capabilities, performance, and the feasibility of its structural design.

5.1 Robotic Unit Specifications

A single Robotecture unit (including its two motors and four beams) weighs 218 grams, and each servo motor's maximum torque is 1.47 N·m. Each unit can lift up to 1 kg, as measured with a digital force meter. The cost of each unit in our prototype, including 3D printing filament, is under 25 US dollars. For the 3x3 layout, the total cost including the control system and the outer frame is approximately 300 US dollars, covering an area of 2.8 ft x 2.8 ft (85 cm x 85 cm).
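As a back-of-the-envelope check on these figures, the force a servo can exert at the end of a beam is its torque divided by the lever arm. The sketch below uses the 1.47 N·m rating and takes the 20 cm grid cell as the lever arm; it ignores beam and chassis weight, friction, and how the load is shared between the two motors and neighbouring units, so it is only an order-of-magnitude estimate and not the load model behind the measured values.

```python
G = 9.81  # gravitational acceleration, m/s^2


def max_end_force_n(torque_nm: float, lever_m: float) -> float:
    """Largest force a motor can hold at the end of a lever of the given length."""
    if lever_m <= 0:
        raise ValueError("lever arm must be positive")
    return torque_nm / lever_m


def newtons_to_kg(force_n: float) -> float:
    """Equivalent supported mass under standard gravity."""
    return force_n / G


f20 = max_end_force_n(1.47, 0.20)
print(round(f20, 2), round(newtons_to_kg(f20), 2))  # 7.35 0.75
print(round(max_end_force_n(1.47, 0.40), 3))        # 3.675: twice the beam, half the force
```

This gives about 7.35 N, or roughly 0.75 kg, per motor at 20 cm, the same order of magnitude as the measured values, and doubling the lever arm halves it.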

Each Robotecture unit is built with a few key materials: 3D-printed parts like the supporting chassis, motor bases, and external tubes, which cost about $10. Each unit also includes two MG996r motors for $9 and wooden beams for $1. For the control system and outer frame, the total cost is around $60 for an Arduino microcontroller, power supply, and wiring. In addition, the outer frame includes extra 3D-printed and wooden components, adding $30 to the overall cost.
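The itemized costs above can be totalled as follows. Treating the control system and frame as fixed costs is an assumption that only holds near the 3x3 size; larger grids would need more driver boards, power capacity, and frame material. The dictionary keys and function names are illustrative.

```python
# Per-unit bill of materials in US dollars, taken from the figures above.
UNIT_COST_USD = {
    "3d_printed_parts": 10,   # chassis, motor bases, external tubes
    "two_mg996r_motors": 9,
    "wooden_beams": 1,
}
CONTROL_COST_USD = 60    # Arduino, power supply, wiring
FRAME_EXTRA_USD = 30     # extra 3D-printed and wooden frame parts


def unit_cost() -> int:
    return sum(UNIT_COST_USD.values())


def layout_cost(rows: int, cols: int) -> int:
    """Total cost, assuming control and frame costs stay fixed (near 3x3 only)."""
    return rows * cols * unit_cost() + CONTROL_COST_USD + FRAME_EXTRA_USD


print(unit_cost(), layout_cost(3, 3))  # 20 270
```

Per unit this sums to $20, within the stated $25 bound, and a 3x3 layout comes to $270, consistent with the approximate $300 total.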

The maximum operating speed of the MG996r motor is 0.17 s/60° at 4.8V and 0.14 s/60° at 6V, and the speed can be reduced through electronic control. The smoothness of transitions results from the interplay between the motors and the friction among contacting components. The static friction coefficient between 3D-printed PLA plastic and wood is approximately 0.4, while the sliding friction coefficient is about 0.1. Consequently, starting from rest or reversing direction demands a substantial peak torque from the motors. Furthermore, the motors' rotational movements may not always be perfectly smooth. Nevertheless, in our prototypes we observed that any achievable shape-changing configuration could be reached within 1.3 seconds.
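The speed rating translates directly into a lower bound on motion time, and slowing a servo electronically usually means stepping its set-point gradually. The sketch below shows both; the linear ramp, the step size, and the function names are illustrative choices, not the prototype's actual control scheme.

```python
import math

SEC_PER_60_DEG_6V = 0.14  # MG996r rated no-load speed at 6 V


def min_rotation_time_s(delta_deg: float) -> float:
    """Shortest possible time to rotate delta_deg at the rated 6 V speed."""
    return abs(delta_deg) / 60.0 * SEC_PER_60_DEG_6V


def ramp_setpoints(start_deg: float, target_deg: float, max_step_deg: float) -> list[float]:
    """Evenly spaced intermediate set-points; no step exceeds max_step_deg."""
    if max_step_deg <= 0:
        raise ValueError("max_step_deg must be positive")
    steps = max(1, math.ceil(abs(target_deg - start_deg) / max_step_deg))
    return [start_deg + (target_deg - start_deg) * k / steps for k in range(1, steps + 1)]


print(round(min_rotation_time_s(180), 2))  # 0.42
print(ramp_setpoints(90, 120, 10))         # [100.0, 110.0, 120.0]
```

A full 180° sweep at 6V takes at least 0.42 s unloaded, well under the observed 1.3 s for loaded shape changes; sending each ramp set-point at a fixed interval caps the angular speed at max_step_deg per interval.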

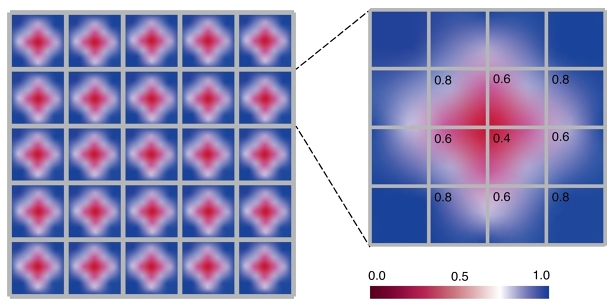

5.2 Load Capacity

Because the units lift themselves through rotation, the load capacity of each unit is limited by motor torque. In the self-lifting design, the outer units (nearer to the support frame) bear the weight exerted by the inner units. Consequently, the load capacity of each unit depends on its position in the layout. Figure 9 shows a heatmap of the maximum weight bearing for our 3x3 prototype: the limit is lower at the center (up to 0.4 kg) and higher for the units closer to the frame (up to 0.8 kg). Units near the center create higher torque demands and thus can support less weight. Additionally, increasing the beam length (currently 20 cm) while keeping the same motor would decrease the maximum force at the beam's end in inverse proportion to the longer lever arm, which in turn would reduce the weight-bearing capacity. Based on the force analysis of the torque system, the weight bearing at the ith unit position of a line composed of N beams would be:

5.3 Height Range

The angle α at each unit, formed by the beams to its adjoining units, is limited to a range of 90 to 270 degrees because the freedom of movement is bounded by the unit's chassis and the length of the support beams. For our prototypes, the unit's height range is limited to -20 cm to +20 cm relative to the neutral position (when α is 180 degrees), so this angle range yields a height range of one grid cell width in each direction. As a result, a larger or smaller Robotecture system will have a correspondingly greater or lower height range when the unit size or the number of grid units is proportionally increased or decreased. Therefore, the maximum height of a given unit in the grid equals its horizontal distance to the nearest outer frame.

6 Applications

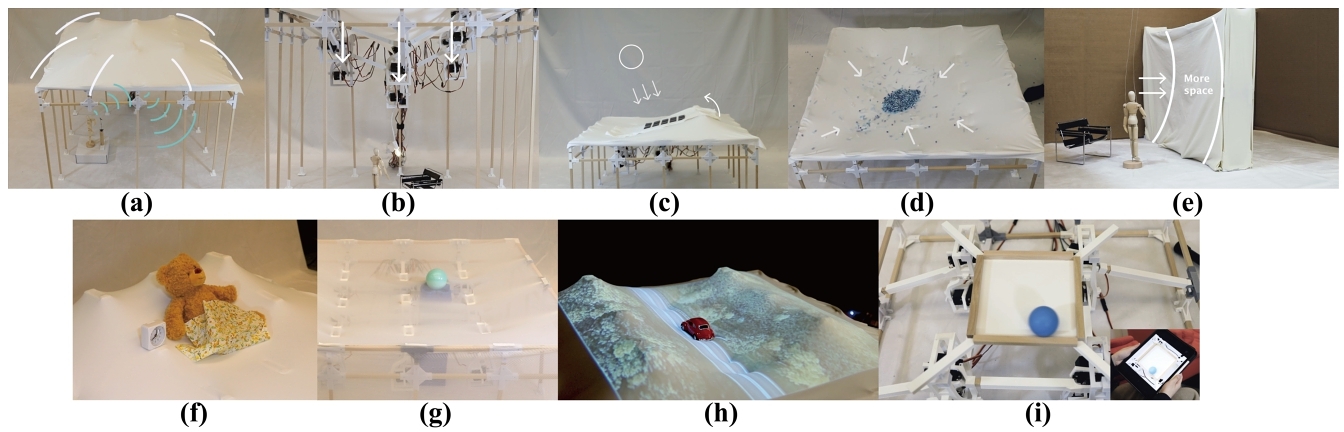

Robotecture is a lightweight and modular self-lifting dynamic surface, which facilitates interactions both with the surface and the enclosure created by that surface. We illustrate three key aspects of Robotecture's capabilities: 1) Large-scale Enclosure Simulation, 2) Smooth Surface Haptic Display, and 3) Object Handling, utilizing 1x2, 2x2, and 3x3 layout configurations. These demonstrations showcase Robotecture's potential to provide novel interactions in the field of SCUIs.

6.1 Enclosure Simulation

Robotecture's beam-based support structure enables the simulation of shape-changing interfaces with sparse lifting node configurations. Its cost-efficiency makes it a viable choice for large-scale displays that do not necessitate high-resolution shaping. Furthermore, because it is self-lifting, Robotecture allows its under-surface space to be available for use, thereby enabling an actively configurable enclosure, rather than just a 2.5D surface with the underneath space left unusable.

6.1.1 Smart Architecture. Architecture serves as an exemplary use case for large-scale enclosures facilitated by Robotecture. By employing Robotecture units as dynamic, shape-changing roofing structures, we unlock an array of possibilities that traditional, rigid architectural elements cannot achieve. This not only enhances user convenience but also improves the building's adaptability to environmental conditions. We used our prototype as a miniature architecture model to illustrate several scenarios using a 3x3 layout:

- Intelligent Opera House: In Figure 10(a), we simulated a smart opera house in which the ceiling dynamically reshapes into a semi-spherical structure to optimally reflect the performer's voice toward the audience.

- Solar-Powered Roof: In Figure 10(c), with solar panels affixed to the rooftop, the structure can detect the sun's direction and automatically tilt the roof to maximize sunlight exposure, optimizing solar energy collection and contributing to eco-friendly home design.

- Rainwater Collection: When it rains, the roof can reconfigure itself to form a central dip that channels water toward a single collection point. In Figure 10(d), we simulated rainfall using beads and demonstrated how the roof surface would depress upon sensing rainfall. This feature could also support eco-friendly architectural designs by improving the utilization of natural resources.

- Light Bulb Replacement: In Figure 10(b), if a faulty light bulb hanging from the ceiling is out of the user's reach, the ceiling node to which it is attached can lower itself, allowing for easy replacement. The same principle extends to similar situations, such as hanging paintings or accessing items stored overhead, improving convenience and object accessibility.
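Each roof scenario above amounts to commanding a target height map for the 3x3 grid of lifting nodes. The following Python sketch is a hypothetical illustration, not the authors' control code: it assumes normalized node heights in [0, 1], and the helper names (`radial`, `tilt_toward`, `lower_one`) as well as the linear profiles and fixed slope are simplifications of the shapes shown in Figure 10.

```python
import math

# Hypothetical normalized height range for each lifting node (0 = lowest, 1 = highest).
H_MIN, H_MAX = 0.0, 1.0


def clamp(h: float) -> float:
    """Keep a target height inside the actuators' (assumed) travel range."""
    return max(H_MIN, min(H_MAX, h))


def radial(grid: int, center_h: float, edge_h: float) -> list[list[float]]:
    """Heights vary linearly with distance from the grid center.

    center_h > edge_h gives a dome (opera-house ceiling);
    center_h < edge_h gives a central dip (rainwater collection).
    """
    c = (grid - 1) / 2
    r_max = math.hypot(c, c) or 1.0  # corner distance; avoid 0 for a 1x1 grid
    return [[clamp(center_h + (edge_h - center_h) * math.hypot(i - c, j - c) / r_max)
             for j in range(grid)] for i in range(grid)]


def tilt_toward(grid: int, sun_azimuth_rad: float, mid_h: float = 0.5,
                slope: float = 0.2) -> list[list[float]]:
    """A plane that is lowest on the side facing the sun (solar roof).

    The slope is a fixed, assumed value; a real controller might derive it
    from the sun's elevation.
    """
    c = (grid - 1) / 2
    ux, uy = math.cos(sun_azimuth_rad), math.sin(sun_azimuth_rad)
    return [[clamp(mid_h - slope * ((i - c) * ux + (j - c) * uy))
             for j in range(grid)] for i in range(grid)]


def lower_one(grid: int, node: tuple[int, int], rest_h: float = 1.0,
              lowered_h: float = 0.2) -> list[list[float]]:
    """Flat ceiling with a single node lowered (e.g. to reach a light bulb)."""
    return [[lowered_h if (i, j) == node else rest_h
             for j in range(grid)] for i in range(grid)]
```

For example, `radial(3, 1.0, 0.5)` raises the center node above the corners for the opera-house ceiling, while `radial(3, 0.0, 0.6)` produces the central dip for rainwater collection. Whether a given map is physically reachable still depends on the height-range limits discussed in Section 7.1.1.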

6.1.2 Dynamic Space Division. For room-scale interactions, Robotecture can dynamically partition a room. In Figure 10(e), we demonstrate this concept with a 1x2 display that bisects a miniature house model into two distinct rooms. The space available in each room adjusts dynamically to occupancy: when an individual occupies one room, the Robotecture wall recedes, enlarging the occupied room while proportionally reducing the size of the unoccupied one. This functionality helps users make better use of limited space.
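One simple way to express this behavior is a policy that splits the available length between the two rooms in proportion to their occupants while guaranteeing each room a minimum size. This is a hypothetical sketch: the proportional rule and the `min_len` parameter are assumptions, since the demonstration only describes the wall receding toward the unoccupied room.

```python
def split_room(total_len: float, occupants_a: int, occupants_b: int,
               min_len: float) -> tuple[float, float]:
    """Return (length of room A, length of room B) for the movable wall.

    Hypothetical policy: space is shared in proportion to occupancy,
    an empty house is split evenly, and neither room shrinks below min_len.
    """
    if not 0 <= 2 * min_len <= total_len:
        raise ValueError("min_len must leave room for both sides")
    total_occ = occupants_a + occupants_b
    len_a = total_len / 2 if total_occ == 0 else total_len * occupants_a / total_occ
    # Clamp so that neither room falls below min_len.
    len_a = max(min_len, min(total_len - min_len, len_a))
    return len_a, total_len - len_a
```

With a single occupant in room A and `min_len=1.0`, `split_room(4.0, 1, 0, 1.0)` moves the wall until room A spans 3.0 and room B keeps the 1.0 minimum.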

6.2 Haptic Display

Stretching fabric over the sparsely arranged nodes produces a continuous, comfortable surface that could substantially alleviate the rigidity and discomfort associated with arrays of tangible pixels. This makes it particularly suitable for scenarios in which the user's body must contact the surface directly for ergonomic and comfortable interactions.

6.2.1 Smart Furniture Simulation. Its comfortable surface makes Robotecture a strong candidate for smart furniture simulation. The support beams also reinforce the surface, providing additional stability and safety for interactions such as sitting or lying on it. Using our 3x3 prototype, we developed a shape-shifting bed that transforms into a chair to help the user wake up when an alarm goes off. In Figure 10(f), the structure transitions from a planar surface (acting as a bed) into a chair-like form, physically rousing the user from sleep. Integrating pressure sensors and using a larger grid could allow the system to detect the user's body contours and subtly adjust the surface shape in response, optimizing comfort and ergonomic fit. This paves the way for adaptive living environments that continually adjust to user needs and preferences. By dynamically altering physical affordances, the system can make daily tasks more convenient and efficient, and the potential for novel forms of interaction spanning tangible and spatial experiences enriches the dialogue between people and their environment.
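A transition such as bed-to-chair can be generated by interpolating between two keyframe height maps. The sketch below assumes hypothetical 3x3 keyframes (`BED`, `CHAIR`) in normalized units and uses smoothstep easing so the motion starts and stops gently; neither the keyframes nor the easing curve are taken from the prototype.

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve on [0, 1] with zero slope at both ends."""
    t = max(0.0, min(1.0, t))
    return t * t * (3.0 - 2.0 * t)


def blend(a, b, t):
    """Interpolate two equally sized height maps at normalized time t."""
    s = smoothstep(t)
    return [[(1.0 - s) * x + s * y for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def transition(a, b, steps: int):
    """Return steps + 1 frames from a to b, including both endpoints."""
    return [blend(a, b, k / steps) for k in range(steps + 1)]


# Hypothetical keyframes: a flat bed, and a chair whose first row rises as a backrest.
BED = [[0.3, 0.3, 0.3] for _ in range(3)]
CHAIR = [[1.0, 1.0, 1.0], [0.3, 0.3, 0.3], [0.3, 0.3, 0.3]]
```

Sending `transition(BED, CHAIR, 10)` frame by frame at a fixed rate would raise the backrest row gradually while the seat rows stay put; easing the endpoints avoids jolting a user lying on the surface.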

6.2.2 Haptic Display for MR. Robotecture can embody virtual models in physical reality, acting either as a tangible display with projection mapping or as a source of tactile feedback in VR. In Figure 10(h), we constructed a terrain simulation of a vehicle driving along a mountain road, combining projection mapping with a physical re-creation of trees of varying heights on the Robotecture surface. In Figure 1(d), we rendered a rocky landscape in VR and simulated that model on the Robotecture surface, so that users touching the surface receive haptic feedback aligned with the VR environment. By merging more surfaces at different layers and angles, we envision this system forming increasingly intricate and immersive spaces, such as a complete cave environment with floor, walls, and ceiling. Such an enclosing scenario would be difficult to achieve with pin-based displays, which require a supporting framework behind the surface. The smooth surface ensures comfortable interaction, while the easily interchangeable fabric allows different textures to be adopted quickly.
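Rendering a VR terrain on the sparse grid amounts to sampling the virtual heightmap at each node position and rescaling it into the actuators' travel range. The following sketch assumes the terrain is available as a dense 2D list of elevations and uses bilinear interpolation; the function names and the normalized output range are illustrative assumptions rather than the system's actual pipeline.

```python
import math


def bilinear(hm, x: float, y: float) -> float:
    """Bilinearly interpolate heightmap hm (rows = y, columns = x) at (x, y)."""
    rows, cols = len(hm), len(hm[0])
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0
    top = hm[y0][x0] * (1 - fx) + hm[y0][x1] * fx
    bottom = hm[y1][x0] * (1 - fx) + hm[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def terrain_to_nodes(hm, grid: int, h_min: float = 0.0, h_max: float = 1.0):
    """Sample hm at a grid x grid lattice and rescale into [h_min, h_max]."""
    if grid < 2:
        raise ValueError("need at least a 2x2 grid of nodes")
    rows, cols = len(hm), len(hm[0])
    samples = [[bilinear(hm, j * (cols - 1) / (grid - 1), i * (rows - 1) / (grid - 1))
                for j in range(grid)] for i in range(grid)]
    lo = min(min(r) for r in samples)
    hi = max(max(r) for r in samples)
    span = (hi - lo) or 1.0  # flat terrain maps to h_min everywhere
    return [[h_min + (s - lo) / span * (h_max - h_min) for s in r] for r in samples]
```

Because only one sample is taken per node, terrain features smaller than the node spacing are lost; the fabric cover then spans smoothly between the sampled heights.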

6.3 Object Handling

The hollow design between the beams facilitates objects passing through Robotecture's surface. This opens up possibilities for Robotecture to create novel object-handling interactions, such as concealing and revealing objects. Robotecture can also move objects by tilting its surface.

6.3.1 Hiding and Revealing Objects. In Figure 10(g), we showcase Robotecture's ability to conceal and reveal objects using a ball approximately 7 cm in diameter. A small slit was cut in the elastic fabric covering the surface to create a passageway for the ball, which initially rests on a base below the surface. When the beam structure lowers, the fabric is stretched by both the beams and the object, which opens the slit and lets the object pass up through the surface. Once the object is above the surface and the stretching force is reduced, the fabric returns to its original form, and the object rests stably on top of the now-closed surface. To return the ball to its storage underneath, the user can push it back through the slit; the fabric then returns to its original form, concealing the object. This process illustrates the potential of Robotecture as an interactive storage system that responds to users’ needs.
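The hide-and-reveal sequence can be summarized as a small state machine driven by actuator commands and user input. The sketch below is a hypothetical model of the sequence described above; the state and event names are ours, and we assume the stretching force is reduced by raising the node again (the `release` event).

```python
from enum import Enum, auto


class BallState(Enum):
    HIDDEN = auto()      # ball rests on the base beneath the fabric
    PASSING_UP = auto()  # node lowered: fabric stretched, slit open
    REVEALED = auto()    # stretching reduced: slit closed under the ball


# Allowed (state, event) -> next state transitions.
TRANSITIONS = {
    (BallState.HIDDEN, "lower_node"): BallState.PASSING_UP,
    (BallState.PASSING_UP, "release"): BallState.REVEALED,  # assumed: node raised again
    (BallState.REVEALED, "user_push"): BallState.HIDDEN,    # user pushes ball through slit
}


def step(state: BallState, event: str) -> BallState:
    """Advance the sequence, rejecting events that make no sense in this state."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state.name}") from None
```

Rejecting out-of-order events (for example, a push while the ball is still hidden) keeps the controller's model of where the object is consistent with the physical sequence.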

6.3.2 Object Actuation and Tangible Telepresence. Robotecture can also move objects on its surface by forming an inclined plane along which they slide. As shown in Figure 1(b), once Robotecture has brought the ball to the surface, it can slide the ball toward the user's hand based on the hand's position. In Figure 10(i), when connected to a network, Robotecture can be controlled remotely to manipulate physical objects from a distance, implementing tangible telepresence.
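Sliding an object toward the hand can be framed as building a plane that descends from the object's position toward the hand's position. The sketch below is a hypothetical illustration: it assumes node (i, j) sits at (i * spacing, j * spacing), that ball and hand positions are known in the same units, and a normalized height range. The helper `min_slide_angle` states the textbook condition for a block to start sliding (tan θ > μs); an idealized rolling ball needs only a slight incline.

```python
import math


def incline_toward(ball, hand, grid, spacing, angle_rad,
                   h_ball=0.5, h_min=0.0, h_max=1.0):
    """Node heights for a plane that descends from the ball toward the hand."""
    dx, dy = hand[0] - ball[0], hand[1] - ball[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        # Ball already at the hand: keep the surface level.
        return [[h_ball] * grid for _ in range(grid)]
    ux, uy = dx / dist, dy / dist   # unit vector from ball to hand
    grad = math.tan(angle_rad)      # height drop per unit horizontal distance
    heights = []
    for i in range(grid):
        row = []
        for j in range(grid):
            # Signed distance of this node along the ball-to-hand direction.
            along = (i * spacing - ball[0]) * ux + (j * spacing - ball[1]) * uy
            row.append(max(h_min, min(h_max, h_ball - grad * along)))
        heights.append(row)
    return heights


def min_slide_angle(mu_static: float) -> float:
    """Incline angle (radians) above which a block with static friction mu starts to slide."""
    return math.atan(mu_static)
```

For telepresence, the same function could be driven by a hand position received over the network instead of a local one; clipping to the height range means very steep or long inclines flatten out at the ends.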

7 Discussion

Compared to prior work discussed in Section 2, the primary contribution of Robotecture lies in its self-lifting ability, which eliminates the large supporting frameworks typically required by pin-based systems. This makes both sides of Robotecture potential interactive interfaces. For example, objects can rest on top of the surface or hang and hide beneath it, expanding the range of potential applications.

While we envision this technology expanding into various furniture designs, architectural applications, and object actuation methods, the current prototypes have limitations. We outline these limitations and potential directions for future work below:

7.1 Limitations

7.1.1 Height Range. As mentioned in Sections 4.1.3 and 5, the height range is bounded by the grid size, and adjacent units cannot form right angles. Therefore, to transition from a horizontal plane to a vertical surface, an origami-inspired SCUI (similar to Mori [2]) would be a reasonable approach, which we consider one of our future directions.
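A quick way to reason about this limit is to check whether a requested height map keeps every beam below some maximum inclination. The sketch assumes a fixed horizontal node spacing, a hypothetical maximum beam angle `max_angle_deg` (necessarily below 90°, since adjacent units cannot form right angles), and that outer-ring units are also connected by beams to a fixed frame at height `frame_h`; the actual constraint depends on the beam geometry described in Section 4.1.3.

```python
import math


def max_rise(spacing: float, max_angle_deg: float) -> float:
    """Largest height difference a single beam can span at the assumed angle limit."""
    if not 0.0 < max_angle_deg < 90.0:
        raise ValueError("beam angle must be strictly between 0 and 90 degrees")
    return spacing * math.tan(math.radians(max_angle_deg))


def is_feasible(heights, spacing: float, max_angle_deg: float,
                frame_h: float = 0.0) -> bool:
    """True if no beam (unit-unit or unit-frame) exceeds the angle limit."""
    limit = max_rise(spacing, max_angle_deg)
    rows, cols = len(heights), len(heights[0])
    for i in range(rows):
        for j in range(cols):
            h = heights[i][j]
            # Check right and lower neighbours so each beam is tested once.
            if j + 1 < cols and abs(h - heights[i][j + 1]) > limit:
                return False
            if i + 1 < rows and abs(h - heights[i + 1][j]) > limit:
                return False
            # Outer-ring units are assumed to be beamed to the fixed frame.
            on_edge = i in (0, rows - 1) or j in (0, cols - 1)
            if on_edge and abs(h - frame_h) > limit:
                return False
    return True
```

Chaining this limit from the frame inward, a unit k beams away from the frame can rise at most k times `max_rise` above it, which is one way to see why the reachable height range grows with grid size.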

7.1.2 Self-lifting and Weight Bearing. As discussed in previous sections, a side effect of self-lifting is that each unit's load capacity depends on its position in the layout: units in the center usually have a lower capacity than units closer to the outer frame. One way to overcome this issue while maintaining Robotecture's expandability is to connect smaller grids with rigid frames to form a larger surface, as depicted in Figure 9. This way, the capacity limitation only affects the inner part of each section, while the larger surface remains robust. However, this design further limits the height range of the overall surface and greatly reduces the range of shapes that can be formed at the scale of the larger surface.

Another side effect of the self-lifting design is that the cumulative free play of the mechanical structures (e.g. the small gaps between the inner and outer components of each support beam) and of the motors becomes noticeable under gravity as the number of units increases. In the 3x3 prototype, we observed slight sagging of the center unit when the system was placed horizontally. This sagging could reduce the precision of height adjustment as the number of grid units increases.
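A rough way to see why sag grows with grid size is to count how many beams separate each unit from the frame and assume, pessimistically, that each link contributes the same vertical free play in series. This is a hypothetical upper-bound model with an assumed `play_per_link`; the real mesh has parallel load paths that share the load, so actual sag should be smaller.

```python
def links_to_frame(i: int, j: int, n: int) -> int:
    """Beams between unit (i, j) and the nearest side of an n x n frame."""
    return min(i, j, n - 1 - i, n - 1 - j) + 1


def worst_case_sag(n: int, play_per_link: float):
    """Upper-bound sag map assuming every link's free play adds up in series."""
    return [[links_to_frame(i, j, n) * play_per_link for j in range(n)]
            for i in range(n)]
```

In the 3x3 layout only the center unit is two links from the frame, which matches where sagging was observed; in a 10x10 grid the central units are five links away.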

Another way to address these issues would be to use higher-precision components and higher-quality materials for the support beams instead of 3D-printed components. Using high-quality motors with better power-to-weight ratios and minimal free play would also help increase load capacity and reduce sag. For even greater capability, motors such as the RDS5180 150kg combined with aluminum alloy or carbon fiber beams would enhance both strength and durability. According to our calculations, this setup could support up to 1.2 kg at the center of a 10x10 grid structure, at an estimated cost of $80 per unit.

Additionally, our tests show that placing the prototypes vertically significantly recovers the load capacity of the outer units and reduces sagging, because in this orientation the weight is borne more by the support beams than by the motors.

7.1.3 Size and Resolution. Robotecture's sparse grid structure involves trade-offs between the size and resolution of the shape-changing display. In our prototypes, the individual units are generally larger than those of pin-based SCUIs. The primary cause is the size of the MG996R motor, which determines both the chassis dimensions and the beam lengths at certain levels. Consequently, the resolution of the sparse grid is lower than that of most pin-based SCUIs. We could use smaller motors (e.g. SG90) and increase their number to build a denser, higher-resolution version. However, this would reduce load capacity, as smaller motors typically produce lower torque. This reduced load capacity would be a concern, because Robotecture is primarily intended for larger scales, at least tabletop and room scale. The smaller prototypes showcased in previous sections are intended only to validate the design and mechanisms, since the smaller scale simplifies engineering and reduces cost.

7.1.4 Lack of Physical Activity Sensing. Robotecture primarily focuses on its shape-changing capabilities rather than on sensing physical activity. To simplify design and engineering, the mechanical structure therefore has no embedded force sensor to directly capture users’ input. In future work, we plan to integrate force sensing into our prototypes, for example by bonding torque sensors to the motor shafts, to enable more versatile interactive experiences. For instance, tabletop-sized Robotecture prototypes could help scan and replicate objects’ physical properties (similar to the functionality of inFORCE [24]).
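With a torque sensor on each shaft, a press on the surface could be estimated as the torque in excess of the static baseline (the torque needed to hold the current shape) divided by an effective moment arm. This hypothetical sketch assumes that simplified lever model and a fixed detection threshold; `baseline_nm`, `arm_m`, and `threshold_n` are illustrative parameters, not values measured on the prototype, and the baseline would in practice change with the commanded shape.

```python
def estimate_press_force(torque_nm: float, baseline_nm: float, arm_m: float) -> float:
    """Extra force at the node implied by shaft torque above the static baseline.

    Assumes a single effective moment arm arm_m between shaft and node;
    a real linkage would need a geometry-dependent mapping.
    """
    if arm_m <= 0.0:
        raise ValueError("moment arm must be positive")
    return (torque_nm - baseline_nm) / arm_m


def detect_presses(readings_nm, baseline_nm: float, arm_m: float, threshold_n: float):
    """Flag each torque sample whose implied force exceeds threshold_n."""
    return [estimate_press_force(t, baseline_nm, arm_m) > threshold_n
            for t in readings_nm]
```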

7.2 Future Work

Beyond the applications we have demonstrated, we envision several future scenarios that could be developed using Robotecture's design.

7.2.1 Adaptive Floors. One possible scenario is a building whose floors, constructed with the Robotecture architecture, dynamically change their shape, elevation, and curvature, and seamlessly connect with other floors by creating smooth walkable pathways, slopes, and surfaces. As a result, people of all abilities, including those with limited mobility, could move around freely and navigate between floors without taking stairs or elevators. For instance, suppose a user wants to enter their bedroom on the upper level of a duplex home. The Robotecture-based first floor reconfigures to lift the user while the second floor simultaneously lowers to meet them. Additionally, Robotecture's object-handling feature would make it efficient and convenient for users to transport heavy objects between floors.

To achieve this, Robotecture-based floors will require powerful motors, stronger frames, and stronger support beams to lift the structure and support the weight of humans. Industrial-level motors (e.g. Moog industrial motors) with torque over 981 N·m (capable of lifting 100 kg with a 1-meter linkage) would be candidates for this application. Our calculation shows that with Moog motors in a 3x3 grid with 1-meter beams, the central unit can bear the weight of an adult. With resilient materials capable of bearing human weight, such as metallic (e.g. aluminum alloy, steel) facets or woven polypropylene, individuals would be able to walk freely across the entire surface, including the areas covered by these materials, rather than being restricted to walking on the support beams. This scenario opens up research opportunities in functionalities enabled by eliminating the need for stairs or elevators, as well as in creating smart buildings with improved accessibility.
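The 981 N·m figure follows from the static torque balance τ = m·g·L for a mass m held at the end of a horizontal arm of length L: 100 kg × 9.81 m/s² × 1 m = 981 N·m. The helpers below reproduce that arithmetic; this is a static, worst-case estimate (arm horizontal) that ignores beam self-weight, dynamics, and safety factors, and the function names are our own.

```python
G = 9.81  # m/s^2; the value implied by 981 N·m for 100 kg on a 1 m arm


def holding_torque(mass_kg: float, arm_m: float, g: float = G) -> float:
    """Static torque needed to hold mass_kg at the end of a horizontal arm."""
    return mass_kg * g * arm_m


def liftable_mass(torque_nm: float, arm_m: float, g: float = G) -> float:
    """Largest mass a given torque can hold statically at distance arm_m."""
    return torque_nm / (g * arm_m)


def kgcm_to_nm(torque_kgcm: float, g: float = G) -> float:
    """Convert a torque rating in kg·cm to N·m."""
    return torque_kgcm * g / 100.0
```

The `kgcm_to_nm` helper is useful when comparing against hobby servos, whose torque ratings are usually quoted in kg·cm rather than N·m.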

7.2.2 Reshaping Room. Our vision extends to constructing an entire room, including floors, walls, and ceilings, from Robotecture. This would allow several of the applications we have showcased to be integrated into an intelligent room that reshapes according to its user's needs. In this vision, the floor could transform into various kinds of smart furniture, such as tables, chairs, and beds, and dynamically reshape to accommodate the user's body shape.

Robotecture can also help create customized public or private spaces based on spatial division requirements and users’ preferences. Unlike pin-based floors, the self-contained surface design leaves storage space beneath the surface, making efficient use of the room's volume. Furthermore, the beam structure combined with elastic surface covers provides a smooth surface with sufficient support, ensuring a comfortable and user-friendly interface for bodily contact.

8 Conclusion

We have presented the design of Robotecture and the implementation of its prototypes in various layouts; demonstrated Robotecture's features and novelties by showcasing some tangible interactions including dynamic physical affordances, object handling, haptic feedback for MR, and tangible telepresence; explored limitations and described future work. Compared to prior work, our self-lifting surface, enabled by a sparse grid structure and actuated support beams, offers unique benefits in developing a large-scale, low-cost, and modular SCUI with a mechanical structure that can help to enhance interactivity and connectivity through its hollow design for spaces both above and beneath the surface.

References

- Laurent Aguerreche, Thierry Duval, and Anatole Lécuyer. 2010. Reconfigurable tangible devices for 3D virtual object manipulation by single or multiple users. In Proceedings of the 17th ACM Symposium on Virtual Reality Software and Technology. 227–230.

- Christoph H Belke and Jamie Paik. 2017. Mori: a modular origami robot. IEEE/ASME Transactions on Mechatronics 22, 5 (2017), 2153–2164.

- Marcelo Coelho, Hiroshi Ishii, and Pattie Maes. 2008. Surflex: a programmable surface for the design of tangible interfaces. In CHI’08 extended abstracts on Human factors in computing systems. 3429–3434.

- Marcelo Coelho and Jamie Zigelbaum. 2011. Shape-changing interfaces. Personal and Ubiquitous Computing 15 (2011), 161–173.

- Severin Engert, Konstantin Klamka, Andreas Peetz, and Raimund Dachselt. 2022. Straide: A research platform for shape-changing spatial displays based on actuated strings. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–16.

- Aluna Everitt and Jason Alexander. 2017. PolySurface: a design approach for rapid prototyping of shape-changing displays using semi-solid surfaces. In Proceedings of the 2017 Conference on Designing Interactive Systems. 1283–1294.

- Sean Follmer, Daniel Leithinger, Alex Olwal, Akimitsu Hogge, and Hiroshi Ishii. 2013. inFORM: dynamic physical affordances and constraints through shape and object actuation. In Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology (UIST '13).

- Jesse T Gonzalez, Sonia Prashant, Sapna Tayal, Juhi Kedia, Alexandra Ion, and Scott E Hudson. 2023. Constraint-Driven Robotic Surfaces, At Human-Scale. In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology. 1–12.

- Mark Goulthorpe, Mark Burry, and Grant Dunlop. 2001. Aegis hyposurface: The bordering of university and practice. In Proc. of ACADIA. Association for Computer-Aided Design in Architecture, 344–349.

- Jianzhe Gu, Yuyu Lin, Qiang Cui, Xiaoqian Li, Jiaji Li, Lingyun Sun, Cheng Yao, Fangtian Ying, Guanyun Wang, and Lining Yao. 2022. PneuMesh: Pneumatic-driven truss-based shape changing system. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. 1–12.

- Hiroshi Ishii. 2007. Tangible user interfaces. Human-Computer Interaction: Design Issues, Solutions, and Applications (2007), 141–157.

- Hiroo Iwata, Hiroaki Yano, Fumitaka Nakaizumi, and Ryo Kawamura. 2001. Project FEELEX: adding haptic surface to graphics. In Proceedings of the 28th annual conference on Computer graphics and interactive techniques. 469–476.

- Seungwoo Je, Hyunseung Lim, Kongpyung Moon, Shan-Yuan Teng, Jas Brooks, Pedro Lopes, and Andrea Bianchi. 2021. Elevate: A walkable pin-array for large shape-changing terrains. In Proceedings of the 2021 CHI Conference on human Factors in Computing Systems. 1–11.

- Chin Koi Khoo and Flora D Salim. 2013. Lumina: a soft kinetic material for morphing architectural skins and organic user interfaces. In Proceedings of the 2013 ACM international joint conference on Pervasive and ubiquitous computing. 53–62.

- Robert Kovacs, Alexandra Ion, Pedro Lopes, Tim Oesterreich, Johannes Filter, Philipp Otto, Tobias Arndt, Nico Ring, Melvin Witte, Anton Synytsia, et al. 2018. TrussFormer: 3D printing large kinetic structures. In Proceedings of the 31st Annual ACM Symposium on User Interface Software and Technology. 113–125.

- Sébastien Kubicki, Sophie Lepreux, and Christophe Kolski. 2012. RFID-driven situation awareness on TangiSense, a table interacting with tangible objects. Personal and Ubiquitous Computing 16 (2012), 1079–1094.

- Matthijs Kwak, Kasper Hornbæk, Panos Markopoulos, and Miguel Bruns Alonso. 2014. The design space of shape-changing interfaces: a repertory grid study. In Proceedings of the 2014 conference on Designing interactive systems. 181–190.

- Daniel Leithinger, Sean Follmer, Alex Olwal, and Hiroshi Ishii. 2014. Physical telepresence: shape capture and display for embodied, computer-mediated remote collaboration. In Proceedings of the 27th annual ACM symposium on User interface software and technology. 461–470.

- Cheng Liu, Yizheng Tan, Chaowei He, Shaobo Ji, and Huaping Xu. 2021. Unconstrained 3D shape programming with light-induced stress gradient. Advanced Materials 33, 42 (2021), 2105194.

- Ke Liu, Felix Hacker, and Chiara Daraio. 2021. Robotic surfaces with reversible, spatiotemporal control for shape morphing and object manipulation. Science Robotics 6, 53 (2021), eabf5116.

- Jose Francisco Martinez Castro, Alice Buso, Jun Wu, and Elvin Karana. 2022. TEX (alive): A Toolkit To Explore Temporal Expressions In Shape-Changing Textile Interfaces. In Designing Interactive Systems Conference. 1162–1176.

- Satoshi Murata, Eiichi Yoshida, Akiya Kamimura, Haruhisa Kurokawa, Kohji Tomita, and Shigeru Kokaji. 2002. M-TRAN: Self-reconfigurable modular robotic system. IEEE/ASME transactions on mechatronics 7, 4 (2002), 431–441.

- Ken Nakagaki, Artem Dementyev, Sean Follmer, Joseph A Paradiso, and Hiroshi Ishii. 2016. ChainFORM: a linear integrated modular hardware system for shape changing interfaces. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology. 87–96.

- Ken Nakagaki, Daniel Fitzgerald, Zhiyao Ma, Luke Vink, Daniel Levine, and Hiroshi Ishii. 2019. inFORCE: Bi-directional 'Force' Shape Display for Haptic Interaction. In Proceedings of the Thirteenth International Conference on Tangible, Embedded, and Embodied Interaction. 615–623.

- Ken Nakagaki, Yingda Liu, Chloe Nelson-Arzuaga, and Hiroshi Ishii. 2020. Trans-dock: Expanding the interactivity of pin-based shape displays by docking mechanical transducers. In Proceedings of the Fourteenth International Conference on Tangible, Embedded, and Embodied Interaction. 131–142.

- Ken Nakagaki, Luke Vink, Jared Counts, Daniel Windham, Daniel Leithinger, Sean Follmer, and Hiroshi Ishii. 2016. Materiable: Rendering dynamic material properties in response to direct physical touch with shape changing interfaces. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems. 2764–2772.

- Yasutaka Nishioka, Megumi Uesu, Hisae Tsuboi, Sadao Kawamura, Wataru Masuda, Toshihiko Yasuda, and Mitsuhiro Yamano. 2017. Development of a pneumatic soft actuator with pleated inflatable structures. Advanced Robotics 31, 14 (2017), 753–762.

- Jifei Ou, Lining Yao, Daniel Tauber, Jürgen Steimle, Ryuma Niiyama, and Hiroshi Ishii. 2014. jamSheets: thin interfaces with tunable stiffness enabled by layer jamming. In Proceedings of the 8th International Conference on Tangible, Embedded and Embodied Interaction. 65–72.

- Amanda Parkes, Ivan Poupyrev, and Hiroshi Ishii. 2008. Designing kinetic interactions for organic user interfaces. Commun. ACM 51, 6 (2008), 58–65.

- Wanli Qian, Chenfeng Gao, Anup Sathya, Ryo Suzuki, and Ken Nakagaki. 2024. SHAPE-IT: Exploring Text-to-Shape-Display for Generative Shape-Changing Behaviors with LLMs. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology. 1–29.

- Majken K Rasmussen, Esben W Pedersen, Marianne G Petersen, and Kasper Hornbæk. 2012. Shape-changing interfaces: a review of the design space and open research questions. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 735–744.

- Michael L Rivera, Jack Forman, Scott E Hudson, and Lining Yao. 2020. Hydrogel-textile composites: Actuators for shape-changing interfaces. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems. 1–9.

- Anne Roudaut, Abhijit Karnik, Markus Löchtefeld, and Sriram Subramanian. 2013. Morphees: toward high "shape resolution" in self-actuated flexible mobile devices. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. 593–602.

- Thomas B Sheridan et al. 1992. Musings on telepresence and virtual presence. Presence: Teleoperators and Virtual Environments 1, 1 (1992), 120–125.

- Alexa F Siu, Eric J Gonzalez, Shenli Yuan, Jason Ginsberg, Allen Zhao, and Sean Follmer. 2017. shapeShift: A mobile tabletop shape display for tangible and haptic interaction. In Adjunct Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology. 77–79.

- Miriam Sturdee and Jason Alexander. 2018. Analysis and classification of shape-changing interfaces for design and application-based research. ACM Computing Surveys (CSUR) 51, 1 (2018), 1–32.

- Ryo Suzuki, Hooman Hedayati, Clement Zheng, James L Bohn, Daniel Szafir, Ellen Yi-Luen Do, Mark D Gross, and Daniel Leithinger. 2020. Roomshift: Room-scale dynamic haptics for vr with furniture-moving swarm robots. In Proceedings of the 2020 CHI conference on human factors in computing systems. 1–11.

- Ryo Suzuki, Ryosuke Nakayama, Dan Liu, Yasuaki Kakehi, Mark D Gross, and Daniel Leithinger. 2020. LiftTiles: constructive building blocks for prototyping room-scale shape-changing interfaces. In Proceedings of the fourteenth international conference on tangible, embedded, and embodied interaction. 143–151.

- Ryo Suzuki, Eyal Ofek, Mike Sinclair, Daniel Leithinger, and Mar Gonzalez-Franco. 2021. Hapticbots: Distributed encountered-type haptics for vr with multiple shape-changing mobile robots. In The 34th Annual ACM Symposium on User Interface Software and Technology. 1269–1281.

- Ryo Suzuki, Clement Zheng, Yasuaki Kakehi, Tom Yeh, Ellen Yi-Luen Do, Mark D Gross, and Daniel Leithinger. 2019. Shapebots: Shape-changing swarm robots. In Proceedings of the 32nd annual ACM symposium on user interface software and technology. 493–505.

- Roel Vertegaal and Ivan Poupyrev. 2008. Organic user interfaces. Commun. ACM 51, 6 (2008), 26–30.

- Keru Wang, Zhu Wang, Ken Nakagaki, and Ken Perlin. 2024. "Push-That-There": Tabletop Multi-robot Object Manipulation via Multimodal 'Object-level Instruction'. In Proceedings of the 2024 ACM Designing Interactive Systems Conference. 2497–2513.

- Keru Wang, Zhu Wang, Karl Rosenberg, Zhenyi He, Dong Woo Yoo, Un Joo Christopher, and Ken Perlin. 2022. Mixed Reality Collaboration for Complementary Working Styles. In ACM SIGGRAPH 2022 Immersive Pavilion. 1–2.

- Zhengrong Xue, Han Zhang, Jingwen Cheng, Zhengmao He, Yuanchen Ju, Changyi Lin, Gu Zhang, and Huazhe Xu. 2024. Arraybot: Reinforcement learning for generalizable distributed manipulation through touch. In 2024 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 16744–16751.

- Lining Yao, Ryuma Niiyama, Jifei Ou, Sean Follmer, Clark Della Silva, and Hiroshi Ishii. 2013. PneUI: pneumatically actuated soft composite materials for shape changing interfaces. In Proceedings of the 26th annual ACM symposium on User interface software and Technology. 13–22.

- Kentaro Yasu. 2022. MagneShape: A Non-electrical Pin-Based Shape-Changing Display. In Proceedings of the 35th Annual ACM Symposium on User Interface Software and Technology. 1–12.

- Shigeo Yoshida, Yuqian Sun, and Hideaki Kuzuoka. 2020. Pocopo: Handheld pin-based shape display for haptic rendering in virtual reality. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. 1–13.

Footnote

⁎Equal advising

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than the author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

TEI '25, March 04–07, 2025, Bordeaux / Talence, France

© 2025 Copyright held by the owner/author(s). Publication rights licensed to ACM.

ACM ISBN 979-8-4007-1197-8/25/03.

DOI: https://doi.org/10.1145/3689050.3704925